A Deep Dive into the Architecture of GPT-3 and GPT-4

GPT models have transformed artificial intelligence, particularly natural language processing, and understanding their architecture helps explain both their capabilities and their limits. GPT-3 is built on a large decoder-only transformer that enables fluent text generation and broad language understanding. GPT-4 builds on this foundation with stronger reasoning and the ability to accept images as well as text as input. OpenAI reports that GPT-4 scores 40% higher than GPT-3.5 on its internal adversarial factuality evaluations, although it has not disclosed GPT-4's size or architectural details. This blog examines GPT-3 and GPT-4 and the architectural choices behind them.

Architecture of GPT-3

Model Structure

Transformer Architecture

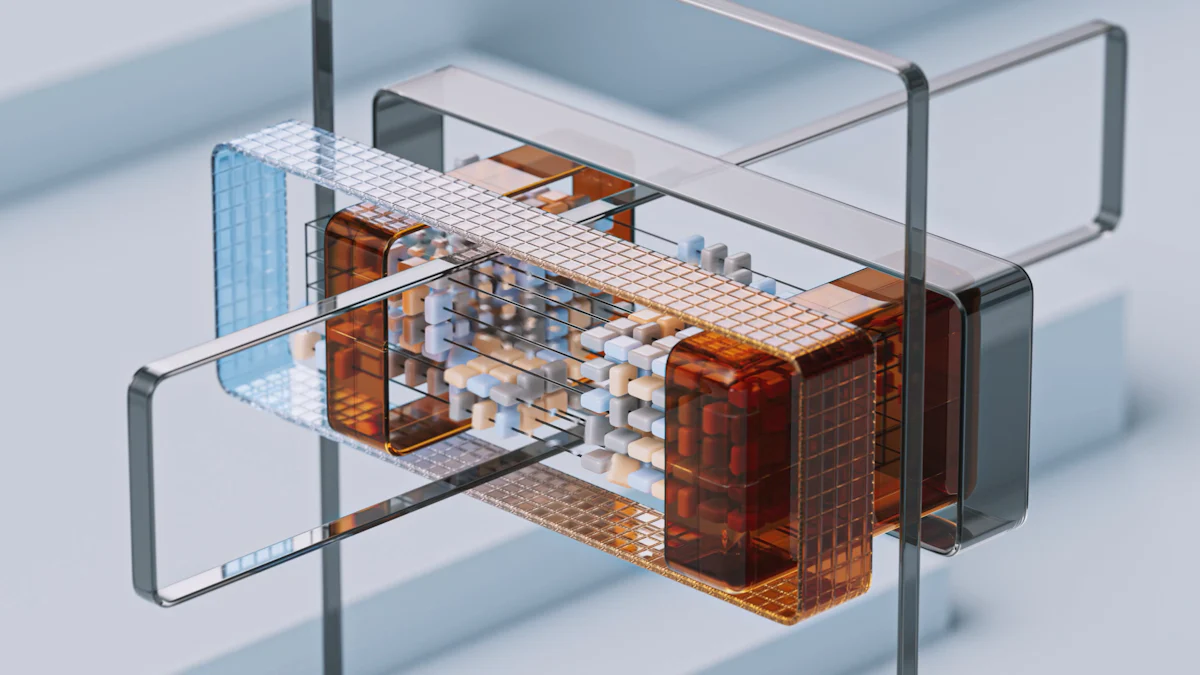

GPT-3's architecture is built on the transformer, a groundbreaking innovation in natural language processing. Specifically, GPT-3 is a decoder-only transformer: a stack of 96 identical transformer blocks, each combining masked multi-head self-attention with a feed-forward network. Self-attention lets each token weigh the earlier tokens in the input, and running many attention heads in parallel (96 in GPT-3) lets the model track several kinds of relationships between words and phrases at once. The mask restricts each position to itself and earlier positions, which is what allows the model to generate text one token at a time. This design lets GPT-3 use long-range context far more effectively than earlier recurrent architectures.
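To make the attention mechanism concrete, here is a minimal pure-Python sketch of causal (masked) multi-head self-attention. It is a toy illustration, not GPT-3's implementation: the tiny dimensions and hand-picked projection matrices (`pick_a`, `pick_b`) are hypothetical, and the residual connections, layer normalization, and feed-forward layers of a real transformer block are omitted. The key detail is the mask: each position attends only to itself and earlier positions.

```python
import math

def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    # a: n x k, b: k x m (lists of lists) -> n x m
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def causal_self_attention(x, wq, wk, wv):
    """One attention head over x (seq_len x d_model).

    Position i may only attend to positions 0..i, the causal mask used
    by decoder-only models.
    """
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d_head = len(q[0])
    out = []
    for i in range(len(x)):
        # Scaled dot-product scores against the current and earlier positions only.
        scores = [sum(q[i][c] * k[j][c] for c in range(d_head)) / math.sqrt(d_head)
                  for j in range(i + 1)]
        weights = softmax(scores)
        # Weighted mix of the visible value vectors.
        out.append([sum(weights[j] * v[j][c] for j in range(i + 1))
                    for c in range(d_head)])
    return out

def multi_head_attention(x, heads, wo):
    """heads: list of (wq, wk, wv) tuples; wo projects the concatenated heads back to d_model."""
    per_head = [causal_self_attention(x, *h) for h in heads]
    # Concatenate each position's head outputs side by side.
    concat = [sum((h[i] for h in per_head), []) for i in range(len(x))]
    return matmul(concat, wo)

# Toy input: 3 tokens, d_model = 4, split into 2 heads of size 2.
x = [[1.0, 0.0, 2.0, 0.5],
     [0.0, 1.0, 1.0, 1.5],
     [3.0, 1.0, 0.0, 0.0]]
# Hypothetical projections: head A reads dims 0-1, head B reads dims 2-3.
pick_a = [[1, 0], [0, 1], [0, 0], [0, 0]]
pick_b = [[0, 0], [0, 0], [1, 0], [0, 1]]
identity = [[1 if i == j else 0 for j in range(4)] for i in range(4)]
y = multi_head_attention(x, [(pick_a, pick_a, pick_a), (pick_b, pick_b, pick_b)], identity)
print(y[0])  # → [1.0, 0.0, 2.0, 0.5]
```

Because the first token can only attend to itself, its output reproduces its own value vectors; later tokens receive weighted mixtures of everything before them.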

Training Data and Parameters

GPT-3 was trained on a large, diverse text corpus drawn mostly from the internet: a filtered version of Common Crawl, plus WebText2, two book corpora, and English Wikipedia, totalling roughly 300 billion training tokens. The largest model has 175 billion parameters, the learned weights adjusted during training. At its release in 2020 this made GPT-3 one of the largest language models ever built, and that scale is a major reason it generates text with such fluency and coherence.
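The 175-billion figure can be roughly reproduced from GPT-3's published hyperparameters (96 layers, model width 12,288, 2,048-token context). The sketch below uses the common back-of-the-envelope approximation of about 12 × d_model² weights per transformer layer (4 × d_model² for the attention projections, 8 × d_model² for a feed-forward block four times as wide). The 50,257-token vocabulary is an assumption (GPT-2's tokenizer), and biases and layer-norm weights are ignored, so treat the result as an estimate.

```python
def approx_gpt_params(n_layers, d_model, vocab_size, n_ctx):
    """Rough parameter count for a GPT-style decoder-only transformer.

    Per layer: Q, K, V and output projections (4 * d_model^2) plus a
    feed-forward block with a 4x hidden size (8 * d_model^2).
    Biases and layer-norm weights are ignored; token and learned
    position embeddings are counted once.
    """
    per_layer = 12 * d_model ** 2
    embeddings = vocab_size * d_model + n_ctx * d_model
    return n_layers * per_layer + embeddings

# GPT-3 175B settings; vocab_size=50257 is an assumed GPT-2-style vocabulary.
total = approx_gpt_params(n_layers=96, d_model=12288, vocab_size=50257, n_ctx=2048)
print(f"{total / 1e9:.1f} billion")  # → 174.6 billion
```

The estimate lands within about half a percent of the reported 175 billion, and nearly all of it comes from the stacked layers rather than the embeddings.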

Functionality and Capabilities

Language Understanding

GPT-3 handles a wide range of language-understanding tasks. It can pick up nuanced meanings and infer what a task requires from only a few examples in the prompt, a capability the GPT-3 paper calls few-shot (in-context) learning, with no further training. This ability stems from its scale, its extensive training, and the transformer design. GPT-3 is effective at summarizing text, answering questions, and translating between languages, which makes it a valuable tool for many applications.
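GPT-3's ability to infer a task from limited information is usually exercised through few-shot prompting: a task description and a handful of solved examples go into the prompt, and the model continues the pattern without any weight updates. The sketch below assembles such a prompt. The `build_few_shot_prompt` helper is hypothetical, and the `=>` layout mirrors the English-to-French example in the GPT-3 paper.

```python
def build_few_shot_prompt(task, examples, query):
    """Assemble a few-shot prompt: a task description, worked examples,
    then the new input with its answer left blank for the model to complete."""
    lines = [task]
    for source, target in examples:
        lines.append(f"{source} => {target}")
    # The trailing "=>" invites the model to supply the missing answer.
    lines.append(f"{query} =>")
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    "Translate English to French:",
    [("sea otter", "loutre de mer"), ("cheese", "fromage")],
    "peppermint",
)
print(prompt)
```

The model never sees an explicit instruction format beyond these lines; it is expected to recognize the pattern and produce the French translation after the final `=>`.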

Text Generation

The text generation capabilities of GPT-3 are impressive. It can produce human-like text that is coherent and contextually relevant. This feature results from its large parameter set and the diverse training data it has processed. GPT-3 can generate creative content, write essays, and even compose poetry. Its ability to mimic human writing styles makes it a powerful asset in content creation and other fields requiring natural language generation.
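GPT-3 generates text autoregressively: it predicts a score (logit) for every token in its vocabulary, samples one token, appends it to the sequence, and repeats. The minimal sketch below shows that loop with temperature-scaled sampling. `toy_model` is a hypothetical three-token stand-in for the real network, and the names `generate` and `sample_with_temperature` are illustrative rather than part of any library.

```python
import math
import random

def sample_with_temperature(logits, temperature, rng):
    """Pick a token index from unnormalized scores (logits).

    Dividing by a low temperature sharpens the distribution toward the
    highest-scoring token; a high temperature flattens it.
    """
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    weights = [math.exp(s - m) for s in scaled]  # softmax numerators
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]

def generate(next_token_logits, prompt, steps, temperature=1.0, seed=0):
    """Autoregressive loop: each sampled token is appended and fed back in."""
    rng = random.Random(seed)
    tokens = list(prompt)
    for _ in range(steps):
        logits = next_token_logits(tokens)
        tokens.append(sample_with_temperature(logits, temperature, rng))
    return tokens

def toy_model(tokens):
    """Hypothetical 'model' over a 3-token vocabulary that strongly
    prefers the token after the last one (0 -> 1 -> 2 -> 0 ...)."""
    logits = [0.0, 0.0, 0.0]
    logits[(tokens[-1] + 1) % 3] = 10.0
    return logits

print(generate(toy_model, prompt=[0], steps=5, temperature=0.1))  # → [0, 1, 2, 0, 1, 2]
```

At a low temperature the sampling is nearly greedy, so the toy model reproduces its preferred cycle; raising `temperature` makes less likely tokens appear more often, trading predictability for variety.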

Overview of GPT Models

The journey of GPT models has been marked by significant advancements in artificial intelligence, particularly in natural language processing. Each iteration has built upon the previous, enhancing capabilities and expanding applications.

Evolution of GPT Models

From GPT-1 to GPT-2

The series began with GPT-1 (2018), which applied the transformer architecture, introduced in 2017, to generative pre-training: a 117-million-parameter model first trained to predict the next word on unlabeled text and then fine-tuned for specific tasks. It demonstrated the potential of transformers for understanding and generating human-like text. GPT-2 (2019) scaled the approach to 1.5 billion parameters, markedly improving the coherence and relevance of the text it generated. GPT-3 then expanded on these foundations with a massive leap in scale and capability.

Advancements in GPT-3

GPT-3 marked a pivotal moment in the evolution of language models. With 175 billion parameters, more than 100 times as many as GPT-2, it was one of the largest models of its time. Its transformer design let it perform a wide range of tasks with remarkable fluency, often from just a few examples supplied in the prompt rather than task-specific fine-tuning. Its human-like text set a new standard in AI technology, and its extensive training data made it a versatile tool for both language understanding and text generation.

Introduction to GPT-4

Key Features of GPT-4

Released in March 2023, GPT-4 is a significant advance over its predecessors. It is a large multimodal model: it accepts both text and images as input and produces text as output. This broadens the problems it can tackle and improves accuracy on complex tasks. OpenAI describes GPT-4 as a transformer-based model pre-trained to predict the next token and then fine-tuned with reinforcement learning from human feedback (RLHF), so it continues the lineage of GPT-3, but it has not published further architectural details. The improvements OpenAI does report center on contextual understanding and reasoning, which make GPT-4 a powerful tool for challenging tasks across many domains.
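As an illustration of image input, the sketch below builds a request body in the message format that OpenAI's Chat Completions API uses for mixed text-and-image content. It only constructs and prints the JSON and does not call the API. `MODEL_NAME` and the image URL are placeholders, and image input was not broadly available in the API when GPT-4 launched, so the supported models should be checked against the current API reference.

```python
import json

def build_image_question(model, question, image_url):
    """Build a Chat Completions request body pairing a text question with an image."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                # Mixed content: a list of typed parts instead of a plain string.
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }

payload = build_image_question(
    "MODEL_NAME",  # placeholder: substitute a vision-capable model
    "What is unusual about this image?",
    "https://example.com/photo.jpg",  # placeholder URL
)
print(json.dumps(payload, indent=2))
```

The model's answer comes back as ordinary text, consistent with GPT-4 taking images as input but producing only text as output.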

Improvements over GPT-3

GPT-4 builds on the strengths of GPT-3 and refines them. According to OpenAI, it scores 40% higher than GPT-3.5 on internal evaluations of factual accuracy, reflecting broader general knowledge and stronger reasoning, and it performs far better on professional and academic exams. These advances make GPT-4 a significant step forward in AI, pushing the boundaries of what is possible with language models, although they come at a higher price per token than GPT-3.5.

Technical Architecture of GPT-4

Enhanced Model Structure

Innovations in Transformer Design

GPT-4 represents a significant leap in AI technology, but OpenAI's technical report deliberately withholds details of its architecture, model size, training compute, and training method, so specific changes to its transformer design cannot be confirmed. What OpenAI does describe is the effect of post-training: after pre-training, GPT-4 was fine-tuned with reinforcement learning from human feedback (RLHF), a process OpenAI credits with improved factuality and adherence to desired behavior, including fewer irrelevant or nonsensical outputs. Developers can also steer GPT-4 toward specific tasks and industries by describing the desired style and task in a system message.
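OpenAI has not published GPT-4's post-training recipe, but the RLHF approach described in OpenAI's earlier InstructGPT work begins by training a reward model on human comparisons between pairs of responses. The sketch below shows that pairwise loss, -log(sigmoid(r_chosen - r_rejected)), on scalar rewards. Whether GPT-4 used exactly this objective is an assumption, and the function name and scores are hypothetical.

```python
import math

def pairwise_reward_loss(reward_chosen, reward_rejected):
    """Return -log(sigmoid(reward_chosen - reward_rejected)).

    The loss is small when the reward model scores the human-preferred
    response above the rejected one, and large when it ranks them the
    wrong way round.
    """
    diff = reward_chosen - reward_rejected
    # Two algebraically equal forms of -log(sigmoid(diff)), chosen so that
    # math.exp never receives a large positive argument and overflows.
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))

# Hypothetical reward-model scores for two candidate replies to one prompt.
print(round(pairwise_reward_loss(2.0, -1.0), 4))  # preferred reply ranked higher: low loss
print(round(pairwise_reward_loss(-1.0, 2.0), 4))  # ranked the wrong way: high loss
```

Minimizing this loss over many human comparisons teaches the reward model to rank responses the way people do; the language model is then optimized against that learned reward.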

Expanded Training Data

GPT-4 was pre-trained on a mix of publicly available data (such as internet data) and data licensed from third-party providers, but OpenAI has not disclosed the size or composition of that dataset, nor GPT-4's parameter count. The frequently repeated claim that GPT-4 has 100 trillion parameters, supposedly matching the complexity of the human brain, is an unconfirmed rumor rather than a published figure. What is documented is the result: more accurate and contextually relevant responses than GPT-3.5 across a broad range of benchmarks.

Advanced Capabilities

Improved Language Processing

GPT-4's language processing goes beyond GPT-3's. It is better at giving concise answers and structured scientific explanations, and it shows stronger reasoning and creativity. Its context window, the amount of text it can consider at once, also grew: GPT-4 launched in 8,192-token and 32,768-token versions, compared with 4,096 tokens for GPT-3.5. The larger window lets the model work with long documents and complex, multi-step tasks in a single request.
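A practical consequence of the context window is that long conversations must be trimmed to fit. The sketch below keeps the most recent messages that fit within a token budget while reserving room for the reply. The helper names are hypothetical, and whitespace splitting is a crude stand-in for the model's real tokenizer, which counts tokens differently; the window sizes are GPT-4's launch limits of 8,192 and 32,768 tokens.

```python
def fit_to_context(messages, count_tokens, context_window, reserve_for_reply):
    """Keep the most recent messages whose combined token count, plus the
    tokens reserved for the model's reply, fits in the context window."""
    budget = context_window - reserve_for_reply
    kept = []
    used = 0
    # Walk backwards so the newest messages are kept first.
    for msg in reversed(messages):
        cost = count_tokens(msg)
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    return kept

def whitespace_tokens(text):
    """Illustrative stand-in tokenizer: one token per whitespace-separated word."""
    return len(text.split())

# Three hypothetical messages of 3,000, 3,000 and 1,500 "tokens".
history = ["word " * 3000, "word " * 3000, "word " * 1500]
print(len(fit_to_context(history, whitespace_tokens, 8192, 1000)))   # → 2
print(len(fit_to_context(history, whitespace_tokens, 32768, 1000)))  # → 3
```

With the 8K window, the oldest message no longer fits once 1,000 tokens are reserved for the reply; the 32K window holds the whole history.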

Enhanced Text Generation

The text generation capabilities of GPT-4 have been refined, building on the strengths of GPT-3. GPT-4 can produce creative writing, comprehensive programming assistance, and detailed literary analysis, and its coherent, relevant, human-like text makes it a powerful asset in content creation. It also accepts images alongside text, so it can describe, interpret, and answer questions about visual content. This versatility pushes the boundaries of what language models can achieve and opens new possibilities for applications across different domains.

Comparative Analysis of GPT-3 and GPT-4

Performance Metrics

Accuracy and Efficiency

GPT-4 demonstrates significant improvements in accuracy over GPT-3 and GPT-3.5. OpenAI reports that it scored 40% higher than GPT-3.5 on its internal adversarial factuality evaluations, reflecting a stronger ability to understand and generate language. OpenAI attributes much of this to its pre-training and RLHF post-training; because GPT-4's parameter count and architecture remain undisclosed, the gain cannot be tied to a specific model size. In practice, GPT-4 delivers more coherent and contextually accurate responses than GPT-3.

Computational Requirements

GPT-4 is more demanding to run than its predecessors, and OpenAI's pricing reflects this. Its context window of up to 32,768 tokens, versus 4,096 for GPT-3.5 and 2,048 for the original GPT-3 models, lets it handle longer inputs and more complex tasks, but every token in that window must be processed. At launch, completions from the 8K-context GPT-4 model cost $0.06 per 1,000 tokens, 30 times the $0.002 per 1,000 tokens charged for GPT-3.5 Turbo. Despite the higher cost, GPT-4's stronger performance justifies the investment for many applications.
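The 30× figure follows directly from OpenAI's launch list prices (US dollars per 1,000 tokens, March 2023). The sketch below encodes those prices, which are historical and have since changed, and estimates the cost of a single request. The dictionary keys are labels chosen for illustration, and the 1,500/500 token split is a hypothetical request.

```python
# Launch list prices in USD per 1,000 tokens (March 2023); historical values
# assumed here for illustration only.
PRICES_PER_1K = {
    "gpt-3.5-turbo": {"prompt": 0.002, "completion": 0.002},
    "gpt-4": {"prompt": 0.03, "completion": 0.06},      # 8K context
    "gpt-4-32k": {"prompt": 0.06, "completion": 0.12},  # 32K context
}

def request_cost(model, prompt_tokens, completion_tokens):
    """Estimated cost in USD of one request under the table above."""
    p = PRICES_PER_1K[model]
    return (prompt_tokens * p["prompt"] + completion_tokens * p["completion"]) / 1000

# Completion-token price ratio behind the "30 times" comparison.
ratio = PRICES_PER_1K["gpt-4"]["completion"] / PRICES_PER_1K["gpt-3.5-turbo"]["completion"]
print(f"completion price ratio: {ratio:.0f}x")

# A hypothetical request with 1,500 prompt tokens and 500 completion tokens.
for model in ("gpt-3.5-turbo", "gpt-4"):
    print(f"{model}: ${request_cost(model, 1500, 500):.4f}")
```

Because prompt tokens were priced at a 15× premium rather than 30×, the overall multiplier for a given request depends on its mix of prompt and completion tokens.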

Use Cases and Applications

Industry Applications

GPT-4's enhanced capabilities make it a valuable asset across many industries. Because it accepts images as well as text, it opens new possibilities for applications in fields such as healthcare, finance, and entertainment. GPT-4 excels at tasks that require advanced reasoning, creativity, and adaptability, and industries benefit from its ability to generate high-quality content, provide detailed analyses, and offer innovative solutions to complex problems.

Research and Development

In research and development, GPT-4 raises the bar for natural language understanding and generation. Its reasoning ability and contextual accuracy make it a useful tool for academic research, scientific exploration, and technological innovation, where researchers use it to help analyze data, write code, draft and review text, and explore ideas. Its ability to process longer contexts and generate high-quality responses can make research projects more efficient. GPT-4's advances push the boundaries of what is possible in AI, paving the way for future innovations.

GPT-3 and GPT-4 differ markedly. GPT-4's image input, longer context window, and stronger reasoning mark a significant leap from GPT-3, even though OpenAI has disclosed far less about GPT-4's architecture. These advances have reshaped the AI landscape, enhancing language processing and text generation. However, ethical concerns persist, such as potential misuse and data privacy issues. Future GPT models will likely focus on improving ethical safeguards and reducing biases. As AI technology evolves, these models will continue to push boundaries, offering new possibilities while raising societal and ethical challenges that must be addressed.
