How Transformer Models Work in Generative AI

You might wonder how transformer models became a cornerstone of artificial intelligence. Built around a mechanism called self-attention, they substantially improved natural language understanding. Because they process the tokens of a sequence in parallel rather than one at a time, they also train more efficiently than earlier sequential models. That combination of accuracy and efficiency is why they sit at the heart of today's generative AI. They have also shown significant performance gains in specialized areas such as medical problem summarization and clinical coding. Once you understand how these models work, you can better appreciate their impact on AI technologies.

Understanding Transformer Models

Transformer models have reshaped the field of artificial intelligence by offering a robust framework for processing and generating data. To appreciate their capabilities, start with their architecture and key features.

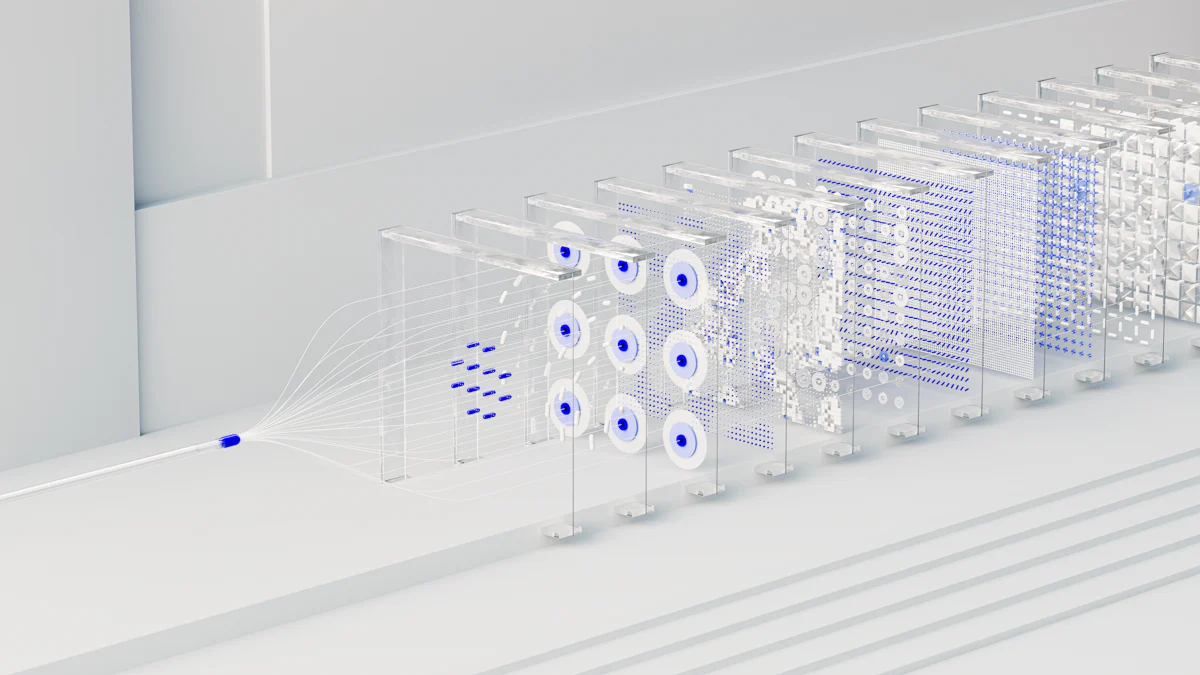

Architecture of Transformer Models

Self-Attention Mechanism

The self-attention mechanism is the core of a transformer model. For each token in a sequence, it computes how relevant every other token is and builds a new representation of that token as a weighted combination of the others. Because any two positions are connected directly, it captures long-range dependencies more effectively than recurrent models, which must pass information along step by step. It also processes all tokens simultaneously, which helps the model understand context and relationships within the data. When generating text, for instance, the model can focus on the most relevant parts of the input, producing coherent and contextually appropriate output.
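The weighting described above can be sketched in plain Python. This is a minimal version of scaled dot-product attention under simplifying assumptions: real transformers first multiply each token embedding by learned matrices to obtain separate query, key, and value vectors, and they run several attention heads in parallel, whereas here the toy embeddings are used directly as queries, keys, and values. The function names and values are illustrative.

```python
import math

def softmax(scores):
    # Subtract the max score before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors.

    Each output vector is a weighted average of all value vectors, with
    weights softmax(q . k / sqrt(d_k)) for the query at that position.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        weights = softmax([dot(q, k) / math.sqrt(d_k) for k in keys])
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy 2-dimensional token embeddings (hypothetical values).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(x, x, x)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row sums to 1 and shows how strongly one token attends to each of the three tokens. The first token, for example, gives equal weight to itself and to the third token, because both share its first component.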

Encoder-Decoder Structure

The original transformer also uses an encoder-decoder structure. The encoder processes the input sequence and transforms it into a set of continuous representations. The decoder then generates the output one token at a time, attending both to the tokens it has already produced and, through cross-attention, to the encoder's representations. This structure suits tasks like language translation, where understanding the entire input sequence is crucial for producing an accurate translation. Many generative language models, such as the GPT family, keep only the decoder stack. With this architecture, transformer models excel at complex language tasks and have outperformed earlier models such as RNNs.
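The flow between encoder and decoder can be illustrated with a stripped-down sketch. It assumes a single layer with no learned projection matrices, no feed-forward sublayers, and no normalization, and the helper names `attend`, `encode`, and `decoder_step` are hypothetical. What it does show is the key wiring: in cross-attention, the queries come from the decoder while the keys and values come from the encoder's output.

```python
import math

def attend(queries, keys, values):
    # Scaled dot-product attention: each query receives a weighted average of values.
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        z = sum(exps)
        outputs.append([sum(e / z * v[j] for e, v in zip(exps, values))
                        for j in range(len(values[0]))])
    return outputs

def encode(source):
    # Toy encoder: one self-attention pass over the whole input sequence.
    return attend(source, source, source)

def decoder_step(target_prefix, memory):
    # Self-attention for the newest target position over the tokens generated so far
    # (the newest position may see the whole prefix, so no causal mask is needed here).
    newest = attend(target_prefix[-1:], target_prefix, target_prefix)
    # Cross-attention: the query comes from the decoder, keys and values from the encoder.
    return attend(newest, memory, memory)[0]

source = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # hypothetical source embeddings
memory = encode(source)
state = decoder_step([[0.2, 0.8]], memory)      # hypothetical start-token embedding
print(state)
```

Because the cross-attention output is a weighted average of the encoder outputs, the decoder state always lies within the range spanned by the encoder's representations of the source.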

Key Features of Transformer Models

Parallelization

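One of the standout features of transformer models is that their computations parallelize well. Recurrent models must process a sequence one token at a time, because each step depends on the previous one. A transformer, by contrast, processes every position of a training sequence at once, which maps efficiently onto GPUs and other parallel hardware. This greatly speeds up training and makes it practical to learn from very large datasets. During generation, output tokens are still produced one after another, but the input prompt can be processed in parallel, which helps keep interactive applications such as chatbots and virtual assistants responsive.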
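The difference in dependency structure can be made concrete with a toy sketch on scalar tokens (hypothetical values, no learned weights). The recurrent loop cannot start step t until step t-1 has finished, while each attention output depends only on the inputs, so all positions can be computed independently. A thread pool is used here only to show that independence; in CPython it will not actually speed up this pure-Python arithmetic, and real frameworks instead batch the work into matrix operations on a GPU.

```python
import math
from concurrent.futures import ThreadPoolExecutor

def rnn_forward(xs, w=0.5, u=1.0):
    # Each hidden state needs the previous one, so the steps must run in order.
    h, states = 0.0, []
    for x in xs:
        h = math.tanh(w * h + u * x)
        states.append(h)
    return states

def attention_row(i, xs):
    # Output i depends only on the inputs, never on other outputs.
    scores = [xs[i] * xj for xj in xs]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return sum(e / z * xj for e, xj in zip(exps, xs))

def attention_forward(xs):
    # Every position is independent, so all rows can be dispatched at once.
    with ThreadPoolExecutor() as pool:
        return list(pool.map(lambda i: attention_row(i, xs), range(len(xs))))

xs = [0.1, -0.4, 0.8, 0.3]  # hypothetical scalar "embeddings"
print(rnn_forward(xs))
print(attention_forward(xs))
```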

Scalability

Scalability is another strength of transformer models. Their performance tends to keep improving as model size, training data, and compute grow together: larger models trained on more data often achieve better accuracy and generalization, although the cost of training and running them grows as well. This scalability opens up possibilities in domains from healthcare diagnostics to financial forecasting, and by scaling transformer models, researchers have explored novel applications that push the boundaries of what AI can achieve.
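One way to see what "scaling up the model size" means is a back-of-the-envelope parameter count. The sketch below is an approximation under stated assumptions: it counts only the large weight matrices (four attention projections and two feed-forward matrices per layer, with the common feed-forward width of four times the model dimension) plus a token-embedding table, and it ignores biases, layer normalization, and positional embeddings. The function name is hypothetical.

```python
def approx_transformer_params(n_layers, d_model, vocab_size, d_ff=None):
    """Rough parameter count: per-layer weight matrices plus token embeddings."""
    if d_ff is None:
        d_ff = 4 * d_model              # common convention, assumed here
    attention = 4 * d_model * d_model   # Q, K, V, and output projections
    feed_forward = 2 * d_model * d_ff   # up- and down-projection matrices
    embeddings = vocab_size * d_model   # token-embedding table
    return n_layers * (attention + feed_forward) + embeddings

print(approx_transformer_params(12, 768, 50257))  # → 123532032
print(approx_transformer_params(24, 1024, 50257))
```

With the layer count, width, and vocabulary size published for GPT-2 small, the estimate comes to about 124 million parameters, close to that model's commonly cited size. Because per-layer cost grows with the square of `d_model`, widening a model raises its parameter count much faster than adding the same proportion of layers.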

Scientific Research Findings: Studies have shown that transformer models have significantly advanced artificial intelligence and natural language processing, and in healthcare they have helped improve diagnostic accuracy and tailor treatment plans. However, they also raise concerns about bias and privacy.

How Transformer Models Function in Generative AI

Transformer models have become a cornerstone of generative AI, offering strong capabilities for processing and generating data. To understand how they function, it helps to look at two phases: training and generation.

Training Process

Data Preprocessing

Before you train a transformer model, you must preprocess the data. This step involves cleaning and organizing it: removing irrelevant or duplicated content and formatting the rest consistently. The text is then tokenized, that is, split into tokens (words or subword pieces) that are mapped to integer IDs from a fixed vocabulary, because the model operates on these IDs rather than on raw text. Careful preprocessing helps the model learn the essential patterns and structures in the data, and it directly affects the model's ability to generate accurate and coherent outputs.
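The cleaning and tokenization steps can be sketched with the standard library alone. This is a simplified, hypothetical pipeline: the cleaning rules, the `<pad>`/`<unk>` special tokens, and the word-level tokenizer are illustrative choices, whereas production models typically use learned subword tokenizers such as byte-pair encoding.

```python
import re

def clean(text):
    # Lowercase, drop HTML-like tags, and collapse runs of whitespace.
    text = re.sub(r"<[^>]+>", " ", text.lower())
    return re.sub(r"\s+", " ", text).strip()

def preprocess(docs):
    # Clean every document, skip empty ones, and drop exact duplicates (order kept).
    cleaned = (clean(d) for d in docs)
    return list(dict.fromkeys(c for c in cleaned if c))

def tokenize(text):
    # Split into words and individual punctuation marks.
    return re.findall(r"\w+|[^\w\s]", text)

def build_vocab(corpus):
    # Reserve IDs 0 and 1 for padding and unknown tokens, then add tokens in sorted order.
    tokens = {tok for doc in corpus for tok in tokenize(doc)}
    vocab = {"<pad>": 0, "<unk>": 1}
    for tok in sorted(tokens):
        vocab[tok] = len(vocab)
    return vocab

def encode(text, vocab):
    # Map each token to its ID, falling back to <unk> for unseen tokens.
    return [vocab.get(tok, vocab["<unk>"]) for tok in tokenize(clean(text))]

corpus = preprocess(["Hello,   world!", "hello, world!", "Transformers attend."])
vocab = build_vocab(corpus)
print(corpus)                                 # → ['hello, world!', 'transformers attend.']
print(encode("Hello, transformers?", vocab))  # → [6, 3, 7, 1]
```

The first two inputs collapse into one document after cleaning, and the unseen "?" maps to the `<unk>` ID.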

Model Training

Once the data is preprocessed, you can begin training. You feed the model large datasets, and it learns to recognize patterns and relationships within complex sequences. For a generative language model, the typical objective is next-token prediction: the model predicts each token from the ones before it, and a loss function (usually cross-entropy) measures how far its predictions are from the actual tokens. Training repeatedly adjusts the model's parameters by gradient descent, with gradients computed by backpropagation, to reduce this loss. As a result, the model becomes adept at generating human-like text and other forms of data.
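The loss-minimization loop can be illustrated on the smallest possible next-token model: a table of bigram logits, where the score for the next token depends only on the current one. This is not a transformer, and the learning rate, epoch count, and toy token sequence are arbitrary assumptions, but it uses the same ingredients described above: a softmax over the vocabulary, a cross-entropy loss on the true next token, and gradient-descent updates. For softmax followed by cross-entropy, the gradient with respect to the logits is the predicted probabilities minus the one-hot target; in a real transformer, backpropagation carries that gradient further back through every layer.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]

def train_bigram(token_ids, vocab_size, lr=0.5, epochs=50):
    """Fit next-token logits W[prev][next] by gradient descent on cross-entropy."""
    W = [[0.0] * vocab_size for _ in range(vocab_size)]
    pairs = list(zip(token_ids[:-1], token_ids[1:]))
    history = []
    for _ in range(epochs):
        grads = [[0.0] * vocab_size for _ in range(vocab_size)]
        loss = 0.0
        for prev, nxt in pairs:
            probs = softmax(W[prev])
            loss -= math.log(probs[nxt])
            # Gradient of cross-entropy w.r.t. logits: probabilities minus one-hot target.
            for j in range(vocab_size):
                grads[prev][j] += probs[j] - (1.0 if j == nxt else 0.0)
        # Average the gradient over all pairs and take one descent step.
        for i in range(vocab_size):
            for j in range(vocab_size):
                W[i][j] -= lr * grads[i][j] / len(pairs)
        history.append(loss / len(pairs))
    return W, history

ids = [0, 1, 2, 0, 1, 2, 0, 1]  # hypothetical token-ID sequence
W, history = train_bigram(ids, vocab_size=3)
print(round(history[0], 4), round(history[-1], 4))
```

The first recorded loss is ln 3, the value for a model that still guesses uniformly among three tokens, and it falls as training proceeds.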

Generation Process

Text Generation

In the generation process, transformer models excel at producing coherent and contextually relevant text. You can use them to generate articles, stories, or even poetry. Generation is autoregressive: given the input (the prompt), the model predicts a probability distribution over the next token, selects a token from it, appends that token to the sequence, and repeats until it produces an end-of-sequence token or reaches a length limit. The selection strategy matters: always taking the most likely token (greedy decoding) gives predictable output, while sampling with a temperature adds variety. This capability has transformed natural language processing applications, enabling content that closely resembles human writing.
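The generation loop itself is simple enough to sketch. The "model" below is a hypothetical hard-coded table of next-token scores that looks only at the previous token; a real transformer would compute these scores from the entire prefix with self-attention. The loop shows the autoregressive pattern and the two selection strategies mentioned above, greedy decoding and temperature sampling.

```python
import math
import random

# Hypothetical toy "model": next-token scores given only the previous token.
NEXT_TOKEN_LOGITS = {
    "<s>": {"the": 2.0, "a": 1.0},
    "the": {"model": 2.0, "cat": 1.5},
    "a": {"cat": 2.0, "model": 0.5},
    "model": {"generates": 2.5, "</s>": 0.5},
    "cat": {"sleeps": 2.0, "</s>": 1.0},
    "generates": {"text": 3.0},
    "sleeps": {"</s>": 3.0},
    "text": {"</s>": 3.0},
}

def next_token(prefix, temperature=0.0, rng=random):
    logits = NEXT_TOKEN_LOGITS[prefix[-1]]
    if temperature == 0.0:
        return max(logits, key=logits.get)  # greedy: pick the highest score
    # Temperature sampling: draw from softmax(logits / temperature).
    tokens = list(logits)
    m = max(logits.values())
    weights = [math.exp((logits[t] - m) / temperature) for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

def generate(max_new_tokens=10, temperature=0.0, rng=random):
    prefix = ["<s>"]
    for _ in range(max_new_tokens):
        tok = next_token(prefix, temperature, rng)
        if tok == "</s>":  # stop at the end-of-sequence token
            break
        prefix.append(tok)
    return " ".join(prefix[1:])

print(generate())                                      # → the model generates text
print(generate(temperature=1.0, rng=random.Random(0)))
```

Greedy decoding always yields the same sentence, while sampling can wander into other paths such as "a cat sleeps"; raising the temperature flattens the distribution and makes less likely tokens more common.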

Image Generation

Transformer models also play a significant role in image generation. Because a transformer operates on sequences, an image is first represented as one, for example as a grid of patches or of discrete image tokens read in a fixed order. You provide the model with a dataset of images, and it learns to generate new images that reflect the characteristics of that data. This application has opened new avenues in fields like art and design, and the ability to generate high-quality images demonstrates the versatility of transformer models in generative AI.
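Turning an image into a sequence, and a generated sequence back into an image, can be sketched as follows. This assumes a single-channel image stored as a list of rows whose sides divide evenly by the patch size, and the function names are illustrative. Many image-generating transformers work on learned, compressed image tokens rather than raw pixel patches, so treat this only as an illustration of the image-to-sequence idea.

```python
def patchify(image, patch):
    """Split an H x W grid into patch x patch blocks, flattening each block
    into one vector and ordering blocks left-to-right, top-to-bottom."""
    h, w = len(image), len(image[0])
    assert h % patch == 0 and w % patch == 0, "image must divide evenly into patches"
    seq = []
    for r in range(0, h, patch):
        for c in range(0, w, patch):
            seq.append([image[r + i][c + j] for i in range(patch) for j in range(patch)])
    return seq

def unpatchify(seq, h, w, patch):
    """Inverse of patchify: place each flattened patch back on the grid."""
    image = [[0] * w for _ in range(h)]
    per_row = w // patch
    for idx, vec in enumerate(seq):
        r0, c0 = (idx // per_row) * patch, (idx % per_row) * patch
        for k, val in enumerate(vec):
            image[r0 + k // patch][c0 + k % patch] = val
    return image

image = [[r * 4 + c for c in range(4)] for r in range(4)]  # toy 4 x 4 image
tokens = patchify(image, 2)
print(tokens[0])                               # → [0, 1, 4, 5]
print(unpatchify(tokens, 4, 4, 2) == image)    # → True
```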

Comparison with Other AI Models

When you explore the landscape of artificial intelligence, you'll find that Transformer Models stand out due to their unique capabilities. Let's delve into how they compare with other AI models like RNNs and CNNs.

Transformer vs. RNNs

Handling Long-Range Dependencies

Transformer Models excel at handling long-range dependencies, a task where RNNs often struggle. RNNs process data sequentially, so information from an early token must pass through every intermediate step to reach a later one, and its influence (along with the gradient signal used in training) tends to fade along the way. Transformer Models instead use self-attention to connect every pair of positions directly, weighing the importance of all tokens in a sequence simultaneously. This lets them capture complex contextual relationships more effectively, which is why they are well suited to tasks that require a deep understanding of context, such as language translation and text generation.
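A toy calculation shows why distance matters for a recurrent model but not for attention. It assumes a linear RNN with a fixed recurrent weight `w`, and an attention layer in which the relevant early token gets a high query-key score `match_score` while unrelated tokens score 0. Both setups are hypothetical simplifications that ignore positional information. Gated RNNs such as LSTMs were designed to slow this decay, but information still has to travel through every intermediate step.

```python
import math

def linear_rnn_influence(w, distance):
    """Run h_t = w * h_{t-1} + x_t with a single 1.0 input `distance` steps
    before the end; the final state equals w ** distance."""
    h = 0.0
    for x in [1.0] + [0.0] * distance:
        h = w * h + x
    return h

def attention_weight_on_first(match_score, n_fillers):
    """Attention weight the final query puts on a matching first token when
    n_fillers unrelated tokens (score 0) sit in between."""
    scores = [match_score] + [0.0] * n_fillers
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    return exps[0] / sum(exps)

for distance in (10, 100):
    print(distance,
          linear_rnn_influence(0.5, distance),
          attention_weight_on_first(10.0, distance))
```

The RNN's signal shrinks geometrically with distance, while the attention weight stays close to 1, because the relevant token is a single step away no matter how many tokens lie in between.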

Computational Efficiency

When it comes to computational efficiency, Transformer Models have a clear advantage over RNNs during training. Because they process all positions of a sequence at once instead of step by step, they make much better use of parallel hardware, so training on large datasets is typically much faster. This efficiency supports rapid model development and deployment. The trade-off is that the cost of self-attention grows quadratically with sequence length, which makes very long inputs expensive.
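The per-layer costs reported in the original Transformer paper make this trade-off precise. Here n is the sequence length and d the representation dimension; the figures are asymptotic and ignore constant factors.

```latex
\begin{aligned}
&\text{Self-attention:} && O(n^{2} \cdot d)\ \text{operations}, && O(1)\ \text{sequential steps}, && O(1)\ \text{maximum path length} \\
&\text{Recurrent:}      && O(n \cdot d^{2})\ \text{operations}, && O(n)\ \text{sequential steps}, && O(n)\ \text{maximum path length}
\end{aligned}
```

Since n^2 d < n d^2 exactly when n < d, self-attention needs fewer operations per layer than a recurrent layer for sequences shorter than the representation dimension, and its sequential work is constant in every case. Once n grows well beyond d, the quadratic term dominates.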

Transformer vs. CNNs

Application Domains

While CNNs have traditionally dominated image processing tasks, Transformer Models are making significant inroads into this domain. The Vision Transformer (ViT), for example, splits an image into patches and treats them as a sequence of tokens. You can use Transformer Models for tasks that require understanding complex patterns and relationships, such as image generation and classification. A convolution looks only at a small local neighborhood and needs many stacked layers to relate distant pixels, whereas self-attention can relate any two patches in a single layer. This ability to capture long-range dependencies and contextual information makes them versatile: whether you're working on natural language processing or computer vision, Transformer Models offer a robust framework for tackling diverse challenges.
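The contrast between local convolutions and global attention can be quantified with the standard receptive-field formula for stacked stride-1, undilated convolutions: 1 + L(k - 1) pixels along each axis for L layers of kernel size k. The sketch ignores pooling and strided convolutions, which real CNNs use to grow their receptive field faster, so it exaggerates the gap; the function names are illustrative. A single self-attention layer over image patches, by comparison, lets every patch attend to every other patch.

```python
def conv_receptive_field(kernel_size, n_layers):
    """Pixels seen along one axis by n stacked stride-1, undilated convolutions."""
    return 1 + n_layers * (kernel_size - 1)

def layers_to_cover(width, kernel_size):
    """Smallest number of such conv layers whose receptive field spans `width` pixels."""
    n = 0
    while conv_receptive_field(kernel_size, n) < width:
        n += 1
    return n

print(conv_receptive_field(3, 1))  # → 3
print(layers_to_cover(224, 3))     # → 112
```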

Performance Metrics

In terms of performance metrics, Transformer Models often outperform CNNs, especially when they are trained on very large datasets; with smaller datasets, the built-in locality assumptions of CNNs can still give them an edge. Their scalability allows you to build models with more parameters, and as you scale up Transformer Models you often see improvements in accuracy across various benchmarks. This scalability, combined with efficient parallel processing, makes Transformer Models a strong choice for AI applications that demand high precision and reliability.

Key Insight: Transformer Models have reshaped AI by handling long-range dependencies well and training efficiently on parallel hardware. Their versatility across application domains makes them a valuable asset in the AI toolkit.

Applications of Transformer Models in Generative AI

Transformer Models have become central to generative AI, with applications across many fields. Their ability to process and generate data efficiently has opened new avenues for innovation and creativity.

Natural Language Processing

In the field of Natural Language Processing (NLP), Transformer Models have revolutionized how you interact with language-based tasks. They provide robust solutions for complex challenges, enhancing both accuracy and efficiency.

Language Translation

Language translation has seen significant advancements thanks to Transformer Models. These models excel at understanding and translating languages by capturing the nuances and context of the source text. You can now translate documents and conversations with remarkable precision. This capability has transformed global communication, making it easier for you to connect with people across different languages.

Various Experts: "The introduction of the original Transformer Model in 2017 marked a pivotal moment in NLP history. This model laid the foundation for subsequent advancements in machine translation."

Sentiment Analysis

Sentiment analysis is another area where Transformer Models shine. They help you understand the emotions and opinions expressed in text data. By analyzing customer reviews, social media posts, and other textual content, these models provide valuable insights into public sentiment. This information can guide your business strategies and improve customer satisfaction.

Various Experts: "Transformers are proving their effectiveness across the NLP spectrum, from text summarization and sentiment analysis to question answering."
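A common way to turn a transformer into a sentiment classifier is to add a small classification head on top of the token representations it produces. In the sketch below, the token vectors, weights, and bias are hypothetical stand-ins for an encoder's output and for parameters that would normally be learned during fine-tuning. Mean pooling is one choice among several; BERT-style models often use the vector of a special [CLS] token instead.

```python
import math

def classify_sentiment(token_vectors, weights, bias, labels=("negative", "positive")):
    """Mean-pool token vectors, apply a linear layer, and softmax over labels."""
    d = len(token_vectors[0])
    pooled = [sum(v[j] for v in token_vectors) / len(token_vectors) for j in range(d)]
    logits = [sum(w * x for w, x in zip(row, pooled)) + b
              for row, b in zip(weights, bias)]
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    z = sum(exps)
    return {label: e / z for label, e in zip(labels, exps)}

# Hypothetical encoder outputs for a two-token review and hypothetical head weights.
probs = classify_sentiment([[0.2, 0.9], [0.4, 0.7]],
                           weights=[[1.0, -1.0], [-1.0, 1.0]],
                           bias=[0.0, 0.0])
print(probs)
```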

Creative Content Generation

Beyond language processing, Transformer Models have made significant strides in creative content generation. They empower you to explore new forms of artistic expression and storytelling.

Art and Music

In the world of art and music, Transformer Models enable you to create innovative compositions. By learning patterns from existing artworks and musical pieces, these models generate new creations that reflect the style and essence of the originals. This technology allows artists and musicians to push the boundaries of creativity, producing unique and captivating works.

Storytelling

Storytelling has also benefited from the capabilities of Transformer Models. You can use these models to generate engaging narratives and scripts. They assist in crafting stories that resonate with audiences, whether for entertainment, education, or marketing purposes. By leveraging the power of Transformer Models, you can enhance your storytelling skills and captivate your audience with compelling content.

Various Experts: "As knowledge workers, we predominantly engage with generative AI during the inference phase, whether it’s for making predictions, generating text, creating images, or other tasks."

Transformer Models continue to redefine the possibilities in generative AI, offering you tools to innovate and excel in various domains. Their applications in natural language processing and creative content generation demonstrate their versatility and transformative impact.

Future Implications and Developments

As you explore the future of Transformer Models, you will find exciting advancements and important ethical considerations. These models continue to evolve, offering new possibilities and challenges in the field of artificial intelligence.

Advancements in Transformer Models

Enhanced Architectures

You can expect significant enhancements in the architectures of Transformer Models. Researchers are continually working to make these models more efficient and powerful, for example by refining the self-attention mechanism so that its cost grows more slowly with sequence length, and by optimizing the encoder-decoder structure. Such enhancements would let Transformer Models process longer inputs more efficiently and improve accuracy in tasks like natural language processing and image generation.
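One refinement in this direction restricts each token to a local window of recent positions instead of the entire sequence, so the number of attended pairs grows linearly rather than quadratically with length. The sketch below builds such a mask; the window size and function names are illustrative assumptions, and it shows only the masking pattern, not a full efficient attention implementation.

```python
def attention_mask(n, window=None, causal=True):
    """Return an n x n boolean mask: mask[i][j] is True if position i may attend to j."""
    mask = []
    for i in range(n):
        row = []
        for j in range(n):
            allowed = (j <= i) if causal else True  # causal: no looking ahead
            if window is not None:
                allowed = allowed and abs(i - j) < window  # local window only
            row.append(allowed)
        mask.append(row)
    return mask

def allowed_pairs(mask):
    # Number of query-key pairs the attention layer must score.
    return sum(sum(row) for row in mask)

print(allowed_pairs(attention_mask(100)))            # → 5050
print(allowed_pairs(attention_mask(100, window=8)))  # → 772
```

Doubling the sequence length roughly quadruples the full causal count but only doubles the windowed one.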

Scientific Research Findings: Transformer models have redefined the landscape by introducing novel approaches that significantly enhance natural language understanding. Improving model interpretability, addressing scalability concerns, and developing resource-efficient training methods remain crucial areas for further research.

Integration with Other Technologies

The integration of Transformer Models with other technologies is another promising area of development. Combining these models with advances in machine learning and data processing can unlock new capabilities and applications. For example, running Transformer Models in the cloud, or in compact form on edge devices, can enable real-time processing and analysis of large datasets. This integration could open up new possibilities in fields like healthcare, finance, and entertainment, where Transformer Models can drive innovation and improve outcomes.

Ethical Considerations

As you embrace the advancements in Transformer Models, it is crucial to address the ethical considerations that come with their use. Ensuring fairness and responsibility in AI applications is essential to avoid potential biases and misuse.

Bias and Fairness

Bias and fairness are critical issues that you must consider when using Transformer Models. These models can inadvertently learn and perpetuate biases present in the training data. To mitigate this risk, you need to implement strategies that promote fairness and transparency. By carefully selecting and curating training datasets, you can reduce the likelihood of biased outputs. Additionally, ongoing monitoring and evaluation of model performance can help identify and address any biases that may arise.
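Ongoing monitoring often starts with simple group-level metrics. The sketch below computes one of them, the demographic parity gap: the difference in the rate of positive predictions between groups. The predictions and group labels are made-up illustrative data. Demographic parity is only one of several fairness criteria, and these criteria can conflict; what gap counts as acceptable is a policy decision rather than something the code can settle.

```python
from collections import defaultdict

def positive_rate_by_group(predictions, groups):
    """Fraction of positive (1) predictions for each group label."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, g in zip(predictions, groups):
        totals[g] += 1
        positives[g] += pred
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical binary model outputs and the group each example belongs to.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(positive_rate_by_group(preds, groups))  # → {'a': 0.75, 'b': 0.25}
print(demographic_parity_gap(preds, groups))  # → 0.5
```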

Responsible AI Use

Responsible AI use is another important consideration when working with Transformer Models. You must ensure that these models are used ethically and responsibly, with a focus on benefiting society. This involves setting clear guidelines and standards for AI development and deployment. By fostering a culture of responsibility and accountability, you can ensure that Transformer Models are used to enhance human capabilities and improve quality of life.

By understanding the future implications and developments of Transformer Models, you can harness their potential while addressing the ethical challenges they present. This balanced approach will enable you to leverage the transformative power of these models responsibly and effectively.

Transformer models have reshaped the landscape of artificial intelligence. Their architecture, particularly the self-attention mechanism, has revolutionized how machines understand and generate human language. You see their impact across various applications, from text summarization to sentiment analysis. As these models continue to evolve, they promise even greater advancements. The future holds exciting possibilities for deeper integration of AI into everyday life. By embracing these innovations, you can anticipate a world where AI becomes an even more integral part of solving complex challenges and enhancing human capabilities.
