The Impact of Biased AI on Society

Biased AI refers to artificial intelligence systems that produce unfair outcomes because of prejudiced data or flawed algorithms. Such bias can lead to discrimination that limits individuals' participation in the economy and society. It can also perpetuate existing societal biases, potentially reversing progress in equal rights. Employment, law enforcement, and healthcare are among the areas where the negative effects are most often felt. A survey revealed that 81% of business leaders advocate for government regulation to address and prevent AI bias, highlighting the urgency of this issue.

Understanding Biased AI

Causes of Bias in AI

Data Collection and Representation

Biased AI often originates from the data it consumes. AI systems rely on vast datasets to learn and make decisions. However, these datasets frequently contain societal biases. For instance, if historical data reflects racial or gender disparities, AI models trained on such data may perpetuate these biases. This can lead to unfair treatment in areas like hiring, lending, and education. The data collection process itself can introduce bias. If the data lacks diversity or fails to represent all groups equally, the AI system may produce skewed outcomes. Researchers emphasize the importance of inclusive data collection to mitigate these issues.
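One way to make the representation concern concrete is to compare each group's share of a dataset with its share of a reference population. The following is a minimal sketch, not a standard tool: the function name `representation_gaps`, the `group` field, and the reference shares are all hypothetical and chosen only for illustration.

```python
from collections import Counter


def representation_gaps(records, group_key, reference_shares):
    """Return (dataset share - reference share) for each reference group.

    A negative value means the group is under-represented in the data
    relative to the assumed reference population.
    """
    counts = Counter(record[group_key] for record in records)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("records must not be empty")
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }


# Hypothetical toy data: 8 records from group "A" and 2 from group "B".
records = [{"group": "A"}] * 8 + [{"group": "B"}] * 2
# Assumed reference shares, for illustration only.
reference = {"A": 0.5, "B": 0.5}

gaps = representation_gaps(records, "group", reference)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

In this toy example group "B" falls well short of its assumed share, which is the kind of gap that would prompt collecting more data before training.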

Algorithmic Design and Development

The design and development of algorithms also play a crucial role in biased AI. Developers may unintentionally embed their own biases into algorithms. This can occur through the selection of features or the weighting of certain variables over others. Additionally, the lack of diverse perspectives in development teams can exacerbate this problem. Algorithms designed without considering potential biases can lead to systematic errors. These errors can have significant consequences, such as denying individuals access to essential services or opportunities. Ensuring diverse and inclusive development teams can help address these challenges.
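Feature selection can introduce bias even when a protected attribute is left out, because another feature may act as a proxy for it. The sketch below is one simple, assumed way to check for this: it measures how much better a protected attribute can be guessed once a feature's value is known. The names `proxy_strength`, `baseline_strength`, `zip_code`, and `group` are hypothetical.

```python
from collections import Counter, defaultdict


def baseline_strength(rows, protected):
    """Accuracy of always guessing the most common protected group."""
    if not rows:
        raise ValueError("rows must not be empty")
    counts = Counter(row[protected] for row in rows)
    return counts.most_common(1)[0][1] / len(rows)


def proxy_strength(rows, feature, protected):
    """Accuracy of guessing the protected group from the feature value alone.

    For each feature value, the guess is the most common group seen
    with that value.
    """
    if not rows:
        raise ValueError("rows must not be empty")
    by_value = defaultdict(Counter)
    for row in rows:
        by_value[row[feature]][row[protected]] += 1
    correct = sum(c.most_common(1)[0][1] for c in by_value.values())
    return correct / len(rows)


# Hypothetical toy data: two zip codes with very different group mixes.
rows = (
    [{"zip_code": "111", "group": "A"}] * 9
    + [{"zip_code": "111", "group": "B"}] * 1
    + [{"zip_code": "222", "group": "A"}] * 2
    + [{"zip_code": "222", "group": "B"}] * 8
)

baseline = baseline_strength(rows, "group")
strength = proxy_strength(rows, "zip_code", "group")
print(f"baseline={baseline:.2f}, with zip_code={strength:.2f}")
```

A large jump from the baseline suggests the feature carries information about group membership, so a model using it may reproduce group-level disparities indirectly.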

Types of Bias in AI

Systemic Bias

Systemic bias in AI refers to the ingrained prejudices that reflect societal and historical inequalities. These biases often manifest in AI systems trained on data that mirrors existing disparities. For example, AI used in criminal justice may disproportionately target certain racial groups due to biased historical data. This type of bias can reinforce stereotypes and perpetuate discrimination on a large scale. Addressing systemic bias requires a comprehensive approach, including revising training datasets and implementing ethical guidelines in AI development.

Cognitive Bias

Cognitive bias in AI arises from the human-like decision-making processes embedded in algorithms. AI systems can mimic human cognitive biases, leading to flawed judgments. For instance, an AI model might favor candidates from prestigious universities due to a perceived correlation with success, ignoring other valuable attributes. Cognitive biases can result in unfair outcomes, particularly in recruitment and resource allocation. To combat this, developers must critically assess the decision-making criteria used in AI systems and strive for objectivity.

Areas Affected by Biased AI

Employment and Hiring Practices

Discrimination in Recruitment

AI systems in recruitment can perpetuate discrimination. They often rely on historical data that reflects societal biases. For instance, if past hiring favored certain demographics, AI may replicate this pattern. This results in unfair treatment of candidates from diverse backgrounds. Case studies have shown companies facing backlash due to biased AI hiring practices. These examples highlight the need for transparency and fairness in AI-driven recruitment processes.

Impact on Workplace Diversity

Biased AI can negatively impact workplace diversity. When AI systems favor certain groups, they limit opportunities for others. This reduces the variety of perspectives and experiences within a company. A lack of diversity can stifle innovation and creativity. Organizations must ensure their AI tools promote inclusivity. Regular audits and diverse data sets can help achieve this goal.

Law Enforcement and Criminal Justice

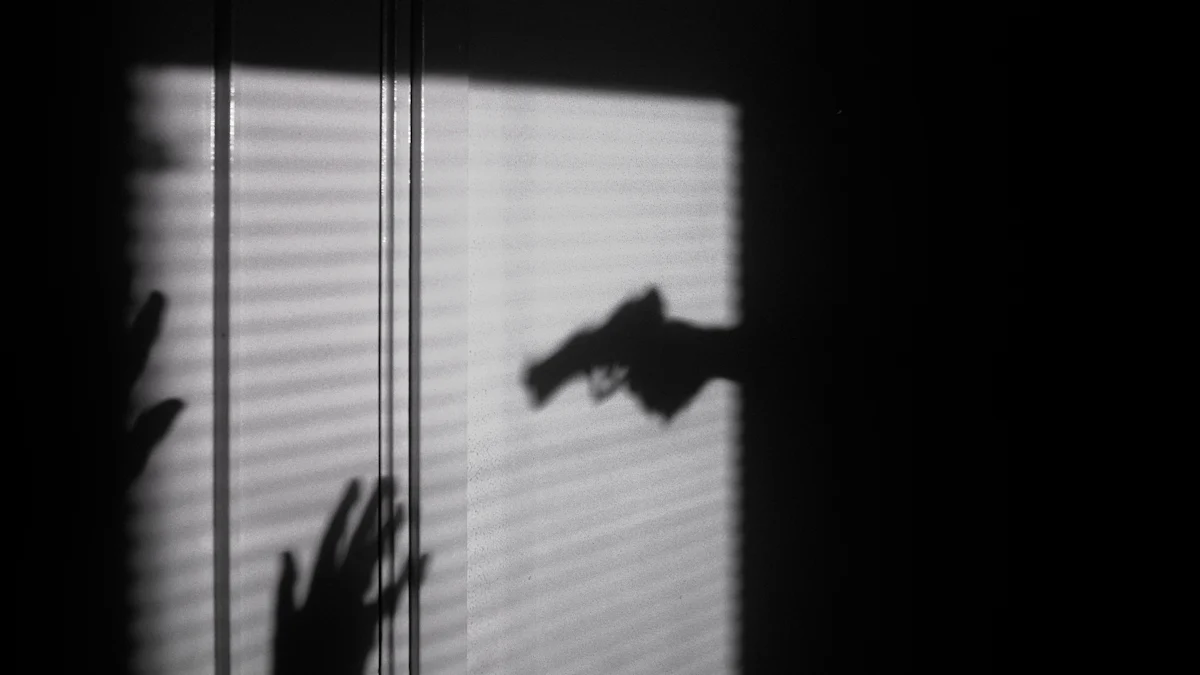

Racial Profiling and Surveillance

AI in law enforcement can lead to racial profiling. Algorithms trained on biased data may disproportionately target minority communities. This raises ethical concerns about surveillance technology. A common argument holds that innocent individuals have nothing to fear from such systems. AI bias challenges this notion, because a biased system can single out innocent people, which highlights the need for accountability and oversight in law enforcement applications.

Sentencing and Parole Decisions

AI tools influence sentencing and parole decisions. However, biased algorithms can result in unfair outcomes. They may recommend harsher sentences for certain racial or ethnic groups. This perpetuates existing inequalities in the justice system. Ensuring fairness requires transparent algorithms and regular evaluations. Stakeholders must prioritize ethical considerations in AI deployment.
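One evaluation that such regular reviews might include is a comparison of false positive rates across groups, that is, how often people who did not reoffend were nevertheless labeled high risk. The sketch below assumes a hypothetical record format of `(group, predicted_high_risk, reoffended)` and uses made-up data; it is not drawn from any real tool.

```python
def false_positive_rates(records):
    """Return the false positive rate per group.

    records: iterable of (group, predicted_high_risk, reoffended) tuples.
    Only people who did not reoffend count toward the rate.
    """
    negatives = {}
    false_positives = {}
    for group, predicted_high_risk, reoffended in records:
        if reoffended:
            continue
        negatives[group] = negatives.get(group, 0) + 1
        if predicted_high_risk:
            false_positives[group] = false_positives.get(group, 0) + 1
    return {g: false_positives.get(g, 0) / n for g, n in negatives.items()}


# Hypothetical toy data for two groups.
records = (
    [("A", True, False)] * 2
    + [("A", False, False)] * 8
    + [("B", True, False)] * 5
    + [("B", False, False)] * 5
    + [("A", True, True)] * 3
    + [("B", True, True)] * 3
)

rates = false_positive_rates(records)
for group, rate in sorted(rates.items()):
    print(f"group {group}: false positive rate {rate:.2f}")
```

A large gap between groups, as in this toy data, would mean one group bears more wrongful high-risk labels even if overall accuracy looks similar.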

Healthcare and Medical Decision-Making

Access to Treatment and Services

Biased AI affects access to healthcare services. Algorithms may prioritize certain patients over others based on flawed criteria. This can lead to disparities in treatment availability. Patients from marginalized communities often face barriers to care. Healthcare providers must address these biases to ensure equitable access for all individuals.

Health Disparities and Outcomes

AI bias contributes to health disparities. It can influence diagnostic and treatment decisions, leading to unequal outcomes. For example, AI systems may overlook symptoms prevalent in specific populations. This results in inadequate care and worsens health disparities. To combat this, healthcare organizations should use diverse data and involve experts in AI development.

Consequences of Biased AI on Society

Social Inequality and Discrimination

Reinforcement of Existing Prejudices

Biased AI systems can reinforce existing societal prejudices. These systems often rely on historical data that contains embedded biases. For example, AI algorithms trained on biased datasets may perpetuate stereotypes related to race, gender, or economic status. This can lead to unfair treatment in areas such as hiring, lending, and criminal justice. By amplifying these biases, AI systems can hinder progress toward equality and fairness.

"AI can perpetuate societal biases, leading to unfair treatment based on factors like race, gender, or economic status."

Marginalization of Vulnerable Groups

AI bias can marginalize vulnerable groups. When AI systems make decisions based on biased data, they may exclude or disadvantage certain populations. This can result in limited access to opportunities and resources for marginalized communities. For instance, biased algorithms in healthcare may prioritize certain patients over others, exacerbating health disparities. Addressing these biases is crucial to ensure that AI systems do not further marginalize already vulnerable groups.

Economic Implications

Job Displacement and Automation

The rise of AI technology has led to concerns about job displacement. Biased AI systems can exacerbate this issue by disproportionately affecting certain demographics. For example, automation may replace jobs traditionally held by minority groups, leading to increased unemployment and economic instability. It is essential to consider the impact of AI bias on employment and develop strategies to mitigate its effects.

Inequitable Access to Opportunities

Biased AI can create inequitable access to opportunities. Algorithms used in recruitment or education may favor certain groups, limiting opportunities for others. This can result in a lack of diversity in workplaces and educational institutions. Ensuring equitable access requires transparency in AI decision-making processes and regular audits to identify and address biases.

"Biased algorithms can promote discrimination or other forms of inaccurate decision-making that can cause systematic and potentially harmful errors."

Strategies to Mitigate Bias in AI

Improving Data Quality and Diversity

Inclusive Data Collection Practices

AI systems rely heavily on data. Ensuring this data is diverse and representative is crucial. Developers must adopt inclusive data collection practices. They should gather data from a wide range of sources and demographics. This approach helps capture the full spectrum of human experiences and reduces the risk of bias. By doing so, AI systems can make fairer decisions. "The best way to reduce bias in AI models is for both the people training the artificial intelligence and the people testing it to be mindful of any potential bias." This proactive approach ensures that AI systems do not perpetuate existing societal biases.

Regular Audits and Monitoring

Regular audits play a vital role in maintaining AI fairness. These audits involve examining AI systems for biases and inaccuracies. Developers should conduct these audits frequently. Monitoring helps identify potential issues early. By addressing these issues promptly, developers can prevent biased outcomes. Regular audits ensure that AI systems remain transparent and accountable. This practice builds trust in AI technologies and promotes ethical use.
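As an illustration of what one audit step could look like, the sketch below computes the selection rate for each group and the ratio of the lowest rate to the highest. The 0.8 threshold follows the "four-fifths" rule of thumb used in US employment selection guidance; applying it here is an assumption for illustration, and the function names and data are hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs; returns rate per group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest selection rate."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no disparity to measure
    return min(rates.values()) / highest


# Hypothetical toy decisions: group A selected 6 of 10, group B 3 of 10.
decisions = (
    [("A", True)] * 6
    + [("A", False)] * 4
    + [("B", True)] * 3
    + [("B", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # assumed threshold (four-fifths rule of thumb)
print(f"rates={rates}, ratio={ratio:.2f}, flagged={flagged}")
```

Running a check like this on a schedule, and on each model update, is one way to catch drift toward biased outcomes early.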

Ethical AI Development and Governance

Transparent Algorithmic Processes

Transparency is key to ethical AI development. Developers must ensure that algorithmic processes are clear and understandable. This transparency allows stakeholders to detect and address biases. "Transparency ensures fair and ethical AI systems by detecting and addressing biases." When algorithms are transparent, users can trust the decisions made by AI systems. This trust is essential for widespread AI adoption. Transparent processes also encourage collaboration among developers, researchers, and policymakers.
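A small example of a transparent process is a linear scoring model that reports how much each input contributed to the final score. This is only a sketch under assumed names and weights (`years_experience`, `skills_match`, `prestige_university` are hypothetical); real systems are usually more complex and need dedicated explanation methods.

```python
def explain_score(weights, features):
    """Return the total score and each feature's contribution to it."""
    contributions = {
        name: weights[name] * features[name] for name in weights
    }
    return sum(contributions.values()), contributions


# Hypothetical weights for a candidate-ranking model.
weights = {
    "years_experience": 0.5,
    "skills_match": 2.0,
    "prestige_university": 1.5,
}
# Hypothetical candidate.
candidate = {
    "years_experience": 4,
    "skills_match": 0.75,
    "prestige_university": 1,
}

score, contributions = explain_score(weights, candidate)
for name, value in contributions.items():
    print(f"{name}: {value:.2f}")
print(f"total: {score:.2f}")
```

Exposing the contributions makes it visible when a questionable criterion, such as university prestige, carries as much weight as demonstrated skills, so stakeholders can challenge it.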

Accountability and Regulation

Accountability is crucial in AI governance. Developers and organizations must take responsibility for their AI systems. They should adopt internal policies, and comply with regulations, that promote ethical AI use. These rules should focus on fairness, transparency, and accountability. By doing so, they can prevent discrimination and ensure equitable outcomes. Business owners and managers must take proactive steps to ensure responsible AI development and use. This approach fosters a culture of ethical AI practices and protects individuals from biased treatment.

Biased AI significantly impacts society by perpetuating discrimination and reinforcing existing inequalities. Addressing this bias is crucial to ensure fairness and equity in AI applications. Stakeholders must take proactive steps to mitigate these biases. They should prioritize inclusive data collection, transparent algorithmic processes, and regular audits. By doing so, they can reduce the risks associated with AI and unlock its full potential. Companies are motivated to tackle AI bias not only to achieve fairness but also to ensure better results. It is imperative for all involved to commit to ethical AI development and governance.
