The Role of Attention Mechanisms in Transformer Models

Neural networks have transformed the landscape of artificial intelligence, evolving from simple perceptrons to complex architectures capable of remarkable feats. Among these advancements, attention mechanisms have emerged as a pivotal innovation, enabling models to focus on relevant parts of input data. This capability has significantly enhanced the performance of AI systems, particularly in natural language processing and computer vision. Transformer models, which are built on these mechanisms, have become foundational in AI, powering state-of-the-art applications and inspiring new research directions.

Understanding Attention Mechanisms

Definition and Purpose

Attention mechanisms have transformed the capabilities of deep learning models. They allow models to focus on the most relevant parts of input data, whether it be text or images. This focus enhances the model's ability to understand and process information effectively. In essence, attention mechanisms work by assigning different weights to different parts of the input data. These weights determine the importance of each part in the context of the task at hand.

How attention mechanisms work

Attention mechanisms operate by comparing elements within the input data. They calculate a score for each element, which reflects its relevance. This score influences how much attention the model pays to that element when making predictions. For instance, in language models, attention mechanisms help identify which words in a sentence are most important for understanding the meaning.
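As a rough illustration of the scoring idea above, the sketch below turns a handful of relevance scores into attention weights with a softmax. The words and score values are invented for the example; in a real model the scores are computed from learned representations rather than written by hand.

```python
import math

def softmax(scores):
    """Turn raw relevance scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical relevance scores for the words of "the cat sat".
words = ["the", "cat", "sat"]
scores = [0.1, 2.0, 1.0]
weights = softmax(scores)

# A higher score yields a larger share of the model's attention.
for word, weight in zip(words, weights):
    print(f"{word}: {weight:.2f}")
```

The weights always sum to one, so raising the score of one element necessarily lowers the share given to the others.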

Benefits of using attention mechanisms

Attention mechanisms offer several benefits. They improve the model's ability to capture dependencies between distant elements in the input data. This capability is crucial for tasks like language translation, where related words can be far apart in a long sentence. Additionally, because the attention weights for every position can be computed at the same time, attention-based models make effective use of parallel hardware, leading to more efficient processing.

Types of Attention Mechanisms

Attention mechanisms come in various forms, each serving a unique purpose in model architecture. The two primary types are self-attention and cross-attention.

Self-attention

Self-attention enables a model to weigh the importance of different tokens within the same sequence. In transformers it is typically computed with scaled dot-product attention, which is described later in this article. This mechanism allows the model to consider the entire sequence when processing each token, capturing dependencies effectively. Self-attention has been pivotal in the success of transformer models, such as BERT and GPT, which excel in understanding and generating human-like text.

Cross-attention

Cross-attention, on the other hand, involves interactions between different sequences. It is commonly used in tasks where the model needs to align information from two separate inputs, such as in machine translation. In this context, cross-attention helps the model focus on relevant parts of the source sentence while generating the target sentence.
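The difference between the two types can be sketched in a few lines: the same attention routine is used in both cases, and only the origin of the queries, keys, and values changes. The code below is a minimal sketch under simplifying assumptions: the vectors are made up, the helper name `attend` is hypothetical, and the learned projections used in real transformers are omitted.

```python
import math

def softmax(row):
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attend(queries, keys, values):
    """Scaled dot-product attention over plain Python lists of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors for this query.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy vectors (made up): 3 source tokens and 2 target tokens, dimension 2.
source = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
target = [[2.0, 0.0], [0.0, 2.0]]

# Self-attention: queries, keys, and values all come from one sequence.
self_out, self_weights = attend(source, source, source)

# Cross-attention: queries come from the target, keys and values from the source.
cross_out, cross_weights = attend(target, source, source)
```

In the self-attention call the weight matrix is square (3 by 3), while in the cross-attention call it has one row per target token and one column per source token (2 by 3).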

Scientific Research Findings: According to a study published on ScienceDirect, attention mechanisms have revolutionized modern deep learning models, leading to powerful large language models like GPT. An article on Medium likewise highlights the transformative application of attention mechanisms in NLP, enabling models like BERT and T5 to understand and generate human-like text.

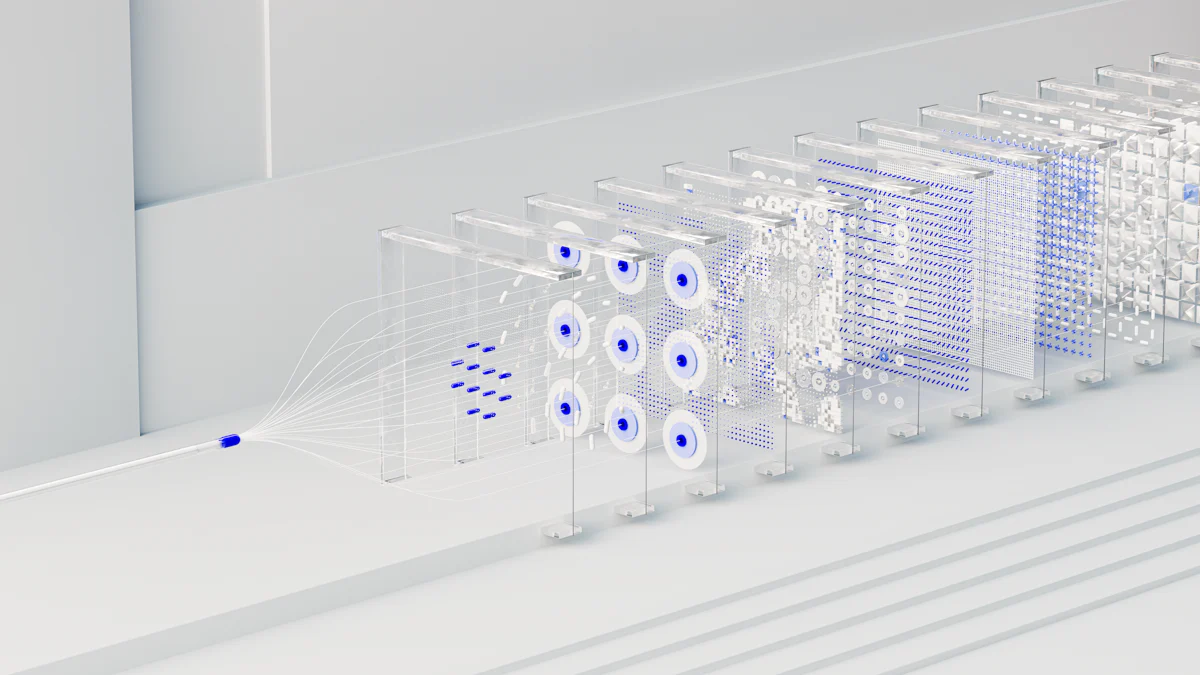

The Architecture of Transformer Models

Key Components

Transformer models have revolutionized AI by introducing a novel architecture that relies heavily on attention mechanisms. This architecture consists of several key components that work together to process and generate data efficiently.

Encoder and Decoder

The encoder and decoder form the backbone of transformer models. The encoder processes the input data, transforming it into a set of continuous representations. The decoder then takes these representations and generates the output data. Each encoder and decoder layer contains multiple attention heads, which allow the model to focus on different parts of the input sequence simultaneously. Because attention relates all positions of a sequence in a single step, a transformer can process an entire input in parallel, whereas traditional models like RNNs must process tokens one at a time.

Positional Encoding

Transformers lack the inherent sequential nature of RNNs, so they require a method to understand the order of input data. Positional encoding addresses this need by adding unique positional information to each input token. This encoding helps the model differentiate between tokens based on their position in the sequence. By incorporating positional encoding, transformers can capture the order of words in a sentence, which is crucial for understanding context and meaning.
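One common way to build such an encoding is the sinusoidal scheme from the original transformer paper, sketched below. This is an assumption for illustration: the text above does not say which scheme is meant, and many models use learned position embeddings instead. The function names and embedding values are hypothetical.

```python
import math

def sinusoidal_encoding(seq_len, d_model):
    """Return a seq_len x d_model table of sinusoidal position vectors."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Each pair of dimensions (2k, 2k+1) shares one frequency.
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

def add_positions(embeddings):
    """Add the position vector to each token embedding, element-wise."""
    table = sinusoidal_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, pos)] for emb, pos in zip(embeddings, table)]

# Two identical token embeddings (made-up values) at positions 0 and 1.
embeddings = [[0.5, 0.5, 0.5, 0.5], [0.5, 0.5, 0.5, 0.5]]
encoded = add_positions(embeddings)
```

Although the two input embeddings are identical, the encoded vectors differ, which is what lets the model tell the first occurrence of a token from the second.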

Role of Attention in Transformers

Attention mechanisms play a pivotal role in the success of transformer models. They enable the model to weigh the importance of different parts of the input data, leading to improved performance and efficiency.

Enhancing Model Performance

Attention mechanisms enhance model performance by allowing transformers to focus on the most relevant parts of the input data. This focus leads to more accurate predictions and better handling of complex tasks. According to an article in Forbes, the introduction of transformers marked a significant shift in AI, as researchers moved away from RNNs and relied entirely on attention for language modeling. This shift has resulted in state-of-the-art performance in various applications, including natural language processing and computer vision.

Capturing Long-Range Dependencies

One of the most significant advantages of attention mechanisms is their ability to capture long-range dependencies within data. Traditional models often struggle with this task, especially when dealing with lengthy sequences. However, transformers excel in this area due to their attention-based architecture. As highlighted in a study on the importance of attention modules, transformers effectively learn long-distance relationships, similar to how humans process natural text. This capability allows transformers to understand context and meaning across entire sequences, making them highly effective for tasks like language translation and text summarization.

Self-Attention in Detail

Mechanism and Calculation

Self-attention, a core component of transformer models, allows the model to weigh the importance of different tokens within a sequence. This mechanism enables the model to focus on relevant parts of the input, enhancing its ability to understand complex relationships.

Query, Key, and Value vectors

In self-attention, each token in the input sequence is transformed into three vectors: Query, Key, and Value. These vectors play distinct roles:

Query: Represents the token for which attention is being calculated.

Key: Represents the tokens that the query token will attend to.

Value: Represents the actual information that will be used in the output.

The model calculates attention scores by comparing the query vector with all key vectors. This comparison determines how much focus the model should place on each token.
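The three roles can be made concrete with a small sketch. The embeddings and projection matrices below are invented for illustration (in a trained model the matrices are learned), and `project` is a hypothetical helper, not part of any library.

```python
def project(vector, matrix):
    """Multiply a row vector by a matrix given as a list of rows."""
    return [sum(v * matrix[i][j] for i, v in enumerate(vector))
            for j in range(len(matrix[0]))]

# Hypothetical 2-dimensional embeddings for three tokens.
tokens = {"the": [1.0, 0.0], "cat": [0.0, 1.0], "sat": [1.0, 1.0]}

# Made-up projection matrices; in a trained model these are learned.
W_q = [[1.0, 0.0], [0.0, 1.0]]
W_k = [[0.0, 1.0], [1.0, 0.0]]
W_v = [[2.0, 0.0], [0.0, 2.0]]

# Every token gets its own query, key, and value vector.
queries = {t: project(x, W_q) for t, x in tokens.items()}
keys = {t: project(x, W_k) for t, x in tokens.items()}
values = {t: project(x, W_v) for t, x in tokens.items()}

# Raw score of "cat" (as the query) against every token's key.
cat_scores = {t: sum(q * k for q, k in zip(queries["cat"], keys[t])) for t in tokens}
print(cat_scores)
```

The value vectors play no part in the scoring step; they are only combined afterwards, using weights derived from these scores.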

Scaled Dot-Product Attention

The scaled dot-product attention mechanism computes attention scores using the dot product of the query and key vectors. The model scales these scores by dividing them by the square root of the dimension of the key vectors. Without this scaling, dot products tend to grow with the vector dimension, pushing the softmax toward near one-hot outputs with very small gradients; the division helps keep training stable. The model then applies a softmax function to convert the scaled scores into weights that are positive and sum to one, which determine the contribution of each value vector to the final output. This process allows the model to focus on the most relevant tokens, improving its ability to capture dependencies within the sequence.
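In compact form, the computation is usually written as softmax(Q K^T / sqrt(d_k)) V. The sketch below applies it to a single query and compares the weights with and without the scaling step. The vectors are made up, and the dimension of 64 is chosen only to make the effect visible.

```python
import math

def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys, scale=True):
    """Softmax over query-key dot products, optionally divided by sqrt(d_k)."""
    d_k = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    if scale:
        scores = [s / math.sqrt(d_k) for s in scores]
    return softmax(scores)

d_k = 64
query = [1.0] * d_k
keys = [[1.0] * d_k, [0.5] * d_k]   # made-up key vectors
values = [[1.0, 0.0], [0.0, 1.0]]   # made-up value vectors

unscaled = attention_weights(query, keys, scale=False)
scaled = attention_weights(query, keys, scale=True)

# Output for this query: the weighted sum of the value vectors.
output = [sum(w * v[j] for w, v in zip(scaled, values)) for j in range(2)]
```

Without scaling, the raw scores are 64 and 32 and the softmax gives the second key essentially zero weight. With scaling, the scores become 8 and 4, and the second key keeps a small but non-negligible share.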

Multi-Head Attention

Multi-head attention extends the concept of self-attention by allowing the model to focus on different parts of the sequence simultaneously. This approach enhances the model's ability to capture diverse relationships within the data.

Concept and advantages

Multi-head attention involves multiple attention heads, each operating independently. Each head processes the input sequence using its own learned projections to produce its query, key, and value vectors. This parallel processing enables the model to capture various aspects of the data, such as syntactic and semantic relationships. By combining the outputs of all attention heads, the model gains a richer understanding of the input sequence.

Scientific Research Findings: According to a study on the Efficiency of Self-Attention in Transformers, multi-head attention allows models to focus on relevant information and make better predictions, especially for long text sequences.

Implementation in transformers

In transformer models, multi-head attention is implemented by concatenating the outputs of all attention heads and passing them through a linear transformation. This process integrates the diverse information captured by each head, resulting in a comprehensive representation of the input sequence. Multi-head attention has proven essential in the success of transformer models, enabling them to achieve state-of-the-art performance in tasks like language translation and text summarization.
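The concatenate-then-project step can be sketched as follows. To keep the example short, each head simply attends over its own slice of the token vectors, which stands in for the per-head learned projections of a real model. That shortcut, the function names, and the input values are all assumptions made for illustration.

```python
import math

def softmax(row):
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attend(Q, K, V):
    """Scaled dot-product attention; returns one output vector per query."""
    d_k = len(K[0])
    outputs = []
    for q in Q:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K])
        outputs.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return outputs

def multi_head_attention(X, num_heads, W_o):
    d_model = len(X[0])
    d_head = d_model // num_heads
    head_outputs = []
    for h in range(num_heads):
        # Simplification: each head sees its own slice of every token vector.
        part = [x[h * d_head:(h + 1) * d_head] for x in X]
        head_outputs.append(attend(part, part, part))
    # Concatenate the head outputs token by token.
    concat = [sum((head[t] for head in head_outputs), []) for t in range(len(X))]
    # The final linear transformation mixes information across heads.
    return [[sum(c[i] * W_o[i][j] for i in range(d_model)) for j in range(len(W_o[0]))]
            for c in concat]

# Made-up input: 2 tokens with 4 dimensions, split across 2 heads.
X = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0]]
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
out = multi_head_attention(X, num_heads=2, W_o=identity)
```

The identity matrix is used for the output projection only so that the concatenated head outputs remain visible in `out`; a trained model would use a learned matrix here.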

Applications of Transformer Models

Natural Language Processing

Transformer models have revolutionized the field of Natural Language Processing (NLP). They excel in tasks that require understanding and generating human language.

Machine Translation

In machine translation, transformers have set new benchmarks. They translate text from one language to another with remarkable accuracy. By leveraging attention mechanisms, these models focus on relevant parts of the source sentence. This focus helps translations maintain context and meaning. For example, Google Translate, whose original Neural Machine Translation system (GNMT) was built on recurrent networks, has since adopted transformer-based components to provide high-quality translations across numerous languages.

Text Summarization

Text summarization benefits significantly from transformer models. They condense lengthy documents into concise summaries while preserving essential information. The attention mechanism allows the model to identify key points in the text. This capability makes transformers well suited to summarizing news articles, research papers, and other extensive content. Encoder-decoder models like T5 have demonstrated strong performance in this area, and BERT-based models have been adapted for extractive summarization.

Beyond NLP

Transformers extend their capabilities beyond NLP, impacting various domains with their versatile architecture.

Image Processing

In image processing, transformers have shown promise in tasks like image classification and object detection. They analyze visual data by focusing on different parts of an image, similar to how they process text. This approach enhances the model's ability to recognize patterns and features. Vision Transformers (ViTs) have emerged as a powerful tool in this field, offering competitive performance compared to traditional convolutional neural networks.

Speech Recognition

Speech recognition has also seen advancements with transformer models. They convert spoken language into text by analyzing audio signals. The attention mechanism helps the model focus on important parts of the audio, improving transcription accuracy. This technology finds applications in virtual assistants, transcription services, and accessibility tools. As transformers continue to evolve, they hold the potential to further enhance speech recognition systems.

Emerging Trends: As transformers become integral to high-stakes applications like healthcare and finance, the need for explainability and interpretability grows. These models must ensure trust and accountability, especially when decisions impact critical areas. Researchers are actively exploring ways to make transformers more transparent and understandable, paving the way for their broader adoption in sensitive domains.

Advantages of Attention Mechanisms

Attention mechanisms have revolutionized the efficiency and accuracy of deep learning models. By allowing models to focus on relevant parts of input data, these mechanisms enhance both training speed and prediction quality.

Improved Efficiency

Attention mechanisms significantly boost the efficiency of neural networks. They enable models to allocate resources more effectively, leading to faster training times and reduced computational costs.

Faster training times

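Attention-based models such as transformers often train more quickly than recurrent models on the same hardware. The main reason is parallelism: attention relates all positions in a sequence at once, whereas a recurrent network must step through tokens one at a time. The selective weighting of inputs is often compared to human cognitive processes, where individuals concentrate on necessary details while ignoring distractions, although the model still computes a score for every element rather than skipping any. As a result, models can reach their learning objectives in less wall-clock time, accelerating the development of AI applications.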

Reduced computational cost

Attention mechanisms can also lower the cost of reaching a given level of performance. Because their computations parallelize well, the same hardware is used more effectively, which can make advanced AI technologies more accessible and sustainable. This benefit has limits: dense attention becomes expensive on long sequences, as discussed under Challenges and Limitations. Within those limits, organizations can deploy powerful models without incurring prohibitive costs.

Enhanced Accuracy

In addition to improving efficiency, attention mechanisms enhance the accuracy of AI models. They enable better handling of context and improve the quality of predictions.

Better handling of context

Models with attention mechanisms excel at understanding context. They weigh the importance of different input elements, capturing intricate relationships within data. This capability is crucial for tasks like language translation, where context determines meaning. By accurately interpreting context, models produce more reliable and coherent outputs.

Improved prediction quality

Attention mechanisms elevate prediction quality by refining the model's focus. They ensure that predictions are based on the most pertinent information, reducing errors and increasing reliability. This precision is vital in applications such as medical diagnosis and financial forecasting, where accurate predictions have significant implications.

Scientific Insight: Attention mechanisms mimic human cognitive strategies, selectively concentrating on necessary information. This approach enhances both the efficiency and accuracy of perceptual information processing, making it a cornerstone of modern AI advancements.

Challenges and Limitations

Computational Complexity

Transformer models, while powerful, demand significant computational resources. Their architecture, which relies heavily on attention mechanisms, requires substantial memory and processing power. This need for resources can limit their deployment in environments with constrained computational capabilities.

Resource requirements

The resource-intensive nature of transformers stems from their reliance on multiple layers of attention mechanisms. Each layer processes vast amounts of data simultaneously, which increases the demand for memory and computational power. High-performance hardware, such as GPUs or TPUs, often becomes necessary to train and deploy these models effectively. This requirement can pose challenges for smaller organizations or individuals with limited access to such resources.

Scalability issues

Scalability presents another challenge for transformer models. In standard self-attention, every token attends to every other token, so the computation and memory required grow quadratically with sequence length: doubling the input length roughly quadruples the cost of the attention step. This growth can lead to bottlenecks, especially when processing long sequences or large datasets. Researchers continue to explore methods to improve the scalability of transformers, aiming to make them more efficient and accessible for a wider range of applications.
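For standard (dense) self-attention, the number of query-key scores grows with the square of the sequence length. The short sketch below counts those scores for a few lengths. The function name is hypothetical, and the count ignores every other cost in the model.

```python
def attention_matrix_entries(seq_len, num_heads=1):
    """Number of query-key scores computed in one dense self-attention layer."""
    return num_heads * seq_len * seq_len

# Doubling the sequence length quadruples the number of scores.
for n in [512, 1024, 2048, 4096]:
    print(n, attention_matrix_entries(n))
```

This is why long inputs strain memory first: the score matrix for a single head already has more than sixteen million entries at a length of 4096 tokens.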

Interpretability

Understanding how transformer models make decisions remains a significant challenge. These models operate as 'black boxes,' with complex internal workings that are difficult to interpret. This lack of transparency can hinder trust and adoption in critical applications.

Understanding model decisions

Interpreting the decisions made by transformer models poses a challenge due to their intricate architecture. The attention mechanisms, while powerful, add layers of complexity that obscure the decision-making process. Researchers are actively developing tools and techniques to visualize and interpret attention patterns, aiming to shed light on how these models arrive at their conclusions.
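A very simple form of attention visualization is to print, for each token, which other token receives most of its attention. The sketch below does this in plain text. The weights are invented for the example, the function name is hypothetical, and real tools typically draw heatmaps from weights extracted from a trained model.

```python
def render_attention(tokens, weights, width=20):
    """Return one text line per query token showing where its attention goes."""
    lines = []
    for token, row in zip(tokens, weights):
        # Index of the token that receives the largest weight in this row.
        top = max(range(len(row)), key=row.__getitem__)
        bars = " ".join(f"{t}:{'#' * round(w * width)}" for t, w in zip(tokens, row))
        lines.append(f"{token:>6} -> {tokens[top]:<6} | {bars}")
    return lines

# Made-up attention weights; each row sums to 1.
tokens = ["the", "cat", "sat"]
weights = [
    [0.7, 0.2, 0.1],
    [0.1, 0.6, 0.3],
    [0.1, 0.5, 0.4],
]

lines = render_attention(tokens, weights)
for line in lines:
    print(line)
```

A display like this shows where the weights are concentrated, but a large weight alone does not prove that a token drove the model's decision, which is part of why interpretation remains difficult.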

Scientific Research Findings: According to an article in Forbes, transformers' lack of explainability and interpretability challenges their use in sensitive domains. Efforts to improve understanding focus on visualizing attention mechanisms and developing interpretative tools.

Addressing black-box nature

Addressing the black-box nature of transformers involves ongoing research efforts. Scientists are exploring ways to enhance the explainability of these models, making them more transparent and understandable. By improving interpretability, researchers hope to increase trust in transformer models, particularly in high-stakes applications like healthcare and finance.

Emerging Trends: Research trends, as highlighted in an article on Medium, focus on attention visualization and developing tools for interpreting attention patterns. These efforts aim to demystify the inner workings of transformer models, paving the way for broader adoption and trust in their capabilities.

Future Directions

Innovations in Attention Mechanisms

Adaptive attention

Adaptive attention represents a significant advancement in the field of transformer models. This mechanism allows models to dynamically adjust their focus based on the input data's complexity and relevance. By doing so, adaptive attention optimizes computational resources, ensuring that the model allocates more attention to critical parts of the data while minimizing focus on less important elements. This adaptability enhances the model's efficiency and accuracy, making it more effective in handling diverse tasks.

Sparse attention

Sparse attention emerges as another promising innovation. Unlike traditional dense attention mechanisms, sparse attention selectively attends to a subset of input tokens. This selective focus reduces computational overhead, making models more scalable and efficient. Sparse attention proves particularly beneficial when dealing with long sequences, as it mitigates the quadratic growth in computational demands. Researchers continue to explore ways to implement sparse attention effectively, aiming to balance performance and resource utilization.
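One simple sparse pattern is a sliding window, in which each token attends only to its nearest neighbors. The sketch below is an illustration under that assumption: the text above does not name a specific pattern, and the function name and scores are hypothetical.

```python
import math

def local_attention_weights(scores, window):
    """Softmax over each row, restricted to keys within `window` positions."""
    n = len(scores)
    weights = []
    for i in range(n):
        # Only positions close to the query position i are considered.
        allowed = [j for j in range(n) if abs(i - j) <= window]
        peak = max(scores[i][j] for j in allowed)
        exps = {j: math.exp(scores[i][j] - peak) for j in allowed}
        total = sum(exps.values())
        # Positions outside the window receive exactly zero weight.
        weights.append([exps.get(j, 0.0) / total for j in range(n)])
    return weights

# Made-up uniform scores for a sequence of 6 tokens.
scores = [[0.0] * 6 for _ in range(6)]
weights = local_attention_weights(scores, window=1)

# Count how many query-key pairs actually receive attention.
nonzero = sum(1 for row in weights for w in row if w > 0)
print(nonzero, "of", 6 * 6, "pairs are attended to")
```

With a fixed window, the number of attended pairs grows roughly linearly with sequence length instead of quadratically, at the price of losing direct connections between distant tokens.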

Evolving Transformer Architectures

Transformer variants

The evolution of transformer architectures has led to the development of various transformer variants. These variants aim to address specific challenges and enhance model performance across different applications. For instance, some variants focus on improving scalability by reducing the quadratic scaling of attention mechanisms. Others emphasize enhancing interpretability, making models more transparent and understandable. As researchers continue to innovate, these transformer variants hold the potential to revolutionize AI applications further.

Integration with other models

Integrating transformers with other models represents a promising direction for future research. By combining the strengths of transformers with other neural network architectures, researchers can create hybrid models that leverage the best of both worlds. This integration can lead to improved performance in complex tasks, such as multi-modal learning, where models process data from multiple sources simultaneously. As the field progresses, these integrated models may unlock new possibilities and applications in AI.

Scientific Research Findings: According to an article on Medium, new trends and research directions are shaping the future of transformers, focusing on innovations like adaptive and sparse attention. An article in Forbes highlights efforts to create transformer variants with sub-quadratic scaling, addressing scalability challenges.

Attention mechanisms have become a cornerstone in the evolution of deep learning models. They enable models to focus on the most relevant parts of input data, enhancing both efficiency and accuracy. This capability is crucial for tasks like natural language processing and computer vision, where capturing long-range dependencies is essential. As AI continues to evolve, attention mechanisms will likely play a pivotal role in integrating multimodal data, allowing models to process information across different domains simultaneously. The transformative impact of transformers, powered by attention, promises exciting advancements in AI, paving the way for innovative applications and solutions.
