The Role of Clustering in Data Preparation for Generative AI

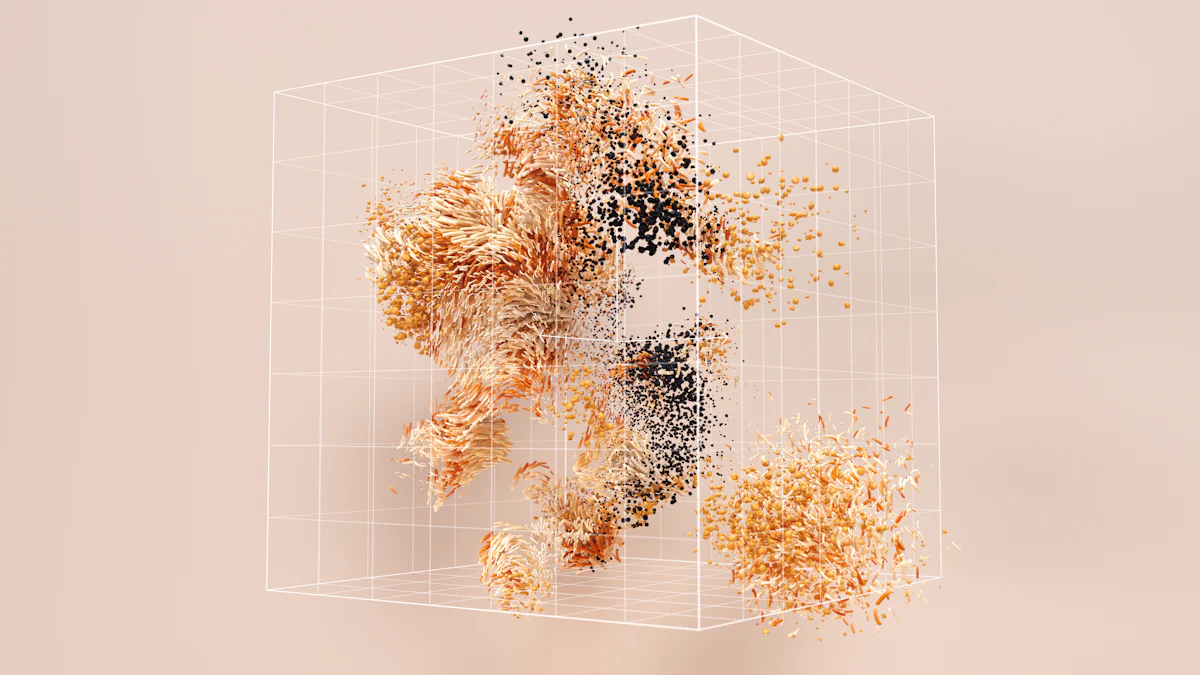

Clustering plays a pivotal role in preparing data for generative AI. It groups similar data points to uncover patterns and relationships within a dataset, which makes complex data structures easier to understand. Generative AI relies heavily on quality data, so it benefits significantly: when data is organized into meaningful clusters, models can learn more effectively and perform better. Proper data preparation, including clustering, helps ensure that the data fed into these models is clean, structured, and ready for analysis, which in turn improves the accuracy and reliability of AI-generated outputs.

Understanding Clustering

What is Clustering?

Definition and basic principles

Clustering involves grouping similar data points based on specific criteria. This technique helps in identifying patterns and relationships within datasets. By organizing data into clusters, researchers can simplify complex data structures, making them easier to analyze and understand. Clustering operates on the principle of similarity, where data points within a cluster share common characteristics, while those in different clusters exhibit distinct features.

Types of clustering methods

Several clustering methods exist, each with unique characteristics. The most common types include:

K-Means Clustering: This method partitions data into K clusters, where each data point belongs to the cluster with the nearest mean. It is efficient for large datasets but requires specifying the number of clusters beforehand.

Hierarchical Clustering: This approach builds a tree-like structure of clusters, either by merging smaller clusters into larger ones (agglomerative) or by splitting larger clusters into smaller ones (divisive). It provides a visual representation of data relationships but can be computationally intensive.

DBSCAN (Density-Based Spatial Clustering of Applications with Noise): This method groups data points based on density, identifying clusters of varying shapes and sizes. It is effective for datasets with noise and outliers but may struggle with varying density levels.

Importance of Clustering in Data Science

Role in data analysis

Clustering plays a crucial role in data analysis by enabling researchers to explore and understand data distributions. It allows data scientists to observe how data points are grouped, leading to valuable insights. For instance, a study on AI Clustering in Prevention Research demonstrated the accuracy of clustering methods in assigning individuals into known cluster solutions using binary data. This highlights clustering's effectiveness in handling complex datasets.

Benefits for AI applications

Clustering offers significant benefits for AI applications. It enhances data preparation by organizing data into meaningful clusters, which improves the learning process of AI models. Cluster assignments can also act as a compact summary of many raw features, which makes high-dimensional data more manageable for generative AI models. Additionally, clustering facilitates anomaly detection: points that fit no cluster well can be flagged as outliers and reviewed before training. A review on Data Clustering and Cluster Validation explained the mathematical operation of internal and external cluster validity indices, emphasizing the importance of validating clustering results to ensure accuracy and reliability in AI applications.

Types of Clustering Algorithms

K-Means Clustering

Process and characteristics

K-Means Clustering is one of the most popular clustering algorithms. It partitions data into a predefined number of clusters, denoted as K. The algorithm alternates between two steps: it assigns each data point to the cluster with the nearest mean, known as the centroid, and then recomputes each centroid as the mean of the points assigned to it. This iterative process continues until the centroids stabilize. K-Means is efficient for large datasets and works best when clusters are compact and well separated.
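
The assign-then-update loop can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the function name `kmeans`, the sample points, and the choice to seed the centroids with the first K points are all assumptions made to keep the example deterministic.

```python
import math

def kmeans(points, k, max_iter=100):
    """Lloyd's algorithm on a list of equal-length tuples.

    Assumption: the first k points seed the centroids, which keeps the
    sketch deterministic. Real implementations use smarter seeding.
    """
    centroids = [tuple(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid.
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(
                    tuple(sum(x) / len(members) for x in zip(*members))
                )
            else:
                new_centroids.append(centroids[c])  # empty cluster keeps its centroid
        if new_centroids == centroids:  # centroids stabilized
            break
        centroids = new_centroids
    return labels, centroids

# Made-up 2-D data with two visible groups.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5), (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
labels, centroids = kmeans(points, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

On this toy data the second point starts in the wrong cluster and is reassigned on the next pass, which shows why the loop has to repeat until nothing changes.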

Advantages and limitations

K-Means offers several advantages. It is computationally efficient, easy to implement, and scales well to large datasets. However, it requires the number of clusters to be specified beforehand, which can be challenging without prior knowledge. Its results also depend on the initial choice of centroids, and it struggles with non-spherical clusters and with clusters of very different sizes.

Hierarchical Clustering

Process and characteristics

Hierarchical Clustering builds a tree-like structure of clusters, known as a dendrogram. This method can be agglomerative, where smaller clusters merge into larger ones, or divisive, where larger clusters split into smaller ones. Hierarchical Clustering does not require a predefined number of clusters, offering flexibility in data analysis.
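
The agglomerative variant can be sketched as a loop that repeatedly merges the two closest clusters. The version below is a naive illustration under stated assumptions: it uses single linkage (distance between the closest pair of members), the function name `agglomerative` and the sample points are hypothetical, and it stops at a requested number of clusters, which corresponds to cutting the dendrogram at that level.

```python
import math

def agglomerative(points, n_clusters):
    """Naive single-linkage agglomerative clustering.

    Returns the final clusters (lists of point indices) and the merge
    history, which is the information a dendrogram would draw.
    """
    clusters = [[i] for i in range(len(points))]
    merges = []
    while len(clusters) > n_clusters:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # Single linkage: distance between the closest pair of members.
                d = min(
                    math.dist(points[i], points[j])
                    for i in clusters[a]
                    for j in clusters[b]
                )
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        merges.append((clusters[a], clusters[b], d))
        clusters[a] = clusters[a] + clusters[b]  # merge b into a
        del clusters[b]
    return clusters, merges

# Made-up 2-D data: two tight pairs and one isolated point.
points = [(0, 0), (0, 1), (5, 5), (5, 6), (10, 0)]
clusters, merges = agglomerative(points, n_clusters=3)
print(clusters)  # [[0, 1], [2, 3], [4]]
```

The nested loops compare every pair of clusters at every merge, which is the source of the computational cost on large datasets.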

Advantages and limitations

Hierarchical Clustering provides a visual representation of data relationships, making it easier to interpret complex datasets. It does not require specifying the number of clusters beforehand, because the dendrogram can be cut at whatever level suits the analysis. However, it can be computationally intensive, especially with large datasets, since the standard agglomerative approach compares every pair of clusters at each merge. The method may also struggle with noise and outliers, which affects the accuracy of the clustering.

DBSCAN Clustering

Process and characteristics

DBSCAN (Density-Based Spatial Clustering of Applications with Noise) groups data points based on density. It identifies clusters of varying shapes and sizes by locating regions of high density separated by regions of low density. DBSCAN effectively handles noise and outliers, making it suitable for complex datasets.
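
A compact sketch of the density-based idea follows. It is a simplified, quadratic-time illustration rather than an optimized implementation; the function name `dbscan`, the parameter names `eps` (neighborhood radius) and `min_pts` (points needed for a dense region), and the sample data are assumptions for this example.

```python
import math

def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise."""
    UNVISITED, NOISE = None, -1
    labels = [UNVISITED] * len(points)

    def neighbors(i):
        # All points within eps of point i, including i itself.
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not UNVISITED:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE  # may later be claimed as a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = list(seeds)
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point reached from a core point
            if labels[j] is not UNVISITED:
                continue
            labels[j] = cluster
            j_neighbors = neighbors(j)
            if len(j_neighbors) >= min_pts:  # j is a core point: keep expanding
                queue.extend(j_neighbors)
    return labels

# Made-up data: a dense square, a dense triangle, and one far-away point.
points = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (50, 50)]
labels = dbscan(points, eps=1.5, min_pts=3)
print(labels)  # [0, 0, 0, 0, 1, 1, 1, -1]
```

The isolated point receives the label -1 instead of being forced into a cluster, which is how the method separates noise from structure.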

Advantages and limitations

DBSCAN excels at identifying clusters of arbitrary shapes and sizes. It does not require specifying the number of clusters beforehand, and it labels points in low-density regions as noise rather than forcing them into a cluster. However, DBSCAN may struggle when clusters differ widely in density, because one density threshold applies to the whole dataset. The choice of parameters, namely the neighborhood radius and the minimum number of points that make a region dense, can significantly impact the clustering results.

Case Studies:

Comparison of Clustering Methods with Binary Data: Researchers observed similar levels of accuracy with binary data across different clustering methods. Hierarchical Clustering produced interpretable solutions even with very small samples.

Application of Clustering Methods in Prevention Research: Clustering methods accurately assigned individuals into known cluster solutions using binary data, demonstrating their effectiveness in handling complex datasets.

Clustering in Data Preparation

Clustering plays a crucial role in refining datasets for generative AI. By organizing data into meaningful groups, it enhances the quality and usability of data, which is essential for AI models to perform effectively. This section explores how clustering aids in data cleaning and transformation, two vital steps in preparing data for AI applications.

Data Cleaning

Data cleaning is a fundamental step in data preparation. Clustering supports it by helping to identify and handle outliers and to deal with missing data, which protects the dataset's integrity.

Identifying and handling outliers

Outliers can significantly skew the results of data analysis. Clustering helps in detecting these anomalies by grouping similar data points together. When a data point does not fit well into any cluster, it may indicate an outlier. By identifying these outliers, data scientists can decide whether to remove them or adjust their values to maintain data consistency. For instance, DBSCAN Clustering excels in handling noise and outliers, making it a preferred choice for datasets with irregularities.
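
One simple way to make "does not fit well into any cluster" concrete is to measure each point's distance to its nearest cluster centroid and flag the unusually large ones. The sketch below assumes centroids are already available from a previous clustering step; the function name `flag_outliers` and the cutoff of three times the median distance are hypothetical choices for illustration, not a standard rule.

```python
import math
import statistics

def flag_outliers(points, centroids, factor=3.0):
    """Flag points whose distance to the nearest centroid is unusually large.

    Assumption: the cutoff is `factor` times the median distance, a rule of
    thumb picked for this sketch.
    """
    dists = [min(math.dist(p, c) for c in centroids) for p in points]
    cutoff = factor * statistics.median(dists)
    return [d > cutoff for d in dists]

# Made-up centroids and points; the last point sits between the two clusters.
centroids = [(1.0, 1.0), (8.0, 8.0)]
points = [(1.0, 1.2), (0.8, 1.0), (1.1, 0.9), (8.0, 8.3), (7.9, 8.0), (4.5, 4.5)]
flags = flag_outliers(points, centroids)
print(flags)  # [False, False, False, False, False, True]
```

Whether a flagged point is removed, corrected, or kept remains a judgment call for the data scientist, as described above.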

Dealing with missing data

Missing data poses challenges in data analysis. Clustering assists in addressing this issue by grouping data points with similar characteristics. Once clusters are formed, data scientists can estimate missing values based on the characteristics of the cluster to which the incomplete data point belongs. This approach ensures that the dataset remains robust and reliable for AI model training.
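
The cluster-based estimate described above can be sketched as follows. The example assumes that cluster centroids were computed beforehand from complete rows; the function name `impute_from_clusters` and the sample values are hypothetical. Each incomplete row is matched to its nearest centroid using only the features it has, and the gaps are filled from that centroid.

```python
import math

def impute_from_clusters(rows, centroids):
    """Fill None entries with the matching coordinate of the nearest centroid.

    Nearness is measured only over the features that are present in the row.
    """
    completed = []
    for row in rows:
        observed = [i for i, v in enumerate(row) if v is not None]
        nearest = min(
            centroids,
            key=lambda c: math.sqrt(sum((row[i] - c[i]) ** 2 for i in observed)),
        )
        completed.append(
            tuple(nearest[i] if v is None else v for i, v in enumerate(row))
        )
    return completed

# Made-up centroids (assumed to come from clustering the complete rows).
centroids = [(1.0, 2.0, 3.0), (10.0, 20.0, 30.0)]
rows = [(1.2, None, 2.9), (9.5, 21.0, None), (1.0, 2.0, 3.0)]
filled = impute_from_clusters(rows, centroids)
print(filled)  # [(1.2, 2.0, 2.9), (9.5, 21.0, 30.0), (1.0, 2.0, 3.0)]
```

Compared with filling every gap with a single global mean, this keeps imputed values consistent with the group the row most resembles.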

Data Transformation

Data transformation is another critical aspect of data preparation. It involves normalizing and standardizing data, as well as selecting and extracting relevant features.

Normalization and standardization

Normalization and standardization are essential for ensuring that data features are on the same scale. Clustering requires calculating the similarity between data points, which can be skewed if features have different scales. By normalizing and standardizing data, clustering algorithms can more accurately group similar data points, leading to more meaningful clusters. K-Means Clustering, for example, benefits from standardized data as it relies on distance calculations to form clusters.
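
The effect of scale on distance calculations is easy to see with a small, made-up example. The sketch below standardizes each column to zero mean and unit standard deviation (z-scores) and compares distances before and after; the function name `standardize` and the age and income values are assumptions for illustration.

```python
import math
import statistics

def standardize(rows):
    """Z-score each column: subtract the column mean, divide by its std. dev."""
    columns = list(zip(*rows))
    means = [statistics.fmean(col) for col in columns]
    # Guard against constant columns, whose standard deviation is zero.
    stds = [statistics.pstdev(col) or 1.0 for col in columns]
    return [
        tuple((v - m) / s for v, m, s in zip(row, means, stds))
        for row in rows
    ]

# Hypothetical records: (age in years, income in dollars).
rows = [(25, 40_000), (55, 41_000), (26, 90_000)]
scaled = standardize(rows)

# Rows 0 and 1 differ mainly in age; rows 0 and 2 differ mainly in income.
raw_gap_age = math.dist(rows[0], rows[1])
raw_gap_income = math.dist(rows[0], rows[2])
scaled_gap_age = math.dist(scaled[0], scaled[1])
scaled_gap_income = math.dist(scaled[0], scaled[2])

print(round(raw_gap_income / raw_gap_age, 1))        # income gap dominates
print(round(scaled_gap_income / scaled_gap_age, 2))  # gaps are comparable
```

On the raw values the income difference is roughly fifty times larger than the age difference, so a distance-based method would effectively ignore age. After standardization the two gaps are of similar size.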

Feature selection and extraction

Feature selection and extraction simplify complex datasets by focusing on the most relevant data attributes. Clustering aids in this process by highlighting which features contribute most to the formation of clusters. Features that do little to separate the clusters are candidates for removal, and the resulting lower-dimensional data is more manageable for generative AI models. This process not only improves model efficiency but also enhances the interpretability of the results.

Comparative Data:

Hierarchical Clustering and K-Means Clustering show similar accuracy levels with binary data. Hierarchical Clustering performs well even with dichotomous data, contrary to some beliefs. However, K-Means may not perform optimally with small samples, particularly those smaller than N=50.

Practical Applications of Clustering in Generative AI

Image Generation

Clustering for Image Segmentation

Clustering plays a vital role in image segmentation. It groups pixels with similar characteristics, allowing for the division of an image into meaningful segments. This process simplifies complex images, making them easier to analyze and interpret. By identifying patterns within the image data, clustering enhances the ability of generative AI models to understand and recreate visual content. For instance, when applied to medical imaging, clustering can help in segmenting different tissues, aiding in accurate diagnosis.
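
As a minimal illustration of grouping pixels by similarity, the sketch below splits a tiny grayscale image into two segments by clustering pixel intensities with a one-dimensional, two-cluster K-Means. The function name `segment_two_levels` and the pixel values are made up; real segmentation pipelines typically use more features than raw intensity.

```python
def segment_two_levels(image, max_iter=50):
    """Split a grayscale image (rows of 0-255 ints) into two segments by
    running 1-D K-Means with K=2 on the pixel intensities."""
    pixels = [v for row in image for v in row]
    # Initial centroids: the darkest and brightest pixel values.
    low, high = float(min(pixels)), float(max(pixels))
    for _ in range(max_iter):
        dark = [v for v in pixels if abs(v - low) <= abs(v - high)]
        bright = [v for v in pixels if abs(v - low) > abs(v - high)]
        new_low = sum(dark) / len(dark)
        new_high = sum(bright) / len(bright) if bright else high
        if (new_low, new_high) == (low, high):  # centroids stabilized
            break
        low, high = new_low, new_high
    # Segment mask: 0 for the darker segment, 1 for the brighter one.
    return [
        [0 if abs(v - low) <= abs(v - high) else 1 for v in row]
        for row in image
    ]

# Made-up 3x4 image with a dark region on the left and a bright one on the right.
image = [
    [12, 15, 200, 210],
    [10, 18, 205, 198],
    [14, 190, 202, 207],
]
mask = segment_two_levels(image)
print(mask)  # [[0, 0, 1, 1], [0, 0, 1, 1], [0, 1, 1, 1]]
```

The resulting mask marks which segment each pixel belongs to, which is the kind of simplified representation the paragraph above describes.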

Enhancing Image Quality

Clustering also contributes to enhancing image quality. By grouping similar pixel values, it helps in noise reduction and improves the clarity of images. This process allows generative AI models to produce high-quality images with better resolution and detail. Clustering techniques can identify and correct anomalies in image data, ensuring that the generated images are both realistic and visually appealing. This capability is particularly beneficial in fields like digital art and virtual reality, where image quality is paramount.

Text Generation

Clustering for Topic Modeling

In text generation, clustering aids in topic modeling by grouping similar words or phrases. This technique helps in identifying the underlying themes within a text corpus. By organizing text data into clusters, generative AI models can better understand the context and generate coherent and relevant content. Clustering for topic modeling proves invaluable in applications like content recommendation systems and automated summarization, where understanding the main topics is crucial.

Improving Text Coherence

Clustering can support text coherence by helping generated content stay on topic and follow a logical flow. By grouping related words, phrases, or passages, it gives generative AI models a clearer picture of which content belongs together, which helps maintain consistency throughout the text. The result is text that reads more naturally and makes sense to the reader. Improved text coherence is essential in applications such as chatbots and virtual assistants, where clear and understandable communication is key.

Key Insight: Clustering in generative AI not only simplifies complex data but also enhances the quality and coherence of generated outputs. By grouping similar data points, clustering reveals patterns and relationships that improve the performance of AI models.

Validation of Clustering Results

Evaluating Clustering Performance

Evaluating the performance of clustering algorithms is crucial to ensure their effectiveness in data preparation for generative AI. Researchers use various validation measures to assess clustering quality.

Internal validation measures

Internal validation measures focus on the data itself to evaluate clustering performance. These measures assess how well the data points are grouped within clusters. A common approach involves analyzing intracluster distances, which measure the compactness of clusters. Lower intracluster distances indicate that data points within a cluster are closely packed, suggesting a high-quality clustering result. Another important aspect is intercluster distances, which evaluate the separation between different clusters. Greater intercluster distances imply distinct and well-separated clusters. By examining both intracluster and intercluster distances, researchers can gain insights into the clustering quality.
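
Both quantities can be computed directly from the points and their cluster labels. The sketch below uses one simple definition of each, chosen for illustration: compactness as the mean distance from a point to its own cluster centroid, and separation as the smallest distance between any two centroids. The function names and sample data are hypothetical, and the code assumes at least two clusters.

```python
import math
from itertools import combinations

def centroid(members):
    """Mean of a list of equal-length tuples."""
    return tuple(sum(x) / len(members) for x in zip(*members))

def compactness_and_separation(points, labels):
    """Return (mean point-to-own-centroid distance,
    smallest centroid-to-centroid distance)."""
    groups = {}
    for p, lab in zip(points, labels):
        groups.setdefault(lab, []).append(p)
    centers = {lab: centroid(members) for lab, members in groups.items()}
    intra = sum(
        math.dist(p, centers[lab]) for p, lab in zip(points, labels)
    ) / len(points)
    inter = min(math.dist(a, b) for a, b in combinations(centers.values(), 2))
    return intra, inter

# Made-up data: two vertical pairs, far apart horizontally.
points = [(0.0, 0.0), (0.0, 2.0), (10.0, 0.0), (10.0, 2.0)]
good = compactness_and_separation(points, [0, 0, 1, 1])
bad = compactness_and_separation(points, [0, 1, 0, 1])
print(good)  # (1.0, 10.0)
print(bad)   # (5.0, 2.0)
```

The sensible grouping has a low intracluster distance and a high intercluster distance, while the poor grouping shows the opposite pattern.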

External validation measures

External validation measures compare the clustering results to a predefined structure or ground truth. These measures provide an objective assessment of clustering accuracy. One widely used external validation measure is the Rand Index, which is the fraction of pairs of data points on which the clustering and the true classification agree, meaning the pair is placed together in both or apart in both. A higher Rand Index indicates better clustering performance. Another measure, the Adjusted Rand Index, corrects for the agreement expected by chance, so a random labeling scores close to 0 and a perfect match scores 1. By utilizing external validation measures, researchers can determine how well the clustering algorithm aligns with the expected outcomes.
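
Both indices can be computed from pair counts. The sketch below is a small pure-Python illustration with made-up labels; the function names are chosen for this example. The Rand Index counts agreeing pairs directly, and the Adjusted Rand Index uses the standard pair-counting formula (index minus expected index, divided by maximum index minus expected index).

```python
from collections import Counter
from itertools import combinations
from math import comb

def rand_index(truth, pred):
    """Fraction of point pairs on which the two labelings agree."""
    pairs = list(combinations(range(len(truth)), 2))
    agree = sum(
        (truth[i] == truth[j]) == (pred[i] == pred[j]) for i, j in pairs
    )
    return agree / len(pairs)

def adjusted_rand_index(truth, pred):
    """Rand index corrected for the agreement expected by chance."""
    n = len(truth)
    same_both = sum(comb(c, 2) for c in Counter(zip(truth, pred)).values())
    same_truth = sum(comb(c, 2) for c in Counter(truth).values())
    same_pred = sum(comb(c, 2) for c in Counter(pred).values())
    expected = same_truth * same_pred / comb(n, 2)
    max_index = (same_truth + same_pred) / 2
    if max_index == expected:  # degenerate case, e.g. one cluster in both
        return 1.0
    return (same_both - expected) / (max_index - expected)

# Made-up ground truth and a clustering that gets one point wrong.
truth = [0, 0, 0, 1, 1, 1]
pred = [1, 1, 0, 0, 0, 0]
print(round(rand_index(truth, pred), 3))           # 0.667
print(round(adjusted_rand_index(truth, pred), 3))  # 0.324
```

Label names do not matter to either index: a clustering that matches the ground truth with the cluster ids swapped still scores 1. The gap between 0.667 and 0.324 on this example shows how much of the raw agreement is attributable to chance.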

Improving Clustering Outcomes

To enhance clustering outcomes, researchers can employ various strategies that optimize algorithm performance.

Parameter tuning

Parameter tuning involves adjusting the parameters of clustering algorithms to achieve optimal results. For instance, in K-Means Clustering, selecting the appropriate number of clusters (K) is crucial. Researchers can experiment with different values of K to identify the configuration that yields the best clustering performance. Similarly, in DBSCAN Clustering, tuning parameters such as the neighborhood radius can significantly impact the clustering results. By fine-tuning these parameters, researchers can improve the accuracy and reliability of clustering outcomes.
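
Experimenting with different values of K can be sketched as a loop that records a quality score for each candidate. The example below uses the within-cluster sum of squared distances (often called inertia) and looks for the point where adding clusters stops helping much, a common heuristic usually called the elbow method. This is one possible approach, not the only one; the function name `kmeans_inertia`, the farthest-point seeding, and the sample data are assumptions made to keep the sketch deterministic.

```python
import math

def kmeans_inertia(points, k, max_iter=100):
    """Run a small K-Means and return the within-cluster sum of squared distances."""
    # Farthest-point seeding: deterministic, used only to keep this sketch reproducible.
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(
            max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        )
    for _ in range(max_iter):
        groups = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            groups[nearest].append(p)
        updated = [
            tuple(sum(x) / len(g) for x in zip(*g)) if g else centroids[c]
            for c, g in enumerate(groups)
        ]
        if updated == centroids:  # centroids stabilized
            break
        centroids = updated
    return sum(min(math.dist(p, c) for c in centroids) ** 2 for p in points)

# Made-up data with three visible groups of three points each.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10),
          (20, 0), (20, 1), (21, 0)]
inertia_by_k = {k: kmeans_inertia(points, k) for k in range(1, 5)}
for k, inertia in inertia_by_k.items():
    print(k, round(inertia, 2))
```

On this data the score drops sharply up to K=3 and only slightly at K=4, which suggests that three clusters is a reasonable choice. The same pattern of sweeping a parameter and comparing a validation score applies to the DBSCAN neighborhood radius.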

Algorithm selection

Choosing the right clustering algorithm is essential for achieving desired results. Different algorithms have unique characteristics and are suited for specific types of data. For example, Hierarchical Clustering is ideal for datasets with a hierarchical structure, while DBSCAN excels in handling noise and outliers. Researchers should consider the nature of the data and the specific requirements of the generative AI application when selecting a clustering algorithm. By aligning the algorithm choice with the data characteristics, researchers can enhance the effectiveness of clustering in data preparation.

Scientific Research Findings:

A study on Evaluation of Clustering Validation Metrics and Performance on Common Algorithms highlights the importance of selecting appropriate validation measures for clustering evaluation.

Another study, Accuracy Assessment of Clustering Methods and Factors Influencing Performance, emphasizes the impact of sample size and other factors on clustering accuracy.

The research on Evaluation of Clustering Quality with Intracluster and Intercluster Distances underscores the significance of assessing both intracluster and intercluster distances to evaluate clustering quality.

Challenges in Clustering for Generative AI

Scalability Issues

Handling large datasets

Clustering large datasets presents significant challenges. As data volume increases, the time and memory that clustering algorithms require also rise. Researchers must manage these vast datasets efficiently to ensure accurate clustering results. Earlier studies have shown that different clustering methods can accurately assign individuals into known cluster solutions, even with small samples of binary data. Handling large datasets, however, requires more sophisticated techniques and computational resources.

Computational complexity

The computational complexity of clustering algorithms can hinder their application in generative AI. Algorithms like hierarchical clustering, while effective, often struggle with large datasets due to their intensive computational requirements. Despite this, hierarchical clustering has consistently produced usable solutions with smaller samples. Researchers must balance the need for detailed clustering with the available computational power to optimize performance.

Data Quality Concerns

Impact of noisy data

Noisy data can significantly affect the quality of clustering results. Clustering algorithms may misinterpret noise as meaningful patterns, leading to inaccurate groupings. This issue becomes more pronounced in generative AI, where the quality of input data directly impacts model performance. Researchers must implement strategies to identify and mitigate noise within datasets to maintain clustering accuracy.

Ensuring data diversity

Ensuring data diversity is crucial for effective clustering. Diverse datasets provide a comprehensive view of the data landscape, allowing clustering algorithms to identify distinct patterns and relationships. Without sufficient diversity, clustering results may lack generalizability, limiting their applicability in generative AI. Researchers should strive to include varied data sources to enhance the robustness and reliability of clustering outcomes.

Future Trends in Clustering and Generative AI

Advances in Clustering Techniques

Emerging algorithms

Clustering techniques continue to evolve, with new algorithms emerging to address the limitations of traditional methods. Researchers develop innovative approaches that enhance clustering accuracy and efficiency. These emerging algorithms focus on handling large datasets, improving computational speed, and increasing robustness against noise. By leveraging advanced mathematical models and computational techniques, these algorithms offer more precise clustering results, which are crucial for generative AI applications.

Integration with deep learning

The integration of clustering with deep learning represents a significant advancement in the field. Deep learning models, known for their ability to learn complex patterns, can benefit from clustering techniques that simplify data structures. By combining these two approaches, researchers can enhance the performance of generative AI models. Clustering helps in feature extraction and dimensionality reduction, allowing deep learning models to focus on the most relevant data attributes. This integration leads to more accurate and efficient AI models capable of generating high-quality outputs.

Implications for Generative AI

Enhanced model capabilities

The advancements in clustering techniques have profound implications for generative AI. Enhanced clustering algorithms improve the quality of data preparation, leading to more accurate AI models. By organizing data into meaningful clusters, generative AI models can learn more effectively, resulting in improved performance. These models can generate more realistic and coherent outputs, whether in image, text, or other forms of content. The ability to handle complex datasets with precision allows generative AI to tackle more challenging tasks and produce superior results.

New applications and opportunities

The future of clustering in generative AI holds exciting possibilities for new applications and opportunities. As clustering techniques advance, they enable AI models to explore uncharted territories and address novel challenges. For instance, by analyzing historical data and clustering it based on relevant variables, AI can predict customer preferences, demand patterns, and market shifts. This capability opens doors to innovative applications in fields such as marketing, healthcare, and finance. The continuous evolution of clustering techniques will drive the expansion of generative AI into new domains, offering unprecedented opportunities for growth and innovation.

Clustering plays a crucial role in data preparation for generative AI. It organizes vast datasets into meaningful groups, revealing patterns and relationships. This process enhances AI models' learning capabilities, leading to improved performance. Effective clustering allows AI to make informed decisions and drive innovation by providing structured insights. As technology evolves, clustering techniques will continue to advance, offering new opportunities for AI applications. The future holds exciting potential for clustering in AI, promising enhanced model capabilities and novel applications across various fields.
