Understanding Backpropagation in Deep Learning for Generative AI

Backpropagation is the backbone of neural network training: it is the algorithm that lets a network learn from its mistakes and improve its performance. Backpropagation computes the gradient of a loss function with respect to every weight in the network, and an optimizer such as gradient descent then uses those gradients to update the weights. You will find backpropagation indispensable in generative AI, where efficiently computed gradients are what allow models to learn to create new content. Its generality and efficiency have made it the standard training method in machine learning and a driver of advances in artificial intelligence. Understanding this concept is essential for anyone delving into neural networks and AI.

Basics of Neural Networks

Understanding neural networks begins with grasping their basic structure and how they function. These networks are loosely inspired by the structure of the human brain, and they allow machines to learn from data and make predictions.

Structure of Neural Networks

Neurons and Layers

Neural networks consist of interconnected units called neurons. Each neuron receives inputs, computes a weighted sum of them plus a bias, applies an activation function, and passes the result on to the next layer. Layers are the building blocks of the network: the input layer receives data, the hidden layers transform it, and the output layer delivers the final result. This layered structure allows the network to learn complex patterns and relationships within the data.
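The following minimal Python sketch makes the neuron-and-layer description concrete by computing one fully connected layer. Several details are illustrative assumptions and are not part of any particular library: the function names `dense_layer`, `relu`, and `identity`, the weight layout (`weights[j][i]` connects input `i` to neuron `j`), and the numeric values.

```python
def relu(z):
    # ReLU activation: passes positive values through, zeroes out negatives
    return max(0.0, z)

def identity(z):
    # Linear activation, often used for a regression output layer
    return z

def dense_layer(inputs, weights, biases, activation):
    """Compute one fully connected layer.

    weights[j][i] is the (assumed) weight from input i to neuron j.
    """
    outputs = []
    for w_row, b in zip(weights, biases):
        # Each neuron: weighted sum of its inputs plus a bias ...
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        # ... passed through the activation function
        outputs.append(activation(z))
    return outputs

# 2 inputs -> 3 hidden neurons -> 1 output neuron (arbitrary example weights)
x = [1.0, 2.0]
hidden = dense_layer(x, [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1], relu)
output = dense_layer(hidden, [[1.0, -1.0, 0.5]], [0.2], identity)
print(hidden, output)
```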

Activation Functions

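Activation functions play a crucial role in neural networks. Applied to each neuron's weighted input, they introduce the nonlinearity that lets a network represent more than a linear function of its inputs. Common activation functions include the sigmoid, tanh, and ReLU (Rectified Linear Unit). Each function has its own properties. Sigmoid squashes values into the range (0, 1) and tanh into (-1, 1), but both saturate for large inputs, which shrinks their gradients. ReLU is popular because it is cheap to compute and its gradient does not shrink for positive inputs, which helps gradients flow through deep networks.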
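During backpropagation, what matters is the derivative of each activation function. The sketch below compares those derivatives for the three functions named here. It uses plain Python with the standard `math` module. The helper names are hypothetical, and treating ReLU's derivative at exactly zero as 0 is one common convention.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)             # never larger than 0.25

def tanh_grad(z):
    return 1.0 - math.tanh(z) ** 2   # at most 1.0, shrinks as |z| grows

def relu(z):
    return max(0.0, z)

def relu_grad(z):
    return 1.0 if z > 0 else 0.0     # derivative at exactly 0 taken as 0 here

for z in (-5.0, 0.0, 5.0):
    print(f"z={z:+.1f}  sigmoid'={sigmoid_grad(z):.4f}  "
          f"tanh'={tanh_grad(z):.4f}  relu'={relu_grad(z):.1f}")
```

The sigmoid derivative peaks at 0.25 and nearly vanishes at |z| = 5. ReLU's derivative stays at 1 for any positive input, a gradient-flow property that is a major reason ReLU is widely used in deep networks.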

Training Neural Networks

Training a neural network involves teaching it to make accurate predictions by adjusting its parameters.

Forward Propagation

In forward propagation, data moves through the network from the input layer to the output layer. Each layer transforms the output of the previous one and passes the result on, until the network produces its prediction. The intermediate values computed along the way are kept, because backpropagation reuses them when computing gradients.
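A forward pass through several layers can be sketched as a loop that feeds each layer's output into the next and keeps every intermediate activation for later use. The `forward` function, the `(weights, biases, activation)` layer format, and the weights are assumptions chosen for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, layers):
    """Run x through a list of (weights, biases, activation) layers.

    Returns the final output plus the list of all activations (input included),
    which a backward pass would reuse.
    """
    activations = [x]
    for weights, biases, activation in layers:
        prev = activations[-1]
        # Pre-activation of every neuron in this layer
        z = [sum(w * a for w, a in zip(row, prev)) + b for row, b in zip(weights, biases)]
        activations.append([activation(v) for v in z])
    return activations[-1], activations

layers = [
    ([[0.2, -0.5], [0.7, 0.1]], [0.0, 0.0], math.tanh),  # hidden layer: 2 -> 2
    ([[1.0, -1.0]], [0.0], sigmoid),                     # output layer: 2 -> 1
]
y_hat, cache = forward([1.0, 0.5], layers)
print(y_hat, len(cache))  # cache holds the input plus one entry per layer
```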

Loss Functions

Loss functions measure the difference between the network's predictions and the actual outcomes. They provide a way to quantify how well the network is performing. By minimizing the loss, you can improve the network's accuracy. Common loss functions include mean squared error and cross-entropy loss. Their gradients guide how the network adjusts its weights and biases to reduce errors and enhance performance.
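Both loss functions mentioned here are short to write down. This sketch uses the binary (two-class) form of cross-entropy. It clamps predictions with a small `eps` so that `log(0)` never occurs. The function names and sample numbers are illustrative.

```python
import math

def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    total = 0.0
    for t, p in zip(y_true, y_pred):
        # Clamp predictions away from 0 and 1 so log() never receives 0
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)

targets = [1.0, 0.0, 1.0]
good = [0.9, 0.1, 0.8]   # predictions close to the targets
bad = [0.2, 0.7, 0.4]    # predictions far from the targets
print(mean_squared_error(targets, good), mean_squared_error(targets, bad))
print(binary_cross_entropy(targets, good), binary_cross_entropy(targets, bad))
```

In both cases the worse predictions produce the larger loss, which is the signal backpropagation turns into weight updates.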

Scientific Research Findings: According to an article published on LinkedIn, backpropagation plays a vital role in reducing errors and improving outcomes in neural networks. As IBM Think highlights, the process works by calculating how each weight and bias should change to produce better predictions.

By understanding these fundamental concepts, you can appreciate how neural networks function and their potential in various applications.

Mechanics of Backpropagation

Understanding the mechanics of backpropagation is essential for grasping how neural networks learn and improve. This process involves a series of steps that adjust the network's parameters to minimize errors and enhance performance.

The Backpropagation Algorithm

The backpropagation algorithm is a cornerstone of neural network training. It systematically computes how much each weight contributed to the prediction error, so that the weights can then be updated to reduce it.

Chain Rule in Calculus

The chain rule in calculus plays a pivotal role in backpropagation. It allows you to compute the derivative of a composite function. In the context of neural networks, this means you can calculate how changes in weights affect the overall error. By applying the chain rule, you can determine the gradient of the loss function with respect to each weight. This information is crucial for updating the weights in a way that minimizes the error.
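As a worked example of the chain rule, consider a single neuron with a sigmoid activation and a squared-error loss: z = w·x + b, a = σ(z), L = ½(a - y)². The chain rule gives ∂L/∂w = (a - y) · σ(z)(1 - σ(z)) · x. The sketch below computes this product and compares it with a central finite-difference estimate, a common way to sanity-check hand-derived gradients. The specific values and function names are assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    # Composite function: z = w*x + b, a = sigmoid(z), L = 0.5 * (a - y)^2
    a = sigmoid(w * x + b)
    return 0.5 * (a - y) ** 2

def grad_w_chain_rule(w, b, x, y):
    a = sigmoid(w * x + b)
    dL_da = a - y                 # derivative of 0.5*(a - y)^2 with respect to a
    da_dz = a * (1.0 - a)         # derivative of the sigmoid
    dz_dw = x                     # derivative of w*x + b with respect to w
    return dL_da * da_dz * dz_dw  # chain rule: multiply the local derivatives

def grad_w_numeric(w, b, x, y, h=1e-6):
    # Central finite difference, used only to check the analytic result
    return (loss(w + h, b, x, y) - loss(w - h, b, x, y)) / (2 * h)

w, b, x, y = 0.8, -0.1, 1.5, 0.0
print(grad_w_chain_rule(w, b, x, y), grad_w_numeric(w, b, x, y))
```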

Gradient Descent

Gradient descent is the optimization technique typically used together with backpropagation. Backpropagation computes the gradient, and gradient descent uses it to adjust the weights in the direction that reduces the error. The gradient of the loss function points in the direction of steepest ascent, so moving in the opposite direction descends toward lower error. This iterative process repeats until the loss stops improving or a set number of iterations is reached. In practice it usually settles in a local minimum rather than a guaranteed global optimum. Studies highlighting backpropagation's speed, memory efficiency, and generic applicability help explain why it is the standard way to train neural networks.
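In its simplest form, gradient descent is a loop that repeatedly steps against the gradient. This sketch minimizes the one-parameter loss L(w) = (w - 3)². Its gradient, 2(w - 3), is supplied by hand here; in a neural network that gradient would come from backpropagation. The function name and default values are assumptions.

```python
def gradient_descent(grad, w0, learning_rate=0.1, steps=100):
    w = w0
    for _ in range(steps):
        w = w - learning_rate * grad(w)   # step against the gradient (downhill)
    return w

# Example loss L(w) = (w - 3)^2 has its minimum at w = 3 and gradient 2 * (w - 3)
w_final = gradient_descent(lambda w: 2 * (w - 3), w0=0.0)
print(w_final)  # close to 3.0
```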

Adjusting Weights

Adjusting the weights of a neural network is a critical step in the learning process. It ensures that the network can make accurate predictions based on the input data.

Error Minimization

Error minimization is the primary goal of backpropagation. By continuously adjusting the weights, you can reduce the difference between the predicted and actual outcomes. This process involves calculating the error at each layer and propagating it backward through the network. By doing so, you can identify which weights need adjustment to minimize the error. This method has revolutionized machine learning by enabling neural networks to learn from data and improve their performance.
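The sketch below puts these pieces together. It trains a tiny network (two inputs, one tanh hidden layer of four units, one linear output) with hand-written backpropagation. It computes the error at the output, propagates it backward through the output weights and the tanh derivative, and then uses it to update every weight. Several choices are illustrative assumptions: the architecture, the toy target function, the learning rate, and the epoch count. Updating after every example (stochastic gradient descent) is one choice among several.

```python
import math
import random

def train_step(x, y, p, lr):
    """One forward + backward pass for a network with one tanh hidden layer and a linear output.

    p is a dict of parameters (W1, b1, w2, b2) that is updated in place.
    Returns the squared-error loss measured before the update.
    """
    # Forward pass: keep z1 / h because the backward pass reuses them
    z1 = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(p["W1"], p["b1"])]
    h = [math.tanh(z) for z in z1]
    y_hat = sum(w * hj for w, hj in zip(p["w2"], h)) + p["b2"]
    loss = 0.5 * (y_hat - y) ** 2

    # Backward pass: the error at the output ...
    delta_out = y_hat - y  # dL/dy_hat
    # ... is sent back through w2 and scaled by tanh'(z1) = 1 - h^2
    delta_hidden = [delta_out * p["w2"][j] * (1 - h[j] ** 2) for j in range(len(h))]

    # Gradient descent step on every weight and bias
    for j in range(len(h)):
        p["w2"][j] -= lr * delta_out * h[j]
        p["b1"][j] -= lr * delta_hidden[j]
        for i in range(len(x)):
            p["W1"][j][i] -= lr * delta_hidden[j] * x[i]
    p["b2"] -= lr * delta_out
    return loss

random.seed(0)
n_in, n_hidden = 2, 4
params = {
    "W1": [[random.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)],
    "b1": [0.0] * n_hidden,
    "w2": [random.uniform(-0.5, 0.5) for _ in range(n_hidden)],
    "b2": 0.0,
}
# Toy regression target (an arbitrary choice for illustration)
grid = [-1.0, -0.5, 0.0, 0.5, 1.0]
data = [((a, c), math.sin(a) - 0.5 * c) for a in grid for c in grid]

epoch_losses = []
for epoch in range(200):
    total = sum(train_step(x, y, params, lr=0.05) for x, y in data)
    epoch_losses.append(total / len(data))
print(f"first epoch loss {epoch_losses[0]:.4f}, last epoch loss {epoch_losses[-1]:.4f}")
```

Deep learning frameworks automate the backward pass. The computation they perform follows the same pattern of output error, backward propagation through each layer, and weight update.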

Learning Rate

The learning rate is a crucial parameter in the backpropagation process. It determines the size of the steps taken during the weight adjustment. A high learning rate can speed up progress but may overshoot the minimum or even cause training to diverge. Conversely, a low learning rate makes smaller, more careful adjustments but slows down learning. Finding the right balance is essential for efficient training, which is why the learning rate is usually tuned and is often decreased over the course of training.
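The effect of the learning rate is easy to see on the toy loss L(w) = w², whose gradient is 2w. Each update multiplies w by (1 - 2·lr). Small rates therefore converge slowly and moderate rates converge quickly. Any rate above 1.0 makes |1 - 2·lr| > 1, so the iterates oscillate and grow. The specific rates tried below are arbitrary choices.

```python
def descend(learning_rate, w0=1.0, steps=20):
    # Minimize L(w) = w^2, whose gradient is 2w; the minimum is at w = 0
    w = w0
    for _ in range(steps):
        w -= learning_rate * 2 * w
    return w

for lr in (0.01, 0.1, 0.45, 1.1):
    print(f"lr={lr:<5} final w={descend(lr):.6f}")
```

With these settings, 0.01 is still far from zero after 20 steps, 0.1 and 0.45 get close, and 1.1 diverges.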

By mastering these concepts, you can harness the power of backpropagation to train neural networks effectively. This understanding is vital for anyone looking to delve deeper into the world of deep learning and generative AI.

Backpropagation in Deep Learning

Backpropagation plays a pivotal role in deep learning, including in enhancing the capabilities of generative models. By understanding its function, you can appreciate how it contributes to the creation of new and innovative content.

Role in Generative Models

Efficient Gradient Computation

In generative models, which often have millions or billions of parameters, efficient gradient computation is crucial. Backpropagation computes the gradient of the loss with respect to every parameter in a single backward pass, at a cost that is a small constant multiple of one forward pass, rather than needing a separate computation for each parameter. These gradients give the direction and relative magnitude of the update needed for each parameter, which ensures that the model learns effectively from the data. This efficiency is what makes backpropagation indispensable in training complex generative models.
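Backpropagation gets its efficiency from reverse-mode differentiation. It records the operations during the forward pass, then sweeps backward once, multiplying local derivatives along the way. The `Value` class below is a hypothetical, minimal scalar sketch of that idea that supports only addition, multiplication, and tanh. Production frameworks apply the same principle to tensors.

```python
import math

class Value:
    """A scalar that records how it was computed so gradients can flow backward."""

    def __init__(self, data, parents=(), local_grads=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents          # Values this one was computed from
        self._local_grads = local_grads  # d(self)/d(parent) for each parent

    def __add__(self, other):
        return Value(self.data + other.data, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        return Value(self.data * other.data, (self, other), (other.data, self.data))

    def tanh(self):
        t = math.tanh(self.data)
        return Value(t, (self,), (1.0 - t * t,))

    def backward(self):
        # Topological order: every node appears after the nodes it was computed from
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        # Walk from the output back to the inputs, applying the chain rule once per edge
        for node in reversed(order):
            for parent, local in zip(node._parents, node._local_grads):
                parent.grad += local * node.grad

# One neuron: out = tanh(w1*x1 + w2*x2 + b)
w1, w2, x1, x2, b = Value(0.5), Value(-1.5), Value(2.0), Value(1.0), Value(0.3)
out = (w1 * x1 + w2 * x2 + b).tanh()
out.backward()
print(out.data, w1.grad, w2.grad, b.grad)
```

A single call to `out.backward()` fills in `grad` for every input at once. Each weight's gradient equals tanh'(z) multiplied by the input that weight scales.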

Parameter Updates

Parameter updates are at the heart of model training. Using the gradients computed by backpropagation, you can fine-tune the weights of each layer in the direction that reduces the loss. Repeating these updates over many iterations makes the model steadily more accurate. This continuous refinement is what enables generative models to produce high-quality outputs.

Examples in Generative AI

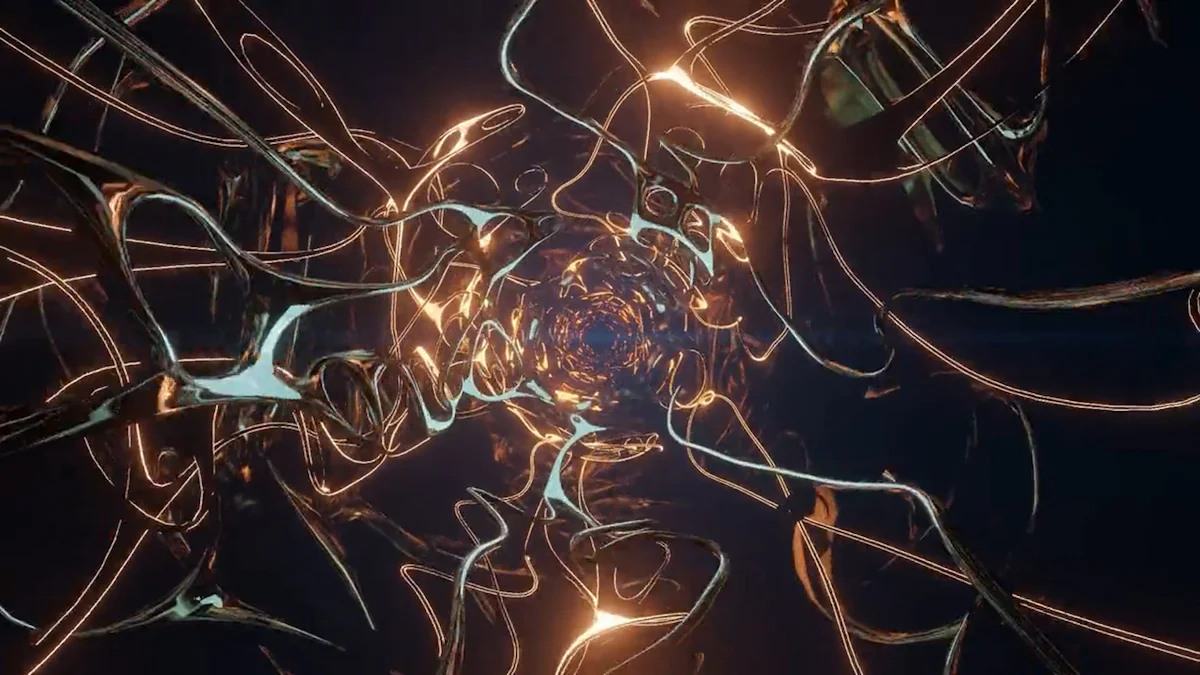

Image Generation

In the realm of image generation, backpropagation is a key component. It allows you to train models that can create realistic images from scratch. By adjusting the model's parameters through backpropagation, you can enhance its ability to generate detailed and lifelike images. This technique is widely used in applications such as creating art, designing virtual environments, and even generating synthetic data for research purposes.

Text Generation

Text generation also benefits significantly from backpropagation. By training models to understand and replicate language patterns, you can generate coherent and contextually relevant text. Backpropagation helps in refining the model's understanding of syntax and semantics, enabling it to produce text that closely resembles human writing. This capability is invaluable in applications like chatbots, content creation, and automated translation services.

Scientific Insight: According to research, backpropagation is essential for training neural networks because it fine-tunes weights using the error propagated backward from the output. This process is crucial for optimizing model performance in both image and text generation tasks.

By mastering backpropagation in deep learning, you can unlock the full potential of generative AI. This understanding empowers you to create models that not only learn from data but also generate innovative and valuable content.

Practical Applications

Backpropagation's versatility extends to numerous practical applications, significantly enhancing the capabilities of neural networks in various fields. By understanding these applications, you can appreciate how backpropagation contributes to advancements in technology and artificial intelligence.

Computer Vision

In the realm of computer vision, backpropagation plays a crucial role in enabling machines to interpret and understand visual data. This capability is essential for tasks like image recognition and object detection.

Image Recognition

Image recognition involves identifying and classifying objects within images. You can train neural networks to recognize patterns and features in visual data using backpropagation. This process reduces errors and improves the accuracy of machine responses, making it possible for systems to identify objects with high precision. Applications of image recognition include facial recognition, medical imaging, and automated tagging in social media platforms.

Object Detection

Object detection goes a step further by not only recognizing objects but also locating them within an image. Backpropagation helps refine the model's ability to detect objects accurately by adjusting the network's parameters based on the gradients of its detection loss. This capability is vital for applications such as autonomous vehicles, where detecting and responding to obstacles in real time is crucial. By leveraging backpropagation, you can improve the accuracy and reliability of object detection systems.

Natural Language Processing

Natural Language Processing (NLP) benefits significantly from backpropagation, allowing machines to understand and generate human language. This understanding is essential for tasks like sentiment analysis and language translation.

Sentiment Analysis

Sentiment analysis involves determining the emotional tone behind a piece of text. By training neural networks with backpropagation, you can improve their ability to analyze and interpret sentiments accurately. This process involves adjusting the network's weights to minimize errors in sentiment classification. Applications of sentiment analysis include monitoring social media trends, customer feedback analysis, and market research.

Language Translation

Language translation requires understanding and converting text from one language to another. Backpropagation enhances the performance of translation models by fine-tuning their parameters based on error propagation. This adjustment process helps make translations more accurate and contextually relevant. You can apply this capability in various fields, including international communication, content localization, and real-time translation services.

Scientific Insight: Research highlights the importance of backpropagation in training neural networks, emphasizing its role in reducing errors and improving outcomes in machine responses. This process is crucial for optimizing model performance in both computer vision and natural language processing tasks.

By exploring these practical applications, you can see how backpropagation drives innovation and efficiency in diverse fields. This understanding empowers you to harness the power of neural networks to solve complex problems and create valuable solutions.

Challenges and Considerations

Computational Complexity

Understanding the computational complexity of backpropagation is crucial for optimizing neural networks. This complexity can impact the efficiency and speed of your models.

Resource Requirements

Backpropagation can require significant computational resources. The backward pass needs the activations stored during the forward pass, so memory use grows with the network's size and depth and with the batch size. Large datasets and complex architectures therefore often call for powerful hardware such as GPUs. Efficient resource management becomes essential to ensure smooth operation and prevent bottlenecks. By investing in suitable hardware and optimizing your code, you can manage these resource requirements effectively.

Optimization Techniques

To tackle computational complexity, you can employ various optimization techniques that let you train models faster and with less computational strain. Mini-batch gradient descent estimates the gradient from a small subset of the data at each step instead of the full dataset. Adaptive learning-rate methods adjust the step size for each parameter automatically. According to research, the backpropagation algorithm offers advantages such as memory efficiency and speed, making it suitable for different network architectures without requiring extensive parameter tuning. By leveraging these techniques, you can optimize your models and achieve better performance.
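The sketch below combines mini-batch gradient descent with an adaptive learning rate. For the adaptive part it uses the Adam update rule, which keeps running averages of the gradient and the squared gradient and applies bias correction. Adam is one widely used choice among several. To keep the example small, the model is a one-variable linear fit rather than a neural network. The hyperparameters and synthetic data are assumptions.

```python
import math
import random

def minibatch_adam(data, batch_size=8, epochs=200, lr=0.05, beta1=0.9, beta2=0.999, eps=1e-8):
    """Fit y = w*x + b with mini-batch gradients and Adam-style adaptive step sizes."""
    data = list(data)                # shuffle a copy, not the caller's list
    params = [0.0, 0.0]              # [w, b]
    m = [0.0, 0.0]                   # running mean of gradients
    v = [0.0, 0.0]                   # running mean of squared gradients
    t = 0
    for _ in range(epochs):
        random.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            w, b = params
            # Mean-squared-error gradient, averaged over the mini-batch only
            errors = [(w * x + b) - y for x, y in batch]
            grads = [2 * sum(e * x for e, (x, _) in zip(errors, batch)) / len(batch),
                     2 * sum(errors) / len(batch)]
            t += 1
            for k in range(2):
                m[k] = beta1 * m[k] + (1 - beta1) * grads[k]
                v[k] = beta2 * v[k] + (1 - beta2) * grads[k] ** 2
                m_hat = m[k] / (1 - beta1 ** t)   # bias correction
                v_hat = v[k] / (1 - beta2 ** t)
                params[k] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return params

random.seed(1)
xs = [i / 10 for i in range(-20, 21)]
data = [(x, 2.0 * x + 1.0 + random.gauss(0, 0.05)) for x in xs]
w, b = minibatch_adam(data)
print(w, b)  # expected to land near 2.0 and 1.0
```

Each update touches only a batch of eight points, and Adam scales each parameter's step by its own gradient history instead of using one global step size.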

Overfitting and Generalization

Overfitting and generalization are critical considerations when training neural networks. Striking the right balance ensures that your models perform well on new, unseen data.

Regularization Methods

Regularization methods help prevent overfitting by adding constraints to the model. Techniques like L1 and L2 regularization introduce penalties for large weights, encouraging simpler models that generalize better. Dropout is another effective method, randomly deactivating neurons during training to prevent reliance on specific features. By incorporating regularization, you can improve your model's ability to generalize and perform well on diverse datasets.
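Two of these regularizers take only a few lines. The sketch shows the L2 penalty and its gradient. L1 would instead add lam * |w| per weight, with gradient lam * sign(w). It also shows inverted dropout, which rescales the surviving activations during training so that nothing needs to change at inference time. The function names, the `lam` and `p_drop` parameters, and the values are hypothetical.

```python
import random

def l2_penalty_and_grad(weights, lam):
    """L2 regularization: lam * sum(w^2) is added to the loss; its gradient is 2 * lam * w."""
    penalty = lam * sum(w * w for w in weights)
    grads = [2 * lam * w for w in weights]
    return penalty, grads

def dropout(activations, p_drop, training=True, rng=random):
    """Inverted dropout: zero each activation with probability p_drop during training
    and scale the survivors by 1 / (1 - p_drop) so the expected value is unchanged."""
    if not training or p_drop == 0.0:
        return list(activations)  # no dropout at inference time
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

penalty, grads = l2_penalty_and_grad([0.5, -2.0, 1.0], lam=0.01)
print(penalty, grads)  # the largest weight gets the largest pull toward zero
print(dropout([1.0] * 10, p_drop=0.5, rng=random.Random(42)))
```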

Cross-Validation

Cross-validation is a valuable tool for assessing your model's performance. By dividing your dataset into multiple subsets, you can train and test your model on different data segments. This process provides a more accurate evaluation of your model's ability to generalize. Cross-validation helps identify potential overfitting and guides you in fine-tuning your model for optimal performance. By using this technique, you can ensure that your models are robust and reliable.
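A minimal k-fold cross-validation loop might look like the sketch below. Several parts are assumptions for illustration: the helper names, the contiguous fold assignment (real data should usually be shuffled first), and the trivial mean-predicting stand-in model. In practice, `fit` would train a network with backpropagation.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, val_indices) for k contiguous folds of nearly equal size."""
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)  # spread the remainder over the first folds
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, val
        start += size

def cross_validate(xs, ys, k, fit, score):
    """Train on k-1 folds, score on the held-out fold, and average over all k folds."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        scores.append(score(model, [xs[i] for i in val_idx], [ys[i] for i in val_idx]))
    return sum(scores) / len(scores)

# Hypothetical stand-in "model": always predict the mean training target
def fit_mean(xs, ys):
    return sum(ys) / len(ys)

def mse_score(model, xs, ys):
    return sum((y - model) ** 2 for y in ys) / len(ys)

xs = list(range(10))
ys = [0.5 * x for x in xs]
print(cross_validate(xs, ys, k=5, fit=fit_mean, score=mse_score))
```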

Scientific Insight: Research highlights the importance of optimization techniques and regularization methods in managing computational complexity and preventing overfitting. These strategies are crucial for enhancing model performance and ensuring efficient training.

By addressing these challenges and considerations, you can optimize your neural networks for better performance and reliability. This understanding empowers you to create models that are both efficient and effective in solving complex problems.

Backpropagation stands as a cornerstone of neural networks, revolutionizing machine learning by enabling efficient training of deep models. You have seen how it fine-tunes weights using gradients of the error, lowering errors and making models more reliable. Although it is often loosely compared to learning by trial and error, it is a precise mathematical procedure rather than a model of how the brain learns, and it is fundamental to optimizing artificial neural networks. As you continue your journey in neural networks and deep learning, exploring backpropagation further will deepen your understanding and open new avenues for innovation in generative AI.
