Understanding Latent Spaces in AI: What You Need to Know

Artificial intelligence (AI) is built from components such as machine learning and neural networks. Among the ideas behind these components, latent spaces are one of the most useful to understand. A latent space is the hidden, usually lower-dimensional space in which a model encodes its data, capturing essential features and patterns. Understanding it helps with data processing and model development, and it becomes more important as AI applications continue to expand.

Defining Latent Spaces

What are Latent Spaces?

Conceptual Overview

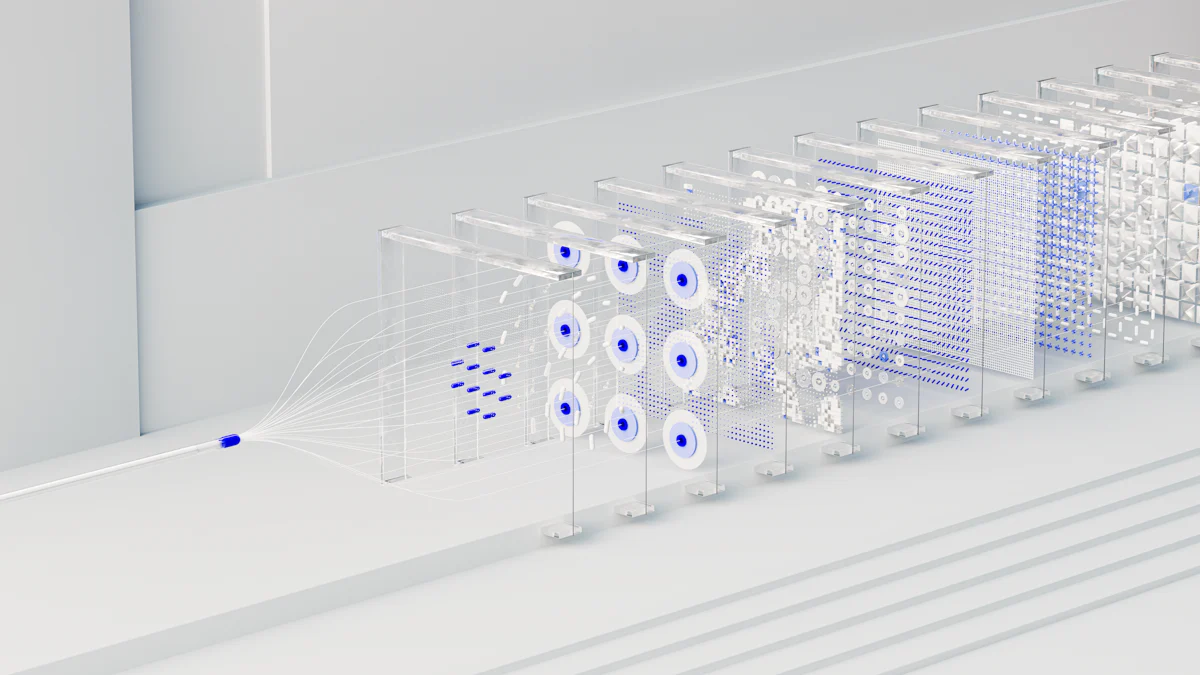

Latent spaces serve as a fundamental concept in artificial intelligence and machine learning. They represent a hidden, multidimensional space where data is encoded in a compressed form. This space captures the essential features and patterns of the input data, allowing AI models to process information more efficiently. By transforming complex data into a simpler representation, latent spaces enable models to understand and manipulate data in ways that are not immediately apparent in the original dataset. This abstraction helps in identifying underlying structures and relationships within the data, which are crucial for various AI applications.

Mathematical Representation

Mathematically, latent spaces can be viewed as a vector space where each point corresponds to a unique set of characteristics derived from the input data. Machine learning algorithms, such as neural networks, learn to map input data to this latent space through training. The process involves adjusting the model's parameters to minimize the difference between the predicted and actual outputs. This mapping allows the model to capture the data's intrinsic properties, facilitating tasks like dimensionality reduction and feature extraction. In essence, latent spaces provide a quantitative framework for representing data in a way that computers can efficiently process and analyze.
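In symbols, and using notation that is assumed here rather than taken from the text above, the mapping and its training objective might be written as follows. Here x_i is an input with D dimensions, z_i is its latent point with d dimensions (typically d < D), f_θ is the learned mapping into the latent space, g_φ is the part of the model that produces a prediction from z_i, y_i is the target output, and ℓ is a per-example loss:

```latex
z_i = f_\theta(x_i), \qquad
\min_{\theta,\,\phi} \; \frac{1}{N} \sum_{i=1}^{N} \ell\bigl(g_\phi(z_i),\, y_i\bigr),
\qquad x_i \in \mathbb{R}^{D},\; z_i \in \mathbb{R}^{d}
```

For a model that reconstructs its own input, the target y_i is simply x_i.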

Role in AI Models

Data Transformation

In AI models, latent spaces play a pivotal role in data transformation. They enable the conversion of high-dimensional data into a lower-dimensional form, making it easier to handle and analyze. This transformation is particularly useful in scenarios where data complexity poses challenges for traditional processing methods. By reducing dimensionality, latent spaces help in preserving the most relevant information while discarding noise and redundancy. This streamlined representation enhances the model's ability to perform tasks such as classification, clustering, and regression with greater accuracy and efficiency.

Feature Extraction

Feature extraction is another critical function of latent spaces in AI models. By encoding data into a latent space, models can identify and isolate the most significant features that contribute to the desired outcome. This process involves selecting attributes that are most informative and relevant to the task at hand. Latent spaces facilitate this by highlighting patterns and correlations that may not be immediately visible in the raw data. As a result, models can focus on the most pertinent aspects of the data, improving their performance and predictive capabilities. This ability to extract meaningful features is essential for developing robust and effective AI solutions across various domains.

How Latent Spaces Work

Understanding how latent spaces function within AI models requires examining the mechanisms that facilitate their operation. These mechanisms primarily involve neural networks and encoding-decoding processes, which transform and interpret data in meaningful ways.

Mechanisms in Neural Networks

Neural networks utilize latent spaces to process and transform data through various layers and functions.

Hidden Layers

Hidden layers in neural networks play a crucial role in forming latent spaces. Each layer processes input data, extracting features and patterns that contribute to the model's understanding. These layers act as intermediaries, transforming raw data into a structured form within the latent space. By doing so, they enable the network to capture complex relationships and nuances in the data, which are essential for tasks like classification and prediction.
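The sketch below shows where such a representation sits in a forward pass. The network is hypothetical (8 inputs, 3 hidden units, 2 outputs) and its weights are random rather than trained, so it only illustrates the data flow: the hidden activation `h` is the latent representation, and the output is computed from `h` alone.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(a):
    return np.maximum(a, 0.0)

# Hypothetical tiny network: 8 inputs -> 3 hidden units -> 2 output scores.
# Weights are random here; in practice they would be learned from data.
W1, b1 = rng.normal(size=(8, 3)), np.zeros(3)
W2, b2 = rng.normal(size=(3, 2)), np.zeros(2)

def forward(x):
    h = relu(x @ W1 + b1)   # hidden activation: the latent representation
    y = h @ W2 + b2         # the output depends on the input only through h
    return h, y

x = rng.normal(size=(5, 8))  # a batch of 5 inputs
h, y = forward(x)
print(h.shape, y.shape)
```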

Key Insight: Inputs of the same class can often be connected by continuous paths in the latent representation space. This reflects the structured nature of these spaces and is relevant to model explainability and robustness.

Activation Functions

Activation functions determine how neurons in a network respond to inputs, influencing the formation of latent spaces. They introduce non-linearity, allowing the network to learn intricate patterns and relationships. By applying activation functions, neural networks can map input data to a latent space that accurately represents its underlying structure. This mapping is vital for the network's ability to generalize from training data to new, unseen data.
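A short check makes the role of non-linearity concrete. Without an activation function, two stacked layers collapse into a single matrix product, so the network can only express linear maps; with `tanh` in between, it cannot be rewritten that way. The layer sizes and random weights below are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
W1 = rng.normal(size=(4, 3))
W2 = rng.normal(size=(3, 2))
x = rng.normal(size=(6, 4))

# Without an activation, two layers are equivalent to the one matrix W1 @ W2.
linear_stack = (x @ W1) @ W2
single_layer = x @ (W1 @ W2)

# With tanh in between, the mapping is no longer a single matrix product.
nonlinear_stack = np.tanh(x @ W1) @ W2

print(np.allclose(linear_stack, single_layer))
print(np.allclose(nonlinear_stack, single_layer))
```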

Encoding and Decoding Processes

Encoding and decoding processes are fundamental to how latent spaces operate, particularly in models designed for data compression and generation.

Autoencoders

Autoencoders are neural networks that learn to encode data into a latent space and then decode it back to an approximation of its original form. The encoder compresses the input data into a lower-dimensional latent space, capturing its essential features. The decoder reconstructs the data from this compressed representation, and training minimizes the difference between the reconstruction and the input. This process not only reduces dimensionality but also highlights the most significant aspects of the data, making it easier to analyze and interpret.

Functionalities: Latent spaces in autoencoders offer dimensionality reduction and data compression, improving performance and interpretability in AI applications.
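The encode-then-decode loop can be sketched with a deliberately simple linear autoencoder trained by plain gradient descent. Everything specific here is an assumption for illustration: the toy data, the two-dimensional latent space, the learning rate, and the number of steps. Practical autoencoders usually add non-linear layers and use a deep learning framework.

```python
import numpy as np

rng = np.random.default_rng(0)
# Toy data: 6-dimensional points generated from 2 hidden factors.
factors = rng.normal(size=(256, 2))
X = factors @ rng.normal(size=(2, 6))

d_in, d_latent = 6, 2
W_enc = 0.1 * rng.normal(size=(d_in, d_latent))   # encoder weights
W_dec = 0.1 * rng.normal(size=(d_latent, d_in))   # decoder weights

def reconstruct(X):
    Z = X @ W_enc          # encode: 6 dimensions -> 2 dimensions
    return Z, Z @ W_dec    # decode: 2 dimensions -> 6 dimensions

def mse(X):
    _, X_hat = reconstruct(X)
    return float(np.mean((X_hat - X) ** 2))

loss_before = mse(X)
lr = 0.01
for _ in range(2000):
    Z, X_hat = reconstruct(X)
    # Gradient of the squared reconstruction error with respect to X_hat,
    # up to a constant factor that is absorbed into the learning rate.
    err = (X_hat - X) / len(X)
    grad_dec = Z.T @ err
    grad_enc = X.T @ (err @ W_dec.T)
    W_dec -= lr * grad_dec
    W_enc -= lr * grad_enc
loss_after = mse(X)
print(loss_before, loss_after)
```

The reconstruction error falls as the encoder and decoder learn to route the data through the two latent coordinates.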

Variational Autoencoders

Variational autoencoders (VAEs) extend the concept of autoencoders by introducing a probabilistic approach to encoding data. VAEs map input data to a latent space characterized by probability distributions rather than fixed points. This approach allows for more flexible and robust data generation, as it can produce diverse outputs by sampling from the latent space. VAEs are particularly useful in generative modeling, where they create new data samples that resemble the original dataset.

Challenges: While latent spaces in VAEs enhance generative capabilities, they also pose challenges in interpretation due to their complexity and nonlinearity.
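Two pieces of a VAE are small enough to sketch directly: sampling a latent point from the predicted distribution, and measuring how far that distribution is from a standard normal prior. The sketch assumes the common choice of a diagonal Gaussian per input, which the text above does not specify, and the `mu` and `log_var` values stand in for the output of a hypothetical encoder.

```python
import numpy as np

rng = np.random.default_rng(0)

def sample_latent(mu, log_var, rng):
    """Reparameterization: z = mu + sigma * eps, with eps drawn from N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """KL divergence from N(mu, diag(sigma^2)) to N(0, I), summed over dimensions."""
    return 0.5 * np.sum(np.exp(log_var) + mu ** 2 - 1.0 - log_var, axis=-1)

# Hypothetical encoder output for one input: a mean and a log-variance
# for each of two latent dimensions.
mu = np.array([0.5, -1.0])
log_var = np.array([0.0, -2.0])

samples = np.stack([sample_latent(mu, log_var, rng) for _ in range(5000)])
kl = kl_to_standard_normal(mu, log_var)
print(samples.mean(axis=0), float(kl))
```

Sampling many times from the same input gives a cloud of latent points around `mu` rather than one fixed point, which is what lets a decoder produce varied outputs.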

Latent spaces serve as a powerful tool in AI, enabling models to transform, compress, and generate data efficiently. By understanding the mechanisms behind latent spaces, one can appreciate their significance in advancing AI technologies.

Applications of Latent Spaces

Latent spaces play a crucial role in various AI applications, particularly in image processing and natural language processing. By transforming complex data into simpler representations, latent spaces enable models to perform tasks more efficiently and effectively.

Image Processing

In the realm of image processing, latent spaces facilitate several advanced techniques that enhance the way images are handled and manipulated.

Image Compression

Image compression benefits significantly from latent spaces. By reducing the dimensionality of image data, latent spaces allow for efficient storage and transmission with little visible loss of quality. This process involves encoding the image into a latent space where only the essential features are retained. The result is a compressed version of the image that maintains its core characteristics while occupying less space, at the cost of some fine detail. This technique proves invaluable in scenarios where bandwidth and storage are limited.

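Example: JPEG compression follows a related principle. It transforms image blocks into a frequency domain with a fixed discrete cosine transform and discards the detail that matters least. The transform is hand-designed rather than learned, but keeping a compact set of coefficients parallels what a learned latent space does.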
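The frequency-domain idea can be sketched on a single 8x8 block. The code builds an orthonormal DCT-II matrix, keeps only the lowest-frequency coefficients, and inverts the transform. It is not a JPEG implementation: quantization tables, colour handling, and entropy coding are all omitted, and the function names and the smooth test block are assumptions for illustration. The transform is also fixed rather than learned, so it is an analogy to a latent space, not an instance of one.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix C, so that C @ C.T is the identity."""
    k = np.arange(n).reshape(-1, 1)   # frequency index (rows)
    i = np.arange(n).reshape(1, -1)   # sample index (columns)
    C = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    C[0, :] = np.sqrt(1.0 / n)        # the constant row has its own scaling
    return C

def compress_block(block, keep):
    """Keep only the keep x keep lowest-frequency DCT coefficients."""
    C = dct_matrix(block.shape[0])
    coeffs = C @ block @ C.T          # 2-D DCT of the block
    mask = np.zeros_like(coeffs)
    mask[:keep, :keep] = 1.0
    return C.T @ (coeffs * mask) @ C  # inverse 2-D DCT

# A smooth 8x8 block: brightness increases from one corner to the other.
ys, xs = np.mgrid[0:8, 0:8]
block = (xs + ys).astype(float)

approx = compress_block(block, keep=4)   # 16 of 64 coefficients kept
exact = compress_block(block, keep=8)    # all coefficients kept
print(float(np.abs(approx - block).max()))
```

For a smooth block like this one, a quarter of the coefficients reproduces the block closely; blocks with sharp edges would lose more.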

Style Transfer

Style transfer represents another fascinating application of latent spaces in image processing. This technique involves applying the artistic style of one image to the content of another, creating a unique blend. Latent spaces enable this by capturing the style and content separately, allowing for their recombination in novel ways. The model encodes both images into a latent space, where it isolates style features from content features. By manipulating these features, the model generates an image that combines the desired elements from both sources.

Insight: Style transfer showcases the power of latent spaces in generating creative and visually appealing results, demonstrating their versatility in artistic applications.
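The text above does not say how style is separated from content. One well-known choice, from the neural style transfer work of Gatys et al., summarizes style as the Gram matrix of a layer's feature maps: it records which channels fire together but not where. The sketch below uses random numbers as a stand-in for real feature maps, which is an assumption for illustration.

```python
import numpy as np

def gram_matrix(features):
    """Map (channels, height, width) features to a (channels, channels) matrix."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (h * w)

rng = np.random.default_rng(0)
feats = rng.normal(size=(4, 6, 6))   # stand-in for one layer's feature maps

# Shuffling pixel positions changes the spatial layout (the "content")
# but leaves the channel co-occurrence statistics (the "style") unchanged.
perm = rng.permutation(36)
shuffled = feats.reshape(4, 36)[:, perm].reshape(4, 6, 6)

print(np.allclose(gram_matrix(feats), gram_matrix(shuffled)))
```

Because the Gram matrix ignores position, a model can match it to one image while matching spatially arranged features to another.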

Natural Language Processing

Latent spaces also play a pivotal role in natural language processing (NLP), where they enhance the understanding and generation of text.

Text Generation

In text generation, latent spaces help models produce coherent and contextually relevant text. By encoding linguistic patterns and structures into a latent space, models can generate new sentences that reflect the style and tone of the input data. This capability is particularly useful in applications like chatbots and content creation, where generating human-like text is essential. The latent space captures the nuances of language, enabling the model to produce diverse and meaningful outputs.

Application: Generative models like GPT-3 map text into learned internal representations, which act as a latent space, and generate text that mimics human writing from them, highlighting their potential in automating content generation.

Sentiment Analysis

Sentiment analysis benefits from latent spaces by improving the accuracy of sentiment detection in text. By encoding text into a latent space, models can identify underlying emotional tones and sentiments more effectively. This process involves mapping words and phrases to a latent space where their semantic relationships are preserved. The model then analyzes these relationships to determine the overall sentiment of the text, whether positive, negative, or neutral.

Functionality: Latent spaces enhance sentiment analysis by capturing subtle emotional cues, making them indispensable in applications like social media monitoring and customer feedback analysis.
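A toy version of this mapping shows the idea. The three-dimensional "embeddings" below are hand-made for illustration and do not come from any trained model; real systems learn vectors with hundreds of dimensions. Sentiment is read off by comparing a vector's cosine similarity to a positive and a negative anchor, which is one simple approach among many.

```python
import numpy as np

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hand-made 3-dimensional "embeddings", invented for illustration only.
embeddings = {
    "great":    np.array([0.9, 0.1, 0.2]),
    "love":     np.array([0.8, 0.2, 0.1]),
    "terrible": np.array([-0.9, 0.1, 0.3]),
    "hate":     np.array([-0.8, 0.3, 0.1]),
}
positive_anchor = (embeddings["great"] + embeddings["love"]) / 2
negative_anchor = (embeddings["terrible"] + embeddings["hate"]) / 2

def sentiment(vec):
    """Label a vector by whichever anchor it is closer to in direction."""
    if cosine(vec, positive_anchor) > cosine(vec, negative_anchor):
        return "positive"
    return "negative"

query = np.array([0.7, 0.0, 0.3])   # hypothetical embedding of a new phrase
print(sentiment(query))
```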

Latent spaces serve as a powerful tool in both image and text processing, enabling AI models to perform complex tasks with greater efficiency and accuracy. By understanding and leveraging latent spaces, developers can unlock new possibilities in AI applications, driving innovation across various domains.

Challenges in Understanding Latent Spaces

Latent spaces, while powerful, present several challenges that researchers and developers must address. These challenges primarily revolve around interpretability and computational complexity.

Interpretability Issues

Black Box Nature

Latent spaces often operate within the "black box" nature of AI models. This term refers to the difficulty in understanding how these models make decisions. AI systems encode data into latent spaces, which can obscure the reasoning behind their outputs. This lack of clarity raises concerns, especially in applications where transparency is crucial. For instance, in healthcare or finance, stakeholders need to trust AI decisions. Without clear insights into how latent spaces influence these decisions, users may hesitate to rely on AI systems.

Ethical Consideration: The opacity of AI decision-making processes could become a legal quagmire. Employment tribunals might question AI-driven hiring decisions due to the inability to explain the rationale behind them.

Lack of Transparency

Transparency remains a significant issue with latent spaces. Users often struggle to interpret the transformations and representations within these spaces. This challenge complicates efforts to ensure that AI models act fairly and ethically. Developers must find ways to make latent spaces more understandable. Doing so will help build trust in AI technologies and ensure compliance with ethical standards.

Philosophical Insight: Ethical discussions on interpretability highlight the need for AI systems to be transparent. This transparency is essential for maintaining trust and accountability in AI applications.

Computational Complexity

Resource Intensive

Latent spaces require substantial computational resources. Training models to map data into these spaces demands significant processing power and memory. This requirement can limit the accessibility of AI technologies, particularly for smaller organizations with limited resources. Efficient algorithms and hardware advancements are necessary to mitigate these resource demands.

Key Insight: Because these models are costly to train and run, organizations should plan their compute budgets carefully. They should also adopt robust security measures and keep their defenses current, so that the resources invested are protected against emerging threats.

Scalability Concerns

Scalability poses another challenge for latent spaces. As datasets grow, the complexity of mapping them into latent spaces increases. This growth can lead to longer processing times and higher costs. Developers must address these scalability issues to ensure that AI models remain effective and efficient as they handle larger volumes of data.

Strategic Approach: Improving scalability involves optimizing algorithms and leveraging advanced hardware. These strategies help manage the increasing demands of larger datasets.

Understanding and addressing these challenges is crucial for advancing the use of latent spaces in AI. By tackling interpretability and computational complexity, developers can enhance the reliability and efficiency of AI models. This progress will enable broader adoption of AI technologies across various industries.

Comparing Latent Spaces with Related Concepts

Latent spaces play a crucial role in artificial intelligence, but they often get compared to other concepts like feature spaces and manifold learning. Understanding these comparisons helps clarify the unique characteristics and applications of latent spaces.

Latent Spaces vs. Feature Spaces

Differences in Representation

Latent spaces and feature spaces both serve as representations of data, yet they differ in their structure and purpose. Latent spaces are usually lower-dimensional spaces, learned by a model, that provide a compressed representation of the original high-dimensional data. They focus on capturing the essential features and patterns, allowing models to process data more efficiently. In contrast, a feature space is the space in which data points are described by their explicit attributes, whether measured directly or engineered by hand. It includes every attribute used to describe the data, often resulting in higher dimensionality.

Key Insight: Latent spaces reduce dimensionality, making data easier to handle, while feature spaces maintain the full complexity of the data.

Use Cases

Latent spaces find their use in tasks that require dimensionality reduction and data compression. They are particularly beneficial in scenarios where simplifying data without losing critical information is essential, such as in image compression or generative modeling. Feature spaces, on the other hand, are used in machine learning models where the complete set of features is necessary for accurate predictions, like in classification tasks.

Example: In a neural network, latent spaces help in encoding data into a simpler form, whereas feature spaces are used to train models with all available data attributes.

Latent Spaces vs. Manifold Learning

Conceptual Differences

While both latent spaces and manifold learning deal with data representation, they approach it differently. Latent spaces provide an abstract, lower-dimensional space that captures the similarities and differences in data. They focus on compressing data into a form that retains its essential characteristics. Manifold learning, however, assumes that high-dimensional data lies on or near a lower-dimensional manifold and seeks an embedding in which similar items are positioned closer together. The coordinates on that manifold play the role of latent variables.

Comparative Insight: Latent spaces emphasize data compression, whereas manifold learning focuses on preserving the geometric relationships between data points.

Applications

Latent spaces are widely used in applications that require efficient data transformation and generation, such as autoencoders and variational autoencoders. These models leverage latent spaces to encode and decode data, facilitating tasks like image reconstruction and text generation. Manifold learning, on the other hand, is applied in scenarios where understanding the intrinsic geometry of data is crucial, such as in dimensionality reduction techniques like t-SNE and Isomap.

Application Example: Variational autoencoders use latent spaces to generate new data samples, while manifold learning techniques help visualize complex data structures in a reduced form.

By comparing latent spaces with related concepts like feature spaces and manifold learning, one gains a deeper understanding of their unique roles and applications in AI. This knowledge aids in selecting the appropriate approach for specific tasks, enhancing the effectiveness of AI models.

Future Directions in Latent Space Research

Improving Interpretability

Explainable AI

Researchers aim to enhance the interpretability of latent spaces through Explainable AI (XAI) techniques. These methods strive to make AI models more transparent and understandable. By providing insights into how models process data within latent spaces, XAI helps users trust AI systems. Techniques such as embedding methods, including t-SNE, allow for better visualization of latent spaces. These methods help in understanding the dimensionality and structure of data representations. The MMGN model, which uses implicit neural networks, incorporates explainability methods to improve understanding of latent representations. This approach enhances the contextual information within latent spaces, impacting model performance positively.

Visualization Techniques

Visualization techniques play a crucial role in interpreting latent spaces. They enable users to explore and understand complex data structures interactively. Visual analytics frameworks have been developed to facilitate this exploration. These frameworks allow users to interact with latent spaces, providing a clearer picture of the data's underlying patterns. Clustering and linear or nonlinear methods estimate similarity between latent representations, aiding in visualization. By employing these techniques, researchers can uncover hidden relationships within data, making latent spaces more accessible and comprehensible.

Enhancing Efficiency

Optimization Algorithms

Optimization algorithms are essential for improving the efficiency of latent space operations. These algorithms streamline the process of mapping data into latent spaces, reducing computational demands. By optimizing the parameters of AI models, these algorithms enhance performance and speed. They ensure that models can handle large datasets efficiently, making latent spaces more practical for real-world applications. Researchers continue to develop new optimization techniques to address the growing complexity of AI models and their latent spaces.

Hardware Advancements

Advancements in hardware technology significantly impact the efficiency of latent space research. Modern hardware accelerates the processing of complex computations required for latent space operations. High-performance computing resources, such as GPUs and TPUs, enable faster training and inference of AI models. These advancements reduce the time and cost associated with mapping data into latent spaces. As hardware technology evolves, it will further enhance the capabilities of AI models, making latent spaces more efficient and accessible for various applications.

Practical Implications for AI Development

Impact on AI Model Design

Model Architecture

AI model architecture significantly benefits from understanding latent spaces. By incorporating latent spaces, developers can design models that efficiently process and analyze data. Latent spaces allow models to capture essential features, reducing the complexity of data representation. This simplification leads to more streamlined architectures, which can improve performance and reduce computational costs. For instance, models can identify anomalies by learning typical patterns within the data in the latent space. These anomalies might indicate potential errors or outliers, enhancing the model's robustness.
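Anomaly detection of this kind can be sketched with reconstruction error: fit a low-dimensional latent space to typical data, then flag points that the space cannot reconstruct well. The example uses a one-dimensional PCA subspace as a linear stand-in for a learned latent space, and the data, the 99th-percentile threshold, and the test points are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
# "Normal" data lies near a 1-D line in 3-D space (an invented pattern).
t = rng.normal(size=(300, 1))
direction = np.array([[1.0, 2.0, -1.0]])
normal = t @ direction + 0.05 * rng.normal(size=(300, 3))

mean = normal.mean(axis=0)
_, _, Vt = np.linalg.svd(normal - mean, full_matrices=False)
basis = Vt[:1]                        # 1-D latent space fitted to normal data

def reconstruction_error(x):
    z = (x - mean) @ basis.T          # encode into the latent space
    x_hat = z @ basis + mean          # decode back to the original space
    return np.linalg.norm(x - x_hat, axis=-1)

# Flag anything reconstructed worse than 99% of the normal data.
threshold = np.percentile(reconstruction_error(normal), 99)

typical = np.array([[0.5, 1.0, -0.5]])   # follows the usual pattern
outlier = np.array([[0.5, -1.0, 2.0]])   # breaks the usual pattern
print(reconstruction_error(typical)[0] > threshold,
      reconstruction_error(outlier)[0] > threshold)
```

The threshold is a design choice: a lower percentile catches more anomalies but also flags more ordinary points.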

Training Techniques

Training techniques also evolve with the integration of latent spaces. Models trained with latent spaces can learn more effectively by focusing on the most relevant data features. This focus reduces training time and enhances model accuracy. Developers can employ techniques like dimensionality reduction to simplify data, making it easier for models to learn. Additionally, latent spaces help in identifying data points that deviate from expected patterns, aiding in anomaly detection. This capability proves valuable in applications where detecting outliers or fraudulent activities is crucial.

Influence on AI Applications

Industry Use Cases

Latent spaces have a profound influence on various industry applications. In sectors like finance and healthcare, AI models utilize latent spaces to improve decision-making processes. For example, in finance, models can detect fraudulent transactions by analyzing data patterns in latent spaces. In healthcare, latent spaces help in identifying anomalies in medical data, leading to early diagnosis and treatment. As organizations embrace AI capabilities, such as Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG) systems, latent spaces play a pivotal role in enhancing these technologies.

Ethical Considerations

Ethical considerations become increasingly important as AI applications expand. The use of latent spaces raises questions about transparency and fairness in AI decision-making. Developers must ensure that models using latent spaces operate ethically and do not perpetuate biases. Transparency in how latent spaces influence model outputs is essential for building trust with users. Additionally, addressing the hidden security gaps in latent spaces is crucial to prevent potential misuse or exploitation. By prioritizing ethical considerations, developers can create AI systems that are both effective and responsible.

Latent spaces hold immense importance in AI, serving as a foundation for various functionalities such as dimensionality reduction, feature extraction, and generative modeling. They simplify complex data, enhancing the performance and interpretability of machine learning models. As AI continues to evolve, the exploration of latent spaces will likely lead to more efficient and secure applications. Researchers must focus on improving interpretability and addressing security challenges. By doing so, they can unlock latent spaces' full potential, paving the way for innovative advancements in AI technology.
