Understanding Overfitting in Generative AI Models

Overfitting in AI happens when a model fits its training data too closely, picking up noise and quirks along with the real patterns, so it performs well on that data but poorly on new data. This limits the model's ability to make accurate predictions beyond its initial dataset. Generative AI models, which create new content such as images or text, play a significant role in AI advancements. However, they can also overfit, producing outputs that stay too close to their training examples. Addressing overfitting is crucial to ensure these models generate useful, generalized results and support genuine progress in AI research.

The Problem of Overfitting in AI

Overfitting in AI can be a real headache. It happens when your model gets too cozy with the training data, learning every little detail instead of grasping the bigger picture. This section dives into why overfitting occurs and how it affects AI models.

High Model Complexity

Explanation of Model Complexity

Model complexity refers to how flexible your AI model is: roughly, how many parameters it has and how intricate a function it can represent. Think of it like a maze with many twists and turns. The more complex the model, the more it can capture the nuances of the training data. But there's a catch. If the model becomes too complex, it starts memorizing the data instead of understanding it.

Scientific Research Findings: Studies show that high model complexity can lead to overfitting, where the model memorizes the training data instead of generalizing to new data.

How Complexity Leads to Overfitting

When your model is too complex, it fits the training data like a glove. It learns every quirk and anomaly, which sounds great until you realize it can't handle new data. This is like acing a practice test but failing the real exam. The model struggles to make accurate predictions on unseen data, defeating the purpose of machine learning.
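To see this effect concretely, here is a minimal sketch using NumPy polynomial fits, where the polynomial degree stands in for model complexity. The target function, noise level, and sample sizes are arbitrary assumptions chosen for illustration.

```python
import numpy as np

def make_data(n, rng):
    # Hypothetical data: a smooth sine curve plus Gaussian noise.
    x = rng.uniform(-1.0, 1.0, size=n)
    y = np.sin(np.pi * x) + rng.normal(scale=0.2, size=n)
    return x, y

def mse(y_true, y_pred):
    return float(np.mean((y_true - y_pred) ** 2))

def train_test_error(degree, x_train, y_train, x_test, y_test):
    # A higher degree means more coefficients, i.e. a more complex model.
    coeffs = np.polyfit(x_train, y_train, deg=degree)
    train_err = mse(y_train, np.polyval(coeffs, x_train))
    test_err = mse(y_test, np.polyval(coeffs, x_test))
    return train_err, test_err

rng = np.random.default_rng(0)
x_train, y_train = make_data(15, rng)   # small training set
x_test, y_test = make_data(500, rng)    # unseen data from the same source

for degree in (1, 3, 12):
    train_err, test_err = train_test_error(degree, x_train, y_train, x_test, y_test)
    print(f"degree={degree:2d}  train MSE={train_err:.3f}  test MSE={test_err:.3f}")
```

Typically the degree-12 fit drives training error close to zero while its test error grows, which is the memorization pattern described above; the exact numbers depend on the random seed.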

Insufficient Data

Challenges of Limited Data

Limited data is another culprit behind overfitting in AI. Imagine trying to learn a language with only a handful of phrases. You'd struggle to communicate effectively. Similarly, when your model has access to only a small dataset, it can't learn the underlying patterns well. It ends up memorizing the few examples it has, leading to overfitting.

Scientific Research Findings: Overfitting occurs when a model does not accurately capture the underlying structure of the data, fitting the training data too well and not generalizing to new data.

Impact on Model Performance

With insufficient data, your model's performance takes a hit. It might perform brilliantly on the training set but falter when faced with new challenges. This inconsistency makes it unreliable for real-world applications. You want your model to be like a seasoned traveler, ready to adapt to any situation, not just the familiar ones.
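The same kind of toy setup can show why more data helps. This sketch keeps the model fixed (a degree-9 polynomial, an arbitrary choice) and averages the gap between test and training error over several random datasets of each size; the data source is a hypothetical noisy sine curve.

```python
import numpy as np

def sample(n, rng):
    # Hypothetical data source: sin(pi * x) plus Gaussian noise.
    x = rng.uniform(-1.0, 1.0, size=n)
    return x, np.sin(np.pi * x) + rng.normal(scale=0.2, size=n)

def average_gap(n_train, degree=9, trials=20, seed=0):
    """Mean of (test MSE - train MSE) for a fixed-degree polynomial model."""
    rng = np.random.default_rng(seed)
    gaps = []
    for _ in range(trials):
        x_tr, y_tr = sample(n_train, rng)
        x_te, y_te = sample(1000, rng)
        coeffs = np.polyfit(x_tr, y_tr, deg=degree)
        train_mse = np.mean((y_tr - np.polyval(coeffs, x_tr)) ** 2)
        test_mse = np.mean((y_te - np.polyval(coeffs, x_te)) ** 2)
        gaps.append(test_mse - train_mse)
    return float(np.mean(gaps))

for n in (12, 50, 300):
    print(f"n_train={n:3d}  average test-train gap={average_gap(n):.3f}")
```

With only a dozen points the flexible model can nearly interpolate the noise, so the gap is large; with hundreds of points the same model has to follow the underlying curve instead.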

Noise in the Data

Definition of Data Noise

Data noise refers to random errors or variations in the dataset. It's like static on a radio, making it hard to hear the music. In the context of AI, noise can confuse the model, leading it to learn patterns that don't actually exist.

Effects of Noise on Model Accuracy

When your model picks up on noise, it starts making decisions based on irrelevant information. This reduces its accuracy and reliability. You want your model to focus on the melody, not the static. By addressing noise, you can help your model make more accurate predictions and avoid the pitfalls of overfitting.

Scientific Research Findings: Too much noisy data affects the model's ability to distinguish relevant information from noise, contributing to overfitting.
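Label noise is an easy case to simulate. In this sketch, assuming two synthetic Gaussian clusters with 20% of training labels flipped at random, a 1-nearest-neighbor classifier memorizes every noisy label, while a 15-neighbor majority vote smooths over them. Accuracy on unseen points is measured against the clean labels.

```python
import numpy as np

def make_data(n, flip_rate, rng):
    """Two Gaussian clusters; returns features, noisy labels, and clean labels."""
    y_clean = rng.integers(0, 2, size=n)
    # Cluster centers at (1.5, 1.5) for class 1 and (-1.5, -1.5) for class 0.
    centers = np.where(y_clean[:, None] == 1, 1.5, -1.5)
    X = rng.normal(size=(n, 2)) + centers
    flip = rng.random(n) < flip_rate          # which labels get corrupted
    y_noisy = np.where(flip, 1 - y_clean, y_clean)
    return X, y_noisy, y_clean

def knn_predict(X_train, y_train, X_query, k):
    """Majority vote among the k nearest training points (odd k avoids ties)."""
    dists = ((X_query[:, None, :] - X_train[None, :, :]) ** 2).sum(axis=2)
    nearest = np.argsort(dists, axis=1)[:, :k]
    return (y_train[nearest].mean(axis=1) > 0.5).astype(int)

rng = np.random.default_rng(0)
X_tr, y_tr, _ = make_data(300, flip_rate=0.2, rng=rng)
X_te, _, y_te_clean = make_data(1000, flip_rate=0.2, rng=rng)

for k in (1, 15):
    train_acc = np.mean(knn_predict(X_tr, y_tr, X_tr, k) == y_tr)
    test_acc = np.mean(knn_predict(X_tr, y_tr, X_te, k) == y_te_clean)
    print(f"k={k:2d}  train acc={train_acc:.2f}  test acc (clean labels)={test_acc:.2f}")
```

With k=1 the training accuracy is perfect because each point is its own nearest neighbor, noisy label included; the larger neighborhood generally does better on new data.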

Data Leakage

What is Data Leakage?

Data leakage occurs when your model gains access, during training, to information that will not be available when it makes real predictions, such as examples from the test set or features derived from the target itself. Imagine you're taking a test, and someone slips you the answers beforehand. That's data leakage in a nutshell. It gives your model an unfair advantage, so it scores exceptionally well during evaluation but stumbles when faced with genuinely new data. This sneaky issue can creep in through various channels, such as when test data accidentally gets mixed with training data or when features that would be unavailable at prediction time are used.

Scientific Research Findings: Overfitting in AI often stems from models memorizing training data rather than genuinely learning from it. Data leakage exacerbates this by providing extra information that the model shouldn't have, leading to overfitting.

Consequences of Data Leakage in Training

When data leakage occurs, your model becomes like a student who aces practice exams but struggles with real-world tests. It learns patterns that don't exist outside the training environment, resulting in poor generalization to new data. This undermines the very purpose of machine learning, which is to create models that can adapt and perform well in diverse situations.

The consequences of data leakage are significant:

False Confidence: Your model might appear highly accurate during training and validation, giving you a false sense of confidence in its capabilities.

Poor Real-World Performance: Once deployed, the model may fail to deliver reliable results, as it hasn't truly learned the underlying patterns of the data.

Wasted Resources: Time and resources spent on developing and training the model go to waste if it can't perform effectively in real-world applications.

Scientific Research Findings: Overfitting results in high variance models that excel with training data but falter with test data. Data leakage contributes to this undesirable behavior by skewing the model's learning process.

To combat data leakage, you need to ensure strict separation between training and test datasets. Regularly review your data handling processes to prevent accidental leaks. By doing so, you help your model learn genuinely and improve its ability to generalize, ultimately enhancing its performance in real-world scenarios.
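One common leak is computing preprocessing statistics, such as the mean and standard deviation used for scaling, on the full dataset before splitting it. The sketch below, assuming plain NumPy arrays with one row per example, splits first, fits the scaling on training rows only, and includes a simple check for examples duplicated across the two splits. The function names are hypothetical.

```python
import numpy as np

def split_then_scale(X, test_fraction=0.2, seed=0):
    """Split first, then compute scaling statistics from the training rows only."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(X))
    n_test = int(len(X) * test_fraction)
    test_idx, train_idx = idx[:n_test], idx[n_test:]
    X_train, X_test = X[train_idx], X[test_idx]
    # Statistics come from training data only; the test set reuses them unchanged.
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0) + 1e-12  # guard against division by zero
    return (X_train - mean) / std, (X_test - mean) / std, train_idx, test_idx

def overlapping_rows(X_train, X_test):
    """Count test rows that also appear verbatim in the training rows."""
    train_rows = {row.tobytes() for row in X_train}
    return sum(row.tobytes() in train_rows for row in X_test)

rng = np.random.default_rng(0)
X = rng.normal(loc=5.0, scale=2.0, size=(200, 3))
X_train, X_test, train_idx, test_idx = split_then_scale(X)
# A nonzero count here would signal duplicated examples across the splits.
print(overlapping_rows(X[train_idx], X[test_idx]))
```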

Introduction to Generative AI Models

Generative AI models are like the artists of the AI world. They create new content, whether it's images, text, or even music. These models have become a cornerstone in the field of artificial intelligence, pushing the boundaries of what's possible.

Overview of Generative Models

Types of Generative Models

You might wonder what types of generative models exist. Well, there are several, each with its unique capabilities:

Generative Adversarial Networks (GANs): These models consist of two parts, a generator and a discriminator, trained against each other so that the generator learns to create realistic data.

Variational Autoencoders (VAEs): VAEs are great for generating new data points by learning the underlying distribution of the input data.

Autoregressive Models: These models predict the next data point in a sequence, making them ideal for tasks like text generation.

Each type has its strengths, and choosing the right one depends on your specific needs.
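The idea behind autoregressive models can be shown with a deliberately tiny example: a character-level bigram model that predicts each character from the previous one. This is a minimal sketch, not how modern text generators are built (they condition on long contexts using neural networks), but the generate-one-step-at-a-time loop is the same.

```python
import random
from collections import Counter, defaultdict

def fit_bigram(text):
    """Count how often each character follows each other character."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start, length, seed=0):
    """Sample one character at a time, each conditioned on the previous one."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])
        if not followers:  # no observed continuation: stop early
            break
        chars, weights = zip(*followers.items())
        out.append(rng.choices(chars, weights=weights, k=1)[0])
    return "".join(out)

model = fit_bigram("the cat sat on the mat")
print(generate(model, "t", 20))
```

Trained on a tiny corpus like this, the model can only echo character pairs it has already seen, a small-scale picture of the overfitting problem discussed earlier.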

Applications in AI

Generative models have found applications in various fields. In healthcare, they help generate synthetic medical images for research. In entertainment, they create realistic characters and scenes for movies and video games. Even in fashion, designers use them to generate new clothing designs. The possibilities are endless, and these models continue to inspire innovation across industries.

Role of Generative Models in AI

Enhancing Creativity and Innovation

Generative models play a crucial role in enhancing creativity and innovation. They allow you to explore new ideas and concepts without the constraints of traditional methods. Imagine having a tool that can generate countless variations of a design, helping you find the perfect one. That's the power of generative AI.

Case Study: Microsoft AI researchers once accidentally exposed over 38TB of private data while sharing an AI dataset. This incident highlights the importance of securely managing data when using generative models.

Use Cases in Various Industries

Generative models have made their mark in numerous industries:

Healthcare: They assist in creating synthetic data for training medical models, improving diagnostic accuracy.

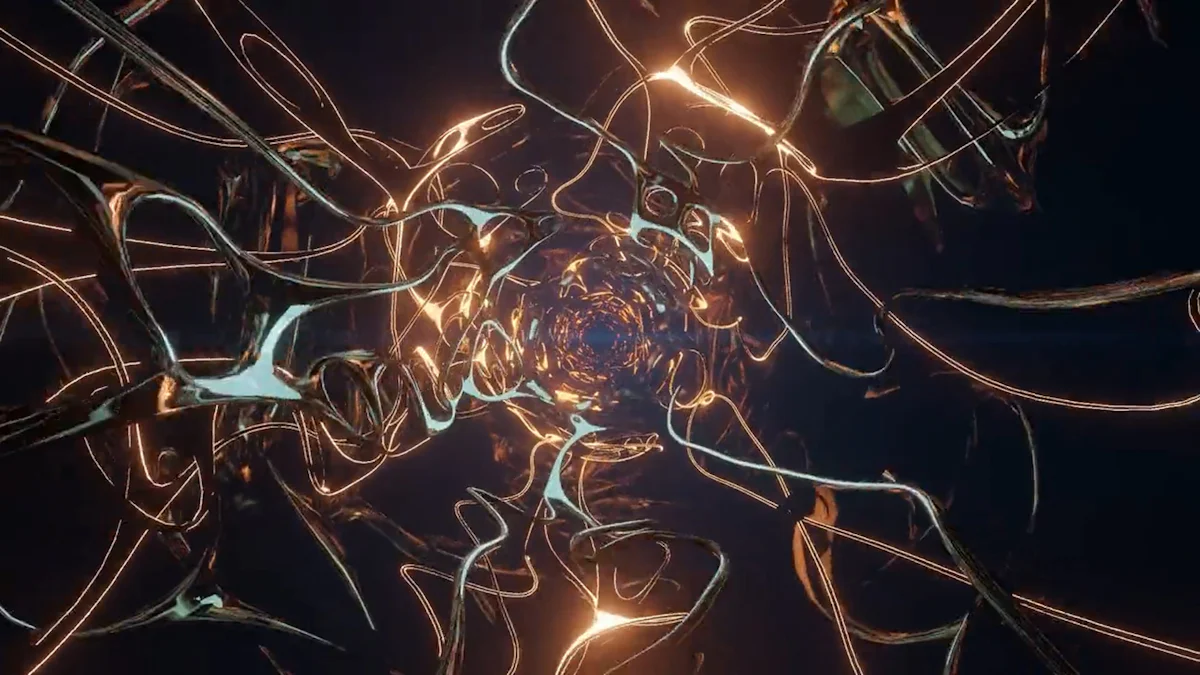

Entertainment: Filmmakers use them to generate realistic special effects and animations.

Fashion: Designers leverage them to create innovative clothing patterns and styles.

These models not only streamline processes but also open up new avenues for creativity and problem-solving. As you explore the world of generative AI, you'll discover how these models can transform your industry and drive progress.

Addressing Overfitting with GANs

Generative Adversarial Networks, or GANs, have emerged as a useful tool in the fight against overfitting in AI, mainly because the synthetic data they generate can enlarge and diversify the training sets of other models. They offer innovative solutions to some of the challenges faced by generative models. Let's dive into how GANs work and why they can be so effective.

What are GANs?

GANs are a type of generative model that consists of two neural networks: a generator and a discriminator. These two networks engage in a game-like scenario where the generator creates data, and the discriminator evaluates it. This dynamic helps GANs produce highly realistic outputs.

Structure and Function of GANs

Generator: This network's job is to create new data instances. It takes random noise as input and, over the course of training, learns to turn that noise into data that resembles the training set.

Discriminator: This network acts as a critic. It evaluates the data produced by the generator and distinguishes between real and fake data. The discriminator's feedback helps the generator improve its outputs.
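The generator/discriminator interplay is usually expressed as two loss functions. Here is a minimal NumPy sketch, assuming `d_real` and `d_fake` are the discriminator's output probabilities on a batch of real and generated samples; the generator loss shown is the commonly used non-saturating variant.

```python
import numpy as np

EPS = 1e-12  # guards against log(0)

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: push D(real) toward 1 and D(fake) toward 0."""
    return float(-np.mean(np.log(d_real + EPS) + np.log(1.0 - d_fake + EPS)))

def generator_loss(d_fake):
    """Non-saturating generator loss: push D(G(z)) toward 1."""
    return float(-np.mean(np.log(d_fake + EPS)))

# A discriminator that cannot tell real from fake outputs 0.5 everywhere.
half = np.full(8, 0.5)
print(discriminator_loss(half, half), generator_loss(half))  # about 1.386 and 0.693
```

When the discriminator is confused (outputs of 0.5), its loss sits at 2 log 2; when it confidently rejects generated samples, the generator's loss grows, and that gradient is the "feedback" the generator learns from.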

Scientific Research Findings: GANs are particularly effective in unsupervised learning and representation learning, making them versatile tools for various applications, including image-to-image translation and data augmentation.

Advantages of Using GANs

Realism: GANs excel at producing realistic data, which is crucial for applications like video game development and content creation.

Versatility: They can be used across different domains, from healthcare to entertainment, due to their ability to handle diverse data types.

Innovation: By generating synthetic data, GANs open up new possibilities for creativity and problem-solving.

GANs for Synthetic Data Generation

One of the standout features of GANs is their ability to generate synthetic data. This capability is invaluable for training AI models, especially when real-world data is scarce or sensitive.

Process of Generating Synthetic Data

Training: The generator turns random noise into new data points. It never sees the real dataset directly; it learns to mimic it through the discriminator's feedback.

Evaluation: The discriminator assesses these data points, providing feedback to refine the generator's output.

Iteration: This process repeats, with the generator improving its ability to produce realistic data over time.
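The training, evaluation, and iteration steps above can be sketched end to end on a toy one-dimensional problem. Everything here is an assumption chosen for readability: real data drawn from a normal distribution with mean 3, a generator that only learns a shift `b`, a logistic-regression discriminator, and hand-derived gradients in place of a deep-learning framework.

```python
import numpy as np

def sigmoid(s):
    return 1.0 / (1.0 + np.exp(-s))

def train_toy_gan(steps=3000, batch=64, lr=0.05, seed=0):
    """Alternating GAN updates on a 1-D toy problem.

    Real data ~ Normal(3, 1). Generator: G(z) = z + b with z ~ Normal(0, 1).
    Discriminator: D(x) = sigmoid(w * x + c).
    """
    rng = np.random.default_rng(seed)
    b, w, c = 0.0, 0.0, 0.0
    history = []
    for _ in range(steps):
        # Evaluation: the discriminator compares a real batch with a generated batch.
        x_real = rng.normal(3.0, 1.0, size=batch)
        x_fake = rng.normal(0.0, 1.0, size=batch) + b
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        # Gradient of -mean(log D(real)) - mean(log(1 - D(fake))) w.r.t. w and c.
        grad_w = np.mean(-(1.0 - d_real) * x_real) + np.mean(d_fake * x_fake)
        grad_c = np.mean(-(1.0 - d_real)) + np.mean(d_fake)
        w -= lr * grad_w
        c -= lr * grad_c

        # Training: the generator uses the discriminator's feedback.
        x_fake = rng.normal(0.0, 1.0, size=batch) + b
        d_fake = sigmoid(w * x_fake + c)
        # Gradient of -mean(log D(G(z))) w.r.t. b (dG/db = 1, d logit/dx = w).
        grad_b = np.mean(-(1.0 - d_fake) * w)
        b -= lr * grad_b
        history.append(b)  # Iteration: track the generator's shift over time
    return b, history

b, history = train_toy_gan()
print(f"learned shift b = {b:.2f} (real mean is 3.0)")
```

Over training, `b` should drift toward the real mean of 3, but GAN training is notoriously unstable, and even this toy version can overshoot and oscillate before settling. Real GANs replace both pieces with neural networks trained by automatic differentiation.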

Scientific Research Findings: In healthcare, GANs have been used to generate synthetic medical images, aiding in AI model training and improving diagnostic accuracy.

Benefits of Synthetic Data in Training

Data Augmentation: Synthetic data increases the diversity of training datasets, helping models generalize better and reducing the risk of overfitting.

Privacy: By using synthetic data, you can protect sensitive information while still training effective models.

Cost-Effectiveness: Generating synthetic data can be more cost-effective than collecting and labeling large amounts of real-world data.
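When you add synthetic data, it belongs in the training split only; evaluating on synthetic samples would reintroduce the leakage problem described earlier. In this sketch, a per-class Gaussian fitted to the training data is a hypothetical stand-in for a trained GAN, and `augment_training_set` is an illustrative name, not a library function.

```python
import numpy as np

def fit_gaussian_stand_in(X, y):
    """Per-class mean and covariance: a hypothetical stand-in for a trained generator."""
    return {label: (X[y == label].mean(axis=0), np.cov(X[y == label], rowvar=False))
            for label in np.unique(y)}

def augment_training_set(X_train, y_train, per_class, seed=0):
    """Append synthetic samples to the training split only."""
    rng = np.random.default_rng(seed)
    model = fit_gaussian_stand_in(X_train, y_train)
    X_parts, y_parts = [X_train], [y_train]
    for label, (mean, cov) in model.items():
        X_parts.append(rng.multivariate_normal(mean, cov, size=per_class))
        y_parts.append(np.full(per_class, label))
    return np.vstack(X_parts), np.concatenate(y_parts)

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 2)) + np.repeat([[0.0, 0.0], [2.0, 2.0]], 50, axis=0)
y = np.repeat([0, 1], 50)
idx = rng.permutation(100)
train_idx, test_idx = idx[:80], idx[80:]
X_aug, y_aug = augment_training_set(X[train_idx], y[train_idx], per_class=100)
# X[test_idx], y[test_idx] stay untouched: real data only, for evaluation.
print(X_aug.shape)
```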

Incorporating GANs into your AI projects can significantly enhance model performance and reliability. By addressing overfitting and expanding the possibilities of data generation, GANs pave the way for more robust and innovative AI solutions.

Increasing Dataset Size and Diversity

Importance of Diverse Datasets

How diversity improves model robustness

You might wonder why dataset diversity matters so much. Well, think of it like this: a diverse dataset is like a well-rounded education. It prepares your AI model to handle a wide range of scenarios. When your dataset includes various examples, your model learns to recognize patterns that are common across different situations. This makes it more robust and less likely to overfit.

A diverse dataset helps your model generalize better. It can adapt to new data more effectively, just like a student who excels in different subjects. By exposing your model to a variety of data points, you ensure it doesn't get stuck memorizing specific details. Instead, it learns to understand the bigger picture.

Strategies for increasing dataset diversity

So, how do you increase dataset diversity? Here are some strategies you can try:

Collect Data from Multiple Sources: Gather data from different environments, demographics, or conditions. This broadens the range of examples your model encounters.

Use Synthetic Data: Generate synthetic data using techniques like GANs. This can fill gaps in your dataset and introduce new variations.

Incorporate Data from Different Time Periods: Include data from various time frames to capture changes and trends over time.

By implementing these strategies, you can create a more diverse dataset that enhances your model's performance and reduces the risk of overfitting.
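Before collecting more data, it helps to measure how your current data is spread across sources or conditions. This sketch assumes each example carries a group label (for instance, the source it was collected from) and flags any group below an arbitrary 10% share; the labels themselves are hypothetical.

```python
from collections import Counter

def audit_coverage(groups, min_share=0.1):
    """Return each group's share of the data and the groups below min_share."""
    counts = Counter(groups)
    total = sum(counts.values())
    shares = {group: n / total for group, n in counts.items()}
    under_represented = sorted(g for g, share in shares.items() if share < min_share)
    return shares, under_represented

# Hypothetical source labels, one per training example.
sources = ["web"] * 70 + ["clinic"] * 25 + ["mobile"] * 5
shares, flagged = audit_coverage(sources)
print(shares)   # {'web': 0.7, 'clinic': 0.25, 'mobile': 0.05}
print(flagged)  # ['mobile']
```

Groups that come back flagged are natural candidates for targeted collection or synthetic data.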

Techniques for Expanding Datasets

Data augmentation methods

Data augmentation is like giving your model a workout. It involves creating new data points by modifying existing ones. This process helps your model become more flexible and adaptable. Here are some common data augmentation methods:

Rotation and Flipping: Rotate or flip images to create new perspectives.

Scaling and Cropping: Adjust the size or crop parts of images to introduce variety.

Adding Noise: Introduce random noise to data to make your model more resilient to variations.

These techniques expand your dataset without the need for additional data collection. They help your model learn from a wider range of examples, improving its ability to generalize.
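Here is a minimal NumPy sketch of the augmentations listed above for a single image stored as an array with values in [0, 1]. The crop size and noise level are arbitrary assumptions, rotation is limited to multiples of 90 degrees, and scaling is left out to keep the example short; dedicated image libraries offer richer versions of all of these.

```python
import numpy as np

def augment(image, rng, crop=24, noise_std=0.05):
    """Return one randomly augmented copy of an H x W (x C) image in [0, 1]."""
    out = image
    if rng.random() < 0.5:
        out = np.fliplr(out)                       # horizontal flip
    out = np.rot90(out, k=rng.integers(0, 4))      # rotate by 0, 90, 180 or 270 degrees
    h, w = out.shape[:2]
    top = rng.integers(0, h - crop + 1)
    left = rng.integers(0, w - crop + 1)
    out = out[top:top + crop, left:left + crop]    # random crop
    out = out + rng.normal(scale=noise_std, size=out.shape)  # additive Gaussian noise
    return np.clip(out, 0.0, 1.0)

rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))  # stand-in for a real RGB image
variants = [augment(image, rng) for _ in range(4)]
print([v.shape for v in variants])
```

Each call produces a different variant of the same source image, so a training loop can generate fresh examples on the fly instead of storing them.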

Leveraging GANs for dataset expansion

Generative Adversarial Networks (GANs) offer a powerful way to expand your dataset. They can generate new, synthetic data that supplements your original dataset. This increases both the size and diversity of your data, which is crucial for reducing overfitting.

Scientific Research Findings: GANs can improve data quality, reduce human bias, and generate diverse data. They create realistic data that enhances model training and performance.

By leveraging GANs, you can create a more comprehensive dataset that covers a broader spectrum of scenarios. This not only improves your model's robustness but also opens up new possibilities for innovation and creativity.

Evaluating Model Performance

Understanding how well your model performs is crucial in the world of AI. You want to ensure that your model not only fits the training data but also generalizes well to new data. Let's explore how you can assess and validate your model's performance effectively.

Metrics for Assessing Overfitting

Common Evaluation Metrics

When evaluating your model, you need to focus on specific metrics that can reveal overfitting. Here are some common ones:

Accuracy: Measures how often the model makes correct predictions. While high accuracy on training data might seem promising, it could indicate overfitting if the test accuracy is much lower.

Loss: Represents the error in predictions. A decreasing loss during training but increasing loss on validation data suggests overfitting.

Precision and Recall: These metrics help you understand the balance between false positives and false negatives, providing deeper insights into model performance.

Tip: Keep an eye on both training and validation metrics. A significant gap between them often signals overfitting.
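To make the tip concrete, the sketch below computes accuracy, precision, and recall for binary predictions and then reports the train-minus-validation gap for each metric. It assumes labels are 0/1 with 1 as the positive class; what counts as a "significant" gap is a judgment call that depends on your task.

```python
import numpy as np

def classification_metrics(y_true, y_pred):
    """Accuracy, precision and recall for binary labels (1 = positive)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = np.sum((y_pred == 1) & (y_true == 1))
    fp = np.sum((y_pred == 1) & (y_true == 0))
    fn = np.sum((y_pred == 0) & (y_true == 1))
    accuracy = float(np.mean(y_pred == y_true))
    precision = float(tp / (tp + fp)) if tp + fp else 0.0
    recall = float(tp / (tp + fn)) if tp + fn else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall}

def generalization_gap(train_metrics, val_metrics):
    """Train minus validation for each metric; large positive gaps hint at overfitting."""
    return {name: train_metrics[name] - val_metrics[name] for name in train_metrics}

train = classification_metrics([1, 0, 1, 0], [1, 0, 1, 0])    # perfect on training data
val = classification_metrics([1, 0, 1, 1, 0], [1, 0, 0, 1, 1])
print(generalization_gap(train, val))
```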

Interpreting Results to Identify Overfitting

Interpreting these metrics requires a keen eye. If your model performs exceptionally well on training data but poorly on validation data, it's likely overfitting. You want to see consistent performance across both datasets.

Insight: Diverse datasets can enhance model robustness and accuracy, reducing the risk of overfitting. When your model encounters varied examples, it learns to generalize better.

Techniques for Model Validation

Cross-Validation Methods

Cross-validation is a powerful technique to ensure your model's reliability. It involves splitting your data into multiple subsets and training the model on different combinations. This helps you assess how well your model performs across various data segments.

K-Fold Cross-Validation: Divides the dataset into 'k' subsets. The model trains on 'k-1' subsets and validates on the remaining one. This process repeats 'k' times, providing a comprehensive evaluation.

Leave-One-Out Cross-Validation: Uses all data points except one for training, testing on the single data point. It's thorough but computationally intensive.
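K-fold splitting is simple enough to write by hand. This sketch, assuming NumPy and a dataset addressed by row index, yields one (training indices, validation indices) pair per fold; setting `k` equal to the number of samples gives leave-one-out cross-validation.

```python
import numpy as np

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, val_idx) pairs; each sample lands in exactly one validation fold."""
    if k < 2:
        raise ValueError("k must be at least 2")
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx

for fold, (train_idx, val_idx) in enumerate(k_fold_indices(10, k=5)):
    print(fold, len(train_idx), len(val_idx))  # 8 training rows, 2 validation rows per fold
```

In practice you would train a fresh model on each `train_idx`, score it on the matching `val_idx`, and average the k scores. Libraries such as scikit-learn provide ready-made splitters (for example `KFold`), which are usually preferable to hand-rolled code.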

Scientific Insight: Cross-validation methods offer a more reliable picture of model performance than a single train/test split, whose result can depend heavily on which examples happen to land in the test set.

Importance of Validation in Model Training

Validation plays a pivotal role in model training. It helps you fine-tune your model, ensuring it doesn't just memorize the training data. By validating your model, you gain confidence in its ability to handle new data effectively.

Key Takeaway: Regular validation helps you catch overfitting early and keep your model adaptable. It acts as a checkpoint, guiding you to make necessary adjustments for optimal performance.

Incorporating these evaluation and validation techniques into your AI projects will enhance your model's reliability and effectiveness. By focusing on diverse datasets and robust validation methods, you pave the way for successful AI implementations.

Case Studies and Examples

Real-world Applications of GANs

Generative Adversarial Networks (GANs) have made waves across various industries. They offer innovative solutions and have been instrumental in achieving remarkable results.

Success stories in various fields

Healthcare: GANs have been widely explored in medical imaging. They generate synthetic images that help train diagnostic models, which can improve accuracy while reducing the need to share real patient data. For instance, researchers have used GANs to create realistic MRI scans, aiding in the development of better diagnostic tools.

Entertainment: In the world of movies and video games, GANs bring characters and scenes to life. They generate realistic animations and special effects, reducing production time and costs. Studios have leveraged GANs to create stunning visuals that captivate audiences.

Fashion: Designers use GANs to explore new clothing patterns and styles. By generating countless design variations, GANs inspire creativity and innovation. This technology allows designers to experiment with bold ideas without the constraints of traditional methods.

Scientific Research Findings: Using GANs to Reduce Overfitting highlights how GANs generate synthetic data, which is crucial for training models in fields like healthcare and entertainment.

Lessons learned from practical implementations

Adaptability: GANs have shown that adaptability is key. By generating diverse data, they help models generalize better, reducing the risk of overfitting.

Innovation: The creative potential of GANs is limitless. They encourage industries to think outside the box and explore new possibilities.

Efficiency: GANs streamline processes, saving time and resources. They allow industries to achieve more with less, driving progress and efficiency.

Overfitting Mitigation in Practice

Overfitting remains a challenge in AI, but leading tech companies have developed strategies to tackle it effectively.

Strategies used by leading tech companies

Data Augmentation: Companies like Google and Facebook use data augmentation to expand their datasets. By introducing variations, they ensure models learn from diverse examples, enhancing robustness.

Regularization Techniques: Regularization, such as adding a penalty on large weights to the training loss, discourages models from becoming too complex. This approach reduces overfitting by encouraging models to focus on essential patterns.

Cross-Validation: K-fold cross-validation is a popular method among tech giants. It provides a comprehensive evaluation of model performance, ensuring reliability across different data segments.
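One widely used regularizer is an L2 penalty on the weights (ridge regression). This sketch, assuming a linear model on NumPy feature arrays and a hypothetical noisy sine dataset, uses the closed-form solution; for simplicity it penalizes every coefficient, including the constant term, which practical implementations usually leave unpenalized.

```python
import numpy as np

def fit_ridge(X, y, alpha):
    """Closed-form ridge regression: minimizes ||X w - y||^2 + alpha * ||w||^2."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)

rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=15)
y = np.sin(np.pi * x) + rng.normal(scale=0.2, size=15)
X = np.vander(x, 13)  # degree-12 polynomial features: a very flexible model

for alpha in (1e-6, 1e-2, 1.0):
    w = fit_ridge(X, y, alpha)
    print(f"alpha={alpha:g}  weight norm={np.linalg.norm(w):.2f}")
```

Larger `alpha` values shrink the weights and produce a smoother curve; `alpha` itself is typically chosen with cross-validation.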

Scientific Research Findings: Detection of Overfitting in AI/ML Models emphasizes the importance of cross-validation and model simplification in reducing overfitting.

Insights from industry experts

Continuous Evaluation: Experts stress the need for continuous model evaluation. By regularly testing models on new data, you can identify overfitting early and make necessary adjustments.

Feature Selection: Selecting the right features is crucial. It ensures models focus on relevant information, improving accuracy and reducing overfitting risks.

Ensemble Methods: Combining multiple models can enhance performance. Ensemble methods leverage the strengths of different models, providing a more robust solution.
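Bagging is one simple ensemble method: train several copies of a model on bootstrap resamples of the data and average their predictions. This sketch uses NumPy polynomial fits as the base model, an arbitrary choice for illustration; the degree, number of models, and data are assumptions.

```python
import numpy as np

def bagged_poly_predict(x_train, y_train, x_query, degree=5, n_models=25, seed=0):
    """Bagging: fit each model on a bootstrap resample, then average predictions."""
    rng = np.random.default_rng(seed)
    preds = []
    for _ in range(n_models):
        idx = rng.integers(0, len(x_train), size=len(x_train))  # sample with replacement
        coeffs = np.polyfit(x_train[idx], y_train[idx], deg=degree)
        preds.append(np.polyval(coeffs, x_query))
    return np.mean(preds, axis=0)

rng = np.random.default_rng(1)
x = rng.uniform(-1.0, 1.0, size=40)
y = np.sin(np.pi * x) + rng.normal(scale=0.2, size=40)
print(bagged_poly_predict(x, y, np.array([-0.5, 0.0, 0.5])))
```

Averaging over resamples dampens the quirks any single fit picks up from its particular sample, which is where the robustness comes from.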

Scientific Research Findings: Methods to Detect and Prevent Overfitting highlight techniques like early stopping, feature selection, and ensemble methods as effective strategies to combat overfitting.
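Early stopping, mentioned above, watches the validation loss and halts training once it stops improving. The class below is a minimal framework-agnostic sketch; the `patience` and `min_delta` parameter names are illustrative, although many libraries expose similar options.

```python
class EarlyStopping:
    """Stop when validation loss hasn't improved by min_delta for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best, self.best_epoch, self.bad_epochs = val_loss, epoch, 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience

stopper = EarlyStopping(patience=3)
for epoch, val_loss in enumerate([1.0, 0.8, 0.7, 0.72, 0.75, 0.9, 1.1]):
    if stopper.step(epoch, val_loss):
        # prints: stop at epoch 5; best epoch was 2
        print(f"stop at epoch {epoch}; best epoch was {stopper.best_epoch}")
        break
```

You would normally restore the model weights saved at `best_epoch`, since later epochs were already drifting toward overfitting.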

By learning from these real-world applications and expert insights, you can implement effective strategies to mitigate overfitting in your AI projects. Embrace the power of GANs and other innovative techniques to drive success and innovation in your field.

Future Directions in Generative AI

Emerging Trends

Innovations in Generative Model Research

Generative AI continues to evolve, with researchers pushing the boundaries of what's possible. You might wonder what new innovations are on the horizon. Well, one exciting area is the development of more efficient algorithms. These algorithms aim to reduce the computational power needed for training models, making generative AI more accessible to everyone.

Another trend is the integration of generative models with other AI technologies. By combining different AI approaches, you can create more powerful and versatile systems. For example, integrating generative models with reinforcement learning can lead to smarter decision-making processes.

Case Study: Using GANs to Address Overfitting in Machine Learning Models highlights how GANs generate synthetic data, which helps train models on larger and more diverse datasets. This approach reduces overfitting and enhances model performance.

Potential Future Applications

The potential applications of generative AI are vast and varied. In the future, you might see these models being used to create personalized content tailored to individual preferences. Imagine having a virtual assistant that generates custom music playlists or designs unique clothing styles just for you.

Generative AI could also revolutionize industries like architecture and urban planning. By generating realistic 3D models of buildings and cities, these models can help architects and planners visualize and optimize their designs.

Challenges and Opportunities

Addressing Ethical Concerns

As generative AI advances, ethical concerns become more prominent. You need to consider issues like data privacy and the potential misuse of AI-generated content. Ensuring that generative models are used responsibly is crucial for maintaining trust and integrity in AI applications.

One way to address these concerns is by implementing strict guidelines and regulations. By setting clear boundaries, you can prevent the misuse of generative AI and protect individuals' rights.

Opportunities for Further Research

Despite the challenges, generative AI offers numerous opportunities for further research. You can explore ways to improve model interpretability, making it easier to understand how these models generate content. This transparency can help build trust and confidence in AI systems.

Another area ripe for exploration is the development of more robust evaluation metrics. By creating better ways to assess model performance, you can ensure that generative AI continues to produce high-quality and reliable outputs.

In conclusion, the future of generative AI holds immense promise. By staying informed about emerging trends and addressing ethical concerns, you can harness the power of these models to drive innovation and create positive change in various fields.

Addressing overfitting in generative AI models is crucial for ensuring they produce reliable and generalized outputs, and understanding why it happens is the first step toward tackling it. Generative Adversarial Networks (GANs) offer one powerful tool, helping you mitigate overfitting by generating diverse synthetic data. As you explore this field, consider the ethical challenges and opportunities for innovation. By staying informed and engaged, you can contribute to the responsible development of generative AI, enhancing user experiences across various industries. Keep pushing the boundaries of what's possible with generative AI.
